News

2024 Red Hat Collaboratory Research Incubation Award Recipients

The Red Hat Collaboratory at Boston University has announced the recipients of its 2024 Research Incubation Awards. Twelve new and renewed research projects received nearly $2 million in funding to support research in topics ranging from reconfigurable hardware to homomorphic encryption, energy efficiency, and training models for autonomous vehicles. This... More

2023 Red Hat Collaboratory Research Incubation Awards Recipients

The Boston University (BU) Rafik B. Hariri Institute for Computing and Computational Science & Engineering: Red Hat Collaboratory is excited to announce the 2023 recipients of the Red Hat Collaboratory Research Incubation Award! The Red Hat Collaboratory is an innovative partnership between Boston University and Red Hat aimed at advancing research... More

Red Hat Collaboratory Developments in the new RHRQ

BY KENNETH RUDOLPH The new issue of the Red Hat Research Quarterly (RHRQ) explores developments in projects associated with the Red Hat Collaboratory. The issue provides updates on the smart village project funded by the Collaboratory’s inaugural Research Incubation Awards, the BU and Red Hat collaboration “Morphuzz”, and the newly-funded undergraduate... More

AI for Cloud Ops Project Featured in Red Hat Research Quarterly

Ayse Coskun, a Research Fellow at the Hariri Institute and Professor of Electrical and Computer Engineering at Boston University, was interviewed for the May 2022 Red Hat Research Quarterly about the Red Hat Collaboratory-funded project, AI for Cloud Ops. Coskun discussed machine learning for operations and how artificial intelligence (AI) ... More

Red Hat Collaboratory Announces 2022 Student Research Projects

Led by BU undergraduates, the open-source projects aim to improve security, efficiency, and intelligence of computing systems. BY GINA MANTICA The Red Hat Collaboratory is excited to announce newly funded Student Research Projects. As part of Boston University’s expanded partnership with Red Hat, the Student Research Projects aim to provide BU students... More

2021 Red Hat Collaboratory Research Incubation Award Recipients

BY GINA MANTICA The Boston University (BU) Rafik B. Hariri Institute for Computing and Computational Science & Engineering: Red Hat Collaboratory is excited to announce the first recipients of the Red Hat Collaboratory Research Incubation Award! Through BU and Red Hat’s expanded partnership, the Red Hat Collaboratory seeks to create more... More

Steps Toward Open Source Education – Red Hat Research Days Event

During a recent Red Hat Research Days event, Jonathan Appavoo, an Associate Professor of Computer Science and a former Junior Faculty Fellow at the Hariri Institute, presented “Steps Toward Open Source Education” alongside Orran Krieger, Co-Director of the Red Hat Collaboratory. Appavoo discussed his work developing educational experiences that teach computer... More

Mentorship in the Red Hat Collaboratory: Compiler episode

The Red Hat Collaboratory provides opportunities for BU students to work with Red Hat engineers to scale the open hybrid cloud. These experiential learning opportunities prepare BU students for industry research. In the latest Compiler episode, a Red Hat podcast hosted by Angela Andrews and Brent Simoneaux, Red Hat Collaboratory... More

Join a Research Days event on Steps Toward Open Source Education

Attend the next Red Hat Research Days event, which will be an exciting discussion on "Steps Toward Open Source Education." Jonathan Appavoo, Computer Science Associate Professor at Boston University, will share his work to develop a hands-on live educational experience for teaching computer science in a real cloud environment that uses JupyterBook and containers powered by... More

Save the Date: Open Research Cloud Initiative Workshop, March 7-9, 2022

The Open Research Cloud Initiative Workshop will be held on March 7-9, 2022 (rescheduled from November 1-3, 2021) at Boston University, Northeastern University, and virtually. Formerly the MOC and Open Cloud Workshop, the new name reflects the creation of the ORCI, a structure to organize, accelerate and support the interrelated... More

Internship & Co-Op Opportunities with Red Hat – 2022

Have an interest in cloud computing/research? Red Hat is looking for interns and co-ops. These postings are for all Red Hat internship/co-op opportunities, but there are opportunities specifically in the research space. Internship Opportunities (starting summer 2022) Software Engineering Internship Technical Internship Product, Marketing & Design Internship Business Tech Internship Co-Op... More

Fall 2021 Request for Proposals Opened

We are pleased to announce that the Red Hat Collaboratory at Boston University has opened a Request for Proposals in support of the launch of the expanded Collaboratory. All information can be found on the RFP announcement page. The deadline for submission is October 1, 2021, and awardees will... More

Expansion of Collaboratory Announced at Red Hat Summit

Boston University (BU) and Red Hat announced a $20 million renewal and expansion of their partnership at Red Hat Summit 2021, including a generous software donation, to drive new talent, processes, and innovations for the open hybrid cloud. Over the next five years, the Red Hat Collaboratory, housed within BU’s Hariri Institute... More

Colloquium: Pause for democracy: Leaving open source storage to help Americans vote in 2020

Red Hat Collaboratory at Boston University Colloquium Sage Weil Ceph Project Lead; Sr Distinguished Engineer, Red Hat Pause for democracy: Leaving open source storage to help Americans vote in 2020 Abstract In 2020 I took a leave of absence to work on democracy and voting related efforts with VoteAmerica, a national get-out-the-vote organization with a... More

Colloquium: Open Technology and Unlock Human Potential

Red Hat Collaboratory at Boston University Colloquium Jonathan Bryce Executive Director, Open Infrastructure Foundation Open Technology and Unlock Human Potential Abstract Throughout human history, a conflict between closed innovation and open sharing has tugged at the progress made possible by technological advancement. When I create something new, do I control the benefits and retain the... More

Colloquium: The Platform Of The Future: What Is An Open Hybrid Cloud And What Does It Mean For Open Source

Red Hat Collaboratory at Boston University Colloquium Hugh Brock Research Director, Red Hat The Platform Of The Future: What Is An Open Hybrid Cloud And What Does It Mean For Open Source Abstract One of the early developments in computing that made it an essential part of both scientific research and business operations was a basic... More

Colloquium: Making AI faster, easier, and safer

Red Hat Collaboratory at Boston University Colloquium Rania Khalaf Director of AI Platforms and Runtimes at IBM Research Making AI faster, easier, and safer Abstract Artificial intelligence is being infused into applications at an ever increasing rate. The proliferation of machine learning models in production has surfaced the need to bridge between the worlds of... More

Colloquium: Open Cloud Testbed: Developing a Testbed for the Research Community Exploring Next-Generation Cloud Platforms

Red Hat Collaboratory at Boston University Colloquium Mike Zink Associate Professor, Electrical and Computer Engineering Department, University of Massachusetts Open Cloud Testbed: Developing a Testbed for the Research Community Exploring Next-Generation Cloud Platforms Abstract Cloud testbeds are critical for enabling research into new cloud technologies - research that requires experiments that potentially change the operation of... More

A fork() in the road – conversation between Uli Drepper & Orran Krieger

Uli Drepper, Red Hat Distinguished Engineer Orran Krieger, Lead/PI Mass Open Cloud (MOC) and Red Hat Collaboratory Next month at the 17th Workshop on Hot Topics in Operating Systems in Bertinoro, Italy, there will be a session on a paper entitled "A fork() in the road" by Andrew Baumann (Microsoft Research), Jonathan... More

Microarchitecture Workshop on Wednesday, February 20, 2019

The Red Hat Collaboratory at Boston University will be holding a Microarchitecture Workshop (with a focus on security) on February 20, 2019, from 10:00 AM to 3:30 PM at the Hariri Institute for Computing, Boston University. The event will convene faculty, graduate students, and industry participants working in the microarchitecture area... More

UKL, a Linux-based Unikernel with a Community-based Approach Created via the Boston University Red Hat Collaboratory

Over at the now + Next Red Hat blog, Ph.D. researcher Ali Raza details a Collaboratory effort he worked on this summer as an intern to build UKL, a Linux-based Unikernel. Ali worked under the advisement of Dr. Orran Krieger at BU as well as Red Hatters Richard W.M. Jones and... More

Tech Talk: Why consider a career as an SRE?

Red Hat Collaboratory at Boston University Tech Talk Mike Saparov Senior Director of Engineering, Red Hat Service Reliability Team Why consider a career as an SRE? Abstract What do SREs actually do? And why do they get paid so much? :) Service Reliability Engineering (SRE) team was originally created at Google to manage their massive infrastructure. According... More

Colloquium: Networking as a First-Class Cloud Resource

Red Hat Collaboratory at Boston University Colloquium Rodrigo Fonseca Associate Professor, Computer Science Department, Brown University Networking as a First-Class Cloud Resource Abstract Tenants in a cloud can specify, and are generally charged by, resources such as CPU, storage, and memory. There are dozens of different bundles of these resources tenants can choose from, and... More

Colloquium: The Future of Enterprise Application Development in the Cloud

Red Hat Collaboratory at Boston University Colloquium Mark Little Red Hat, Vice President of Engineering and JBoss Middleware CTO The Future of Enterprise Application Development in the Cloud Abstract Since the dawn of the cloud, developers have been inundated with a range of different recommended architectural approaches such as Web Services, REST or microservices, as... More

Boston University ranked among Most Innovative Universities in US News & World Report

BU Today posted a story on the new US News & World Report education rankings that place Boston University at the #28 slot of the "Most Innovative Schools" in the US. The Red Hat Collaboratory was cited as one of the programs involved in BU's innovation engine leading to this... More

Colloquium: Towards Tail Latency-Aware Caching in Large Web Services

Red Hat Collaboratory at Boston University Colloquium Daniel S. Berger 2018 Mark Stehlik Postdoctoral Fellow in the Computer Science Department at Carnegie Mellon University Towards Tail Latency-Aware Caching in Large Web Services Abstract Tail latency is of great importance in user-facing web services. However, achieving low tail latency is challenging, because typical user requests result... More

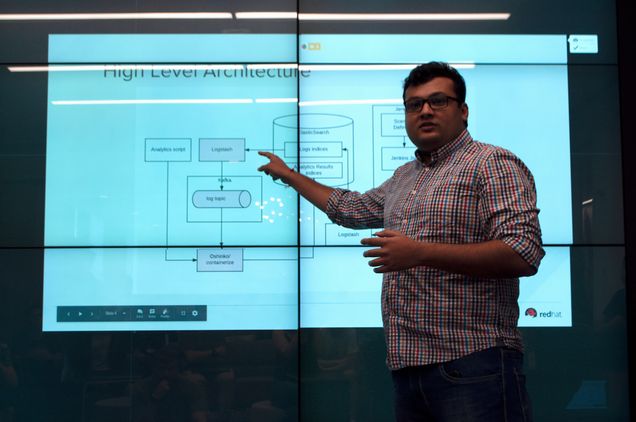

Intern Presentation Series: Container Verification Pipeline, Dataverse/Solr, and Design/Marketing

Every Friday for the past month, Red Hat interns in the Boston office have come together to present their work. Below is an event recap for the following presentations: Container Metrics, Dataverse/Solr, and Design/Marketing. Container Verification Pipeline The first presentation was given by Lance Galletti and covered his work on... More

From Intern to Employee: A Feature Interview with Urvashi Mohnani, Associate Software Engineer at Red Hat

Urvashi Mohnani, Software Engineer at Red Hat, is a regular contributor to the open source community and Red Hat technology. As someone who entered college with no computer science experience, Urvashi never envisioned herself as a programmer. “I joined Red Hat about a year ago as an intern. I graduated... More

DevConf.US 2018

Define the future! Dates & Times Friday, August 17 - Sunday, August 19 9:30 AM - 6:00 PM (This event runs from 9:30 AM - 6 PM each day.) DevConf.us 2018 is the 1st annual, free, Red Hat sponsored technology conference for community project and professional contributors... More

An Inside Look into the ChRIS Project from a Student’s Perspective

At first glance Parul appears deceptively shy. However, my first impression couldn’t have been further from the truth. A second-year master's student at Northeastern, Software Engineer Intern at Red Hat, and aspiring comedian — Parul is articulate, authentic, and intelligent. At Red Hat, Parul is working on a collaborative project between Red Hat, More

Spotlight Interview — Jenny McCauley, Campus Program Manager at Red Hat

Jenny McCauley is the ideal recruiter. Reliable, resourceful, and generous — Jenny is genuinely passionate about helping students find jobs and internships. As a Campus Program Manager, Jenny is responsible for managing Red Hat’s North America Intern Program. Currently, the main focus of her job is on strengthening Red Hat’s partnership with Boston... More

Intern Presentation Series: M2/Foreman, Data Hub, and OpenSCAP

This past Friday, Red Hat interns in the Boston office participated in their second week of presentations. The afternoon consisted of three presentations on: Malleable Metal as a Service (M2)/Foreman, Data Hub, and OpenSCAP. M2 / Foreman The first presentation was given by Ian Ballou and covered his research on Foreman, an... More

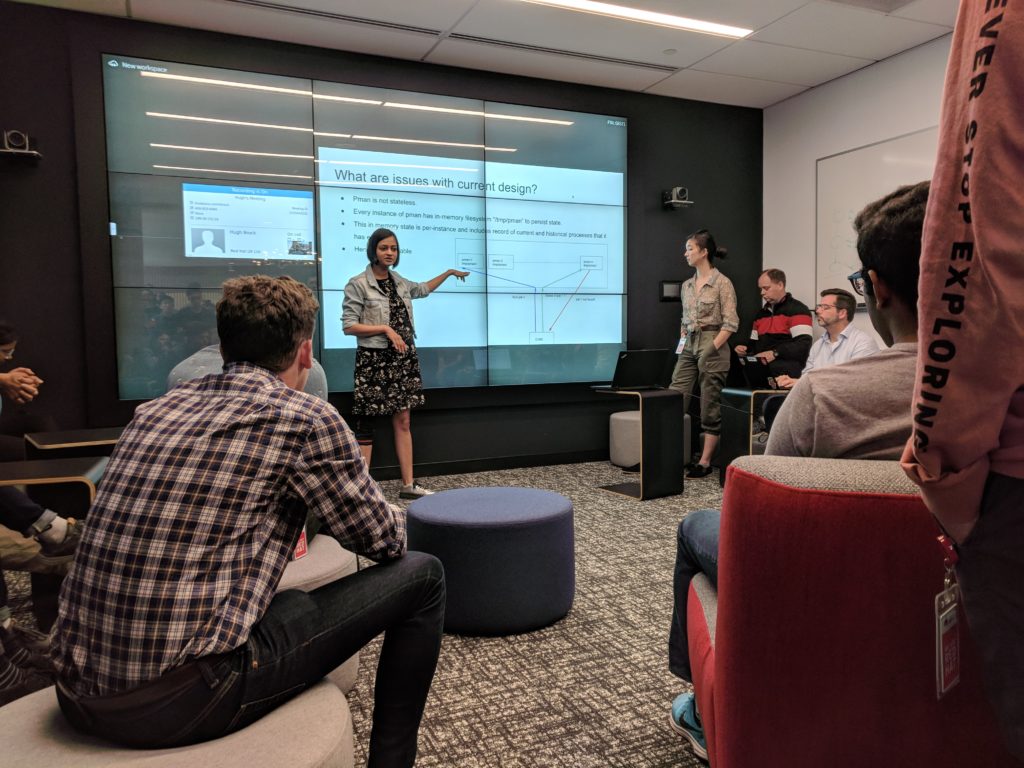

Intern Presentation Series: ChRIS & Artificial Intelligence

Last Friday afternoon in Red Hat’s Boston office, Collaboratory interns came together to participate in the first of a series of weekly intern presentations. ChRIS The first presentation was given by two Boston University students, Chloe and Parul, on ChRIS, a web-based neuroimaging and informatics system for collecting, organizing, processing, visualizing, and sharing medical data. More

ChRIS at the Red Hat Summit

"Ideas Worth Exploring" Keynote with Jim Whitehurst and Dr. Ellen Grant At the Red Hat Summit last month, Dr. Ellen Grant, Director of Fetal and Neonatal Neuroimaging Research at Boston Children's Hospital and Professor of Radiology and Pediatrics at Harvard Medical School, joined Jim Whitehurst up on stage during his keynote, More

Microarchitecture Security Roundtable Report

The 2017 Spectre and Meltdown vulnerabilities showed the world that microarchitecture security problems are real. Given the unique nature of microarchitecture security, topics and research areas discussed covered many aspects of discovery and mitigation. In order to address this important topic, the Collaboratory hosted a roundtable event on Microarchitecture Security on... More

Double your team with interns in a different time zone

Partnering with the Red Hat Collaboratory and Boston University Teaching is one of the best ways to truly learn something. If you’ve ever wondered about bringing on interns as a way of developing your team, exploring new areas and opportunities, and giving back to the open source community, this story is... More

Baremetal Provisioning Roundtable Report

On April 6, 2018, the Collaboratory hosted a roundtable session on Baremetal Provisioning. The focus of this roundtable was twofold: To create a roadmap for upstreaming the secure elastic baremetal provisioning capabilities of HIL, M2 (formerly BMI), and Bolted. These projects are used in and developed by the MOC and... More

ChRIS Code Lab Event Report

On March 27 and 28, the Collaboratory hosted a code lab event for the ChRIS imaging platform at Red Hat's Open Innovation Lab in Boston. ChRIS (ChRIS Research Integration Service) is a cloud-based platform developed as part of a collaborative effort between Boston Children’s Hospital, Red Hat, Boston University, and the... More

Colloquium: ‘Enzian: a research computer’

Red Hat Collaboratory at Boston University Colloquium Timothy Roscoe Professor, Systems Group of the Computer Science Department, ETH Zurich Enzian: a research computer Abstract Academic research in rack-scale and datacenter computing today is hamstrung by lack of hardware. Cloud providers and hardware vendors build custom accelerators, interconnects, and networks for commercially important workloads, but university... More

Red Hat Formally Announces Partnership with Boston University

Red Hat, Inc. has issued a press release detailing the partnership between Red Hat and Boston University. The partnership involves a 5-year commitment and a $5 million grant from Red Hat to be administered by Boston University's Cloud Computing Initiative, housed by the Hariri Institute for Computing and Computational Science... More

Boston University and Red Hat announce $5 Million, 5-year collaboration

As reported in BU Today, Red Hat and Boston University have announced a collaboration with the goal of innovating and advancing research in emerging and translational technologies, including cloud computing and big data. Researchers from both Red Hat and Boston University will work together on advancing these technologies. This announcement... More