The Ineluctable Role of Lifecourse Context, Revisited.

In several previous Dean’s Notes, I have commented that context, or the conditions that shape how we live, is inextricably linked to our collective health. There are well-established theoretical arguments that aim to make this point, and interesting empirical work putting the theory into practice across a range of areas of scholarship. I keep coming back to this point because it seems to me central to our enterprise. If context is an ineluctable component of the causal architecture of population health, then it must be a core focus for those of us concerned with promoting the health of populations.

But do we always think about context as much as we should when we conduct the science of public health? And what are the implications if we do not? To explore this question, it may be instructive to consider lessons from one of the more rigorous scientific designs we use to understand causation: the randomized controlled trial (RCT). RCTs not infrequently aim to test experimentally findings first reported in observational studies. In most cases, the RCT and observational findings agree. Notable discrepancies, however, sometimes arise.

Such discrepancies are explainable if either the observational or the experimental studies in question are flawed or, more technically, not internally valid. But what if both studies are internally valid? Traditionally, we have attributed these differences to “unmeasured” confounding, or to interaction left unaccounted for, in the observational studies. On this view, the observational study failed to fully uncouple “other covariates” from treatment assignment, leaving alternative causal explanations for the associations it documented, with the causal truth then revealed by the experimental study. I do not disagree that discrepant observational and experimental findings are explained by variables the studies did not consider. I would argue, however, that these unmeasured variables may be just as present in the experimental studies as in the observational ones, and that they are quite frequently features of the context in which our studies take place. These discrepancies therefore offer an illuminating window into the central role of context in the study of population health.

I illustrate this point using two well-known examples of discrepancies between observational and experimental findings.

Hormone Replacement Therapy and Coronary Heart Disease

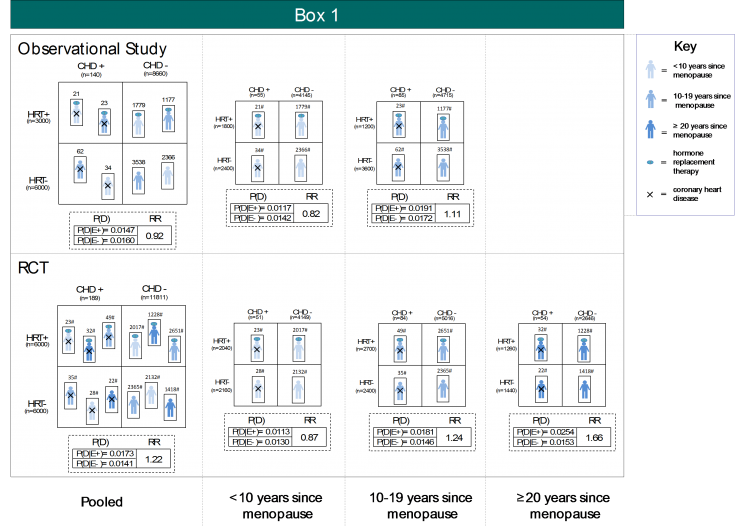

Scholarly arguments about the potential link between hormone replacement therapy (HRT) for post-menopausal women and coronary heart disease raged for the better part of a decade. Much of the debate was fueled by discrepant findings: the observational Nurses’ Health Study (NHS) demonstrated a protective effect of HRT on the risk of coronary heart disease (CHD) (risk ratio = 0.56), while the Women’s Health Initiative (WHI) RCT found a slightly harmful effect (hazard ratio = 1.24). Hernán and colleagues (2008) reanalyzed the NHS as a series of experiments of HRT initiation versus non-initiation, yielding a pooled hazard ratio of 0.96 over eight years of follow-up. Accounting for time since menopause, both in Hernán’s reanalysis and in the WHI, further and convincingly brought the effect estimates from the two studies into alignment. Box 1 provides a numeric illustration, using simulated data, of how this might have arisen. In sum, the substantial discrepancy between the pooled study estimates was driven principally by between-study differences in the distribution of time since menopause (e.g., no participants with ≥ 20 years since menopause in the observational study), a factor that interacts with HRT in determining CHD risk. HRT appears to be slightly protective for women within 10 years of menopause, slightly harmful for those 10 to 19 years since menopause, and most harmful among those 20 or more years since menopause. When we stratify the effect estimates by time since menopause, these between-study differences are greatly reduced.

Therefore, the factor that was unaccounted for, the feature of context that explained the difference between the observational and experimental studies, was time: in this case, the time since menopause of the women in these studies. This shows how differences in the lifecourse context of study participants ultimately accounted for substantial between-study differences, and it challenges our understanding of the drivers of population health.
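To make the mechanism concrete, here is a minimal Python sketch built on invented numbers; none of them are the Nurses’ Health Study, WHI, or Box 1 values. Two hypothetical samples share exactly the same HRT effect within each stratum of time since menopause and differ only in how their participants are spread across those strata. The names `BASELINE_RISK`, `STRATUM_RR`, `OBSERVATIONAL_LIKE`, `TRIAL_LIKE`, and `pooled_risk_ratio` are illustrative assumptions, and the calculation uses expected risks rather than random draws so that it is exactly reproducible.

```python
"""Hypothetical illustration: effect modification by time since menopause.

All risks, risk ratios, and sample compositions are invented for illustration;
they are NOT the values from the NHS, the WHI, or Box 1.
"""

# Hypothetical baseline (untreated) CHD risk in each stratum of years since menopause.
BASELINE_RISK = {"<10": 0.01, "10-19": 0.02, ">=20": 0.03}

# Hypothetical stratum-specific HRT risk ratios, identical in both samples:
# protective early, slightly harmful later, most harmful at >= 20 years.
STRATUM_RR = {"<10": 0.8, "10-19": 1.1, ">=20": 1.5}

# Hypothetical stratum shares: the "observational" sample has nobody >= 20 years
# since menopause, while the "trial" sample is older on average.
OBSERVATIONAL_LIKE = {"<10": 0.80, "10-19": 0.20, ">=20": 0.00}
TRIAL_LIKE = {"<10": 0.35, "10-19": 0.40, ">=20": 0.25}


def pooled_risk_ratio(composition: dict[str, float]) -> float:
    """Marginal (pooled) risk ratio for a sample with the given stratum shares.

    Assumes treatment is independent of stratum within the sample (as under
    randomization), so treated and untreated arms share the same composition.
    """
    risk_treated = sum(
        share * BASELINE_RISK[s] * STRATUM_RR[s] for s, share in composition.items()
    )
    risk_untreated = sum(share * BASELINE_RISK[s] for s, share in composition.items())
    return risk_treated / risk_untreated


if __name__ == "__main__":
    print(f"observational-like pooled RR: {pooled_risk_ratio(OBSERVATIONAL_LIKE):.2f}")  # 0.90
    print(f"trial-like pooled RR: {pooled_risk_ratio(TRIAL_LIKE):.2f}")  # 1.20
    for stratum in STRATUM_RR:
        # Restricting to a single stratum recovers that stratum's RR in either sample.
        print(f"stratum {stratum}: RR = {pooled_risk_ratio({stratum: 1.0}):.2f}")
```

The point of the design is that `STRATUM_RR` is shared by both samples: the pooled estimates diverge (one protective, one harmful) purely because the composition weights differ, which is exactly what stratifying by time since menopause undoes.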

Vitamin C and Coronary Heart Disease

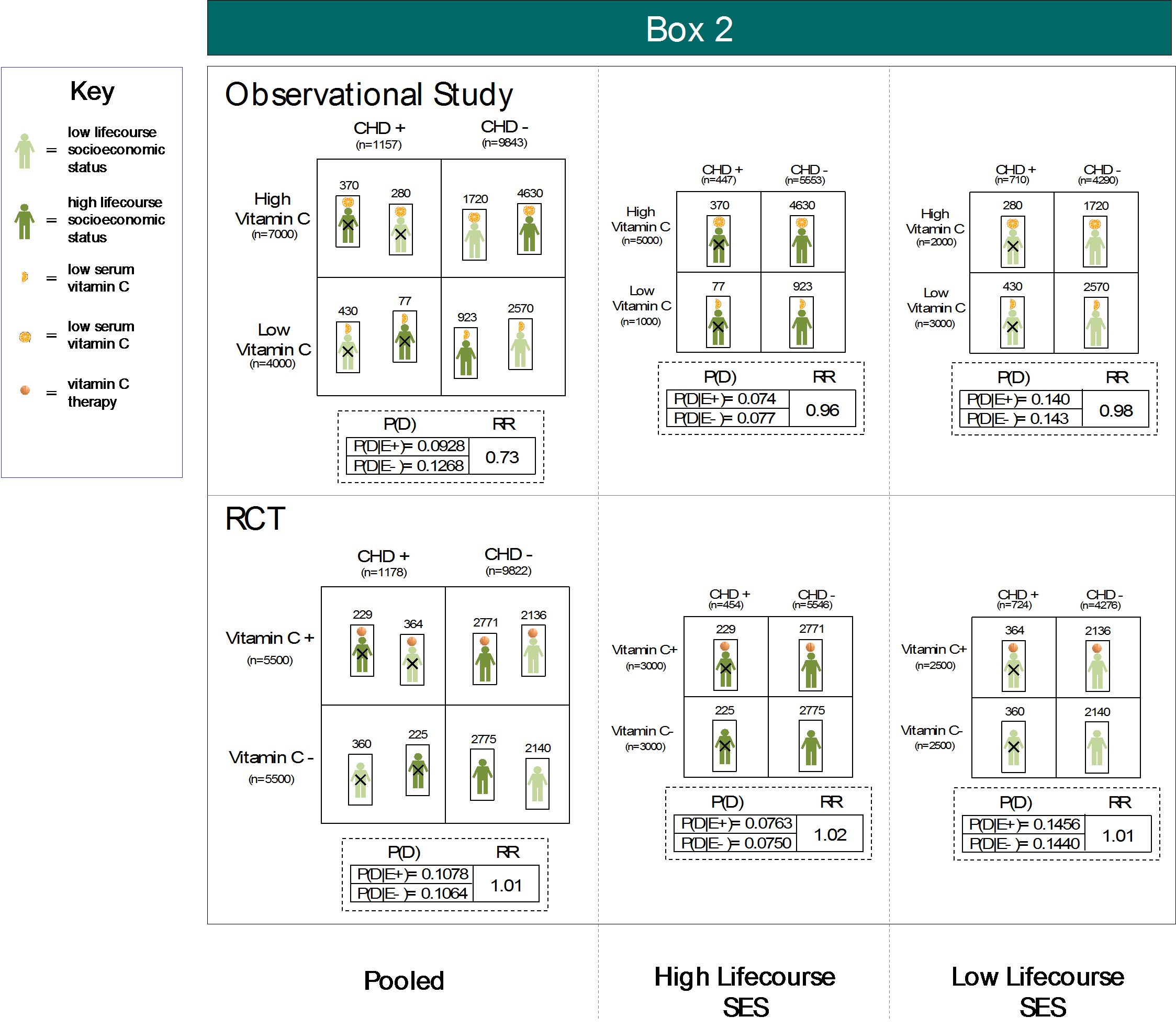

In another classic illustration of this phenomenon, a large observational study of vitamin C and CHD demonstrated a protective effect (hazard ratio = 0.68), while a major RCT demonstrated a null effect (hazard ratio = 1.02). The observational studies used serum vitamin C levels to define exposure status, while the RCTs randomly allocated vitamin C supplementation to study participants. Reanalyses by Lawlor and colleagues in 2004 and 2005 found that the protective effect estimates from observational studies were a product of confounding by lifecourse socioeconomic status (SES). A later prospective study confirmed the positive association between lifecourse SES and circulating vitamin C. In Box 2, we illustrate with simulated data how this spurious association could have emerged. Centrally, circulating vitamin C is positively related to lifecourse SES, which is causally associated with CHD. Thus, classifying participants by high versus low vitamin C is akin to classifying them by high versus low lifecourse SES. Accordingly, individuals with high lifecourse SES, and hence lower CHD risk, were overrepresented in the high vitamin C group of the observational study, pulling the pooled estimate away from the null toward the observed protective value. Randomizing participants to supplemental vitamin C effectively broke the links between lifecourse SES, vitamin C status, and CHD, yielding a null effect in both the pooled and stratified estimates. In this situation, then, it was again lifecourse context that explained the discrepancy, specifically socioeconomic status across the lifecourse of participants.
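A similarly hedged Python sketch can show how confounding alone produces a spurious protective estimate. In this hypothetical setup, CHD risk depends only on lifecourse SES, vitamin C has no causal effect at all, and high serum vitamin C is more common among high-SES participants. All numbers and names (`SES_SHARE`, `P_HIGH_VITC`, `CHD_RISK`, `COIN_FLIP`, `mean_risk`, `risk_ratio`) are invented for illustration and are not the estimates reported by Lawlor and colleagues or shown in Box 2.

```python
"""Hypothetical illustration: lifecourse SES confounding vitamin C and CHD.

Every number is invented. Vitamin C is given NO causal effect on CHD risk.
"""

# Hypothetical population share of each lifecourse-SES group.
SES_SHARE = {"low": 0.5, "high": 0.5}
# Hypothetical P(high serum vitamin C | SES): circulating vitamin C tracks SES.
P_HIGH_VITC = {"low": 0.3, "high": 0.7}
# Hypothetical CHD risk, determined by SES alone.
CHD_RISK = {"low": 0.06, "high": 0.03}
# Trial allocation: supplementation assigned by coin flip, independent of SES.
COIN_FLIP = {"low": 0.5, "high": 0.5}


def mean_risk(weights: dict[str, float]) -> float:
    """Average CHD risk in a group with the given (unnormalized) SES weights."""
    return sum(w * CHD_RISK[s] for s, w in weights.items()) / sum(weights.values())


def risk_ratio(p_exposed: dict[str, float], ses_share: dict[str, float] = SES_SHARE) -> float:
    """Crude risk ratio, exposed vs. unexposed, given P(exposed | SES)."""
    exposed = {s: share * p_exposed[s] for s, share in ses_share.items()}
    unexposed = {s: share * (1 - p_exposed[s]) for s, share in ses_share.items()}
    return mean_risk(exposed) / mean_risk(unexposed)


if __name__ == "__main__":
    # Observational comparison: exposure is measured serum vitamin C, which follows SES.
    print(f"observational crude RR: {risk_ratio(P_HIGH_VITC):.2f}")  # 0.76
    # Randomized comparison: exposure is independent of SES.
    print(f"randomized RR: {risk_ratio(COIN_FLIP):.2f}")  # 1.00
    # Stratified observational comparison: no association within either SES group.
    for group in SES_SHARE:
        single_group = {group: 1.0}
        print(f"{group}-SES stratum RR: {risk_ratio(P_HIGH_VITC, single_group):.2f}")
```

The only difference between the observational and randomized comparisons is the `p_exposed` mapping passed in: when exposure tracks SES, the crude ratio falls below 1, and when exposure is allocated independently of SES, it returns to the null. Restricting `ses_share` to a single SES group reproduces the stratified null.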

In Sum

While discrepancies between observational studies and RCTs are often attributed to the inability of observational studies to sever the link between treatment choice and other determinants of the outcome, these examples argue that lifecourse context is a principal driver of differences between observational and experimental studies. Context, and in these examples lifecourse context, is deeply embedded in the effects we observe and in their external validity to populations beyond the immediate study sample. This argues for a more explicit focus on external validity in public health research, alongside our current focus on internal validity. Less technically, absent consideration of population lifecourse context in our study designs and analyses, population health inference runs a real risk of having limited utility and, at worst, of producing erroneous findings. As I have commented previously, documenting findings that challenge the field’s validity is not cost-free, which should further spur us to consider the role of context, both in shaping our findings and as a focus of population health intervention.

I hope everyone has a terrific week. Until next week.

Warm regards,

Sandro

Sandro Galea, MD, DrPH

Dean and Professor, Boston University School of Public Health

Twitter: @sandrogalea

Acknowledgement: This Dean’s Note is based on work being conducted together with Gregory Cohen, MSW, and presented jointly at scientific meetings.

Previous Dean’s Notes are archived at: https://www.bu.edu/sph/category/news/deans-notes/