Evidence-Based AI in Learning (EVAL) Industry Collaborative

Bridging the Critical Evidence Gap in Educational AI

Generative AI is rapidly transforming K–12 education, offering new opportunities for learning and teaching. Yet fewer than 10% of AI tools in education have undergone independent validation. This gap between rapid adoption and proven effectiveness risks wasted resources, inconsistent learning outcomes, and missed opportunities to improve education.

The Evidence-Based AI in Learning (EVAL) Industry Collaborative bridges this gap by conducting rigorous research to evaluate AI tools’ impact on K–12 student learning outcomes. We provide the research infrastructure and expertise EdTech companies need to demonstrate impact, build trust, and accelerate adoption, ensuring AI innovation truly benefits students.

We are looking for industry partners to conduct efficacy research. Contact us today.

Turning AI Innovation into Real Learning Impact

Decades of research in education and cognitive science have shown how to teach core domains such as literacy, mathematics, and science effectively. We also know that impact depends on context: what works, for whom, and under what conditions. Translating this expertise into the design, evaluation, and implementation of generative AI tools presents both a major challenge and a unique opportunity to turn AI innovation into real learning impact.

The EVAL Industry Collaborative was established by Boston University's Wheelock College of Education and Human Development to meet this challenge. We combine education, cognitive science, and implementation research with cutting-edge methodologies to evaluate AI tools at scale, ensuring they align with how students learn and how teachers teach in diverse classrooms.

Our approach is rooted in empirically proven methods and led by experts in AI, cognitive science, and education. In partnership with EdTech innovators, we provide the research infrastructure and expertise needed to build a stronger, evidence-based foundation for AI in education.

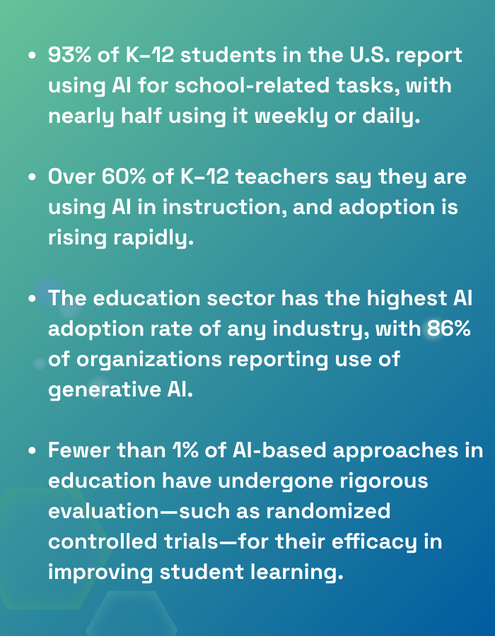

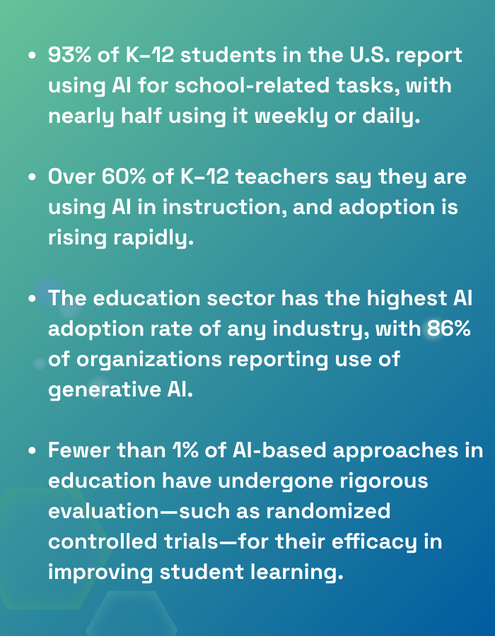

Why EVAL Matters: Statistics & More

- Sources: Microsoft–PSB Insights. AI in Education Report (2025); Gallup & Walton Family Foundation. Voices from the Classroom Survey (2025); Microsoft Education Report (2025); IDC. AI Opportunity Study: Education (2024), cited in Microsoft Education Report (2025); UNESCO. Global Education Monitoring Report on AI in Education (2024).

The Cost of Untested Tools

Schools have often adopted educational technologies that seemed promising but lacked evidence. These untested tools can fail students and disproportionately affect vulnerable populations.

Co-Design for Impact

Educators, students, and families should be active co-designers, not passive users. Their involvement ensures AI tools are equitable, culturally responsive, relevant, and grounded in real classroom needs.

Closing the Gap

Independent, rigorous evaluation is the bridge between research, innovation, and practice. It ensures AI-based approaches are not only innovative but also effective, engaging, and impactful for all learners.

An “FDA for Education”

Just as the FDA safeguards public health by testing the safety and efficacy of medical treatments, education needs a trusted process for evaluating AI tools before they are scaled in schools. Without such safeguards, technologies may widen achievement gaps instead of closing them.

Enter the EVAL Challenge — Prize Package Worth $150,000

Competition Overview

The EVAL AI in Learning Challenge invites developers of innovative AI-powered K–12 learning tools to collaborate with Boston University researchers in independent, gold-standard evaluations that translate innovation into proven learning outcomes.

Core Selection Criteria:

• Learning Impact: Tools that assess and accelerate K–12 learning outcomes with a clear theory of change

• Equity Focus: Tools that address opportunity gaps and expand access for diverse learner populations

• Research Readiness: Capacity to provide technical support for rigorous evaluation while maintaining complete separation from research methodology, analysis, and publication processes

Research Independence Guarantee: The EVAL Collaborative maintains complete methodological independence, with binding commitments to publish all results regardless of outcome. Companies provide technical support only; they will be acknowledged but not involved in research design, analysis, or publication.
