Event Recap: Addressing Inequality in Artificial Intelligence

BY: NATALIE GOLD

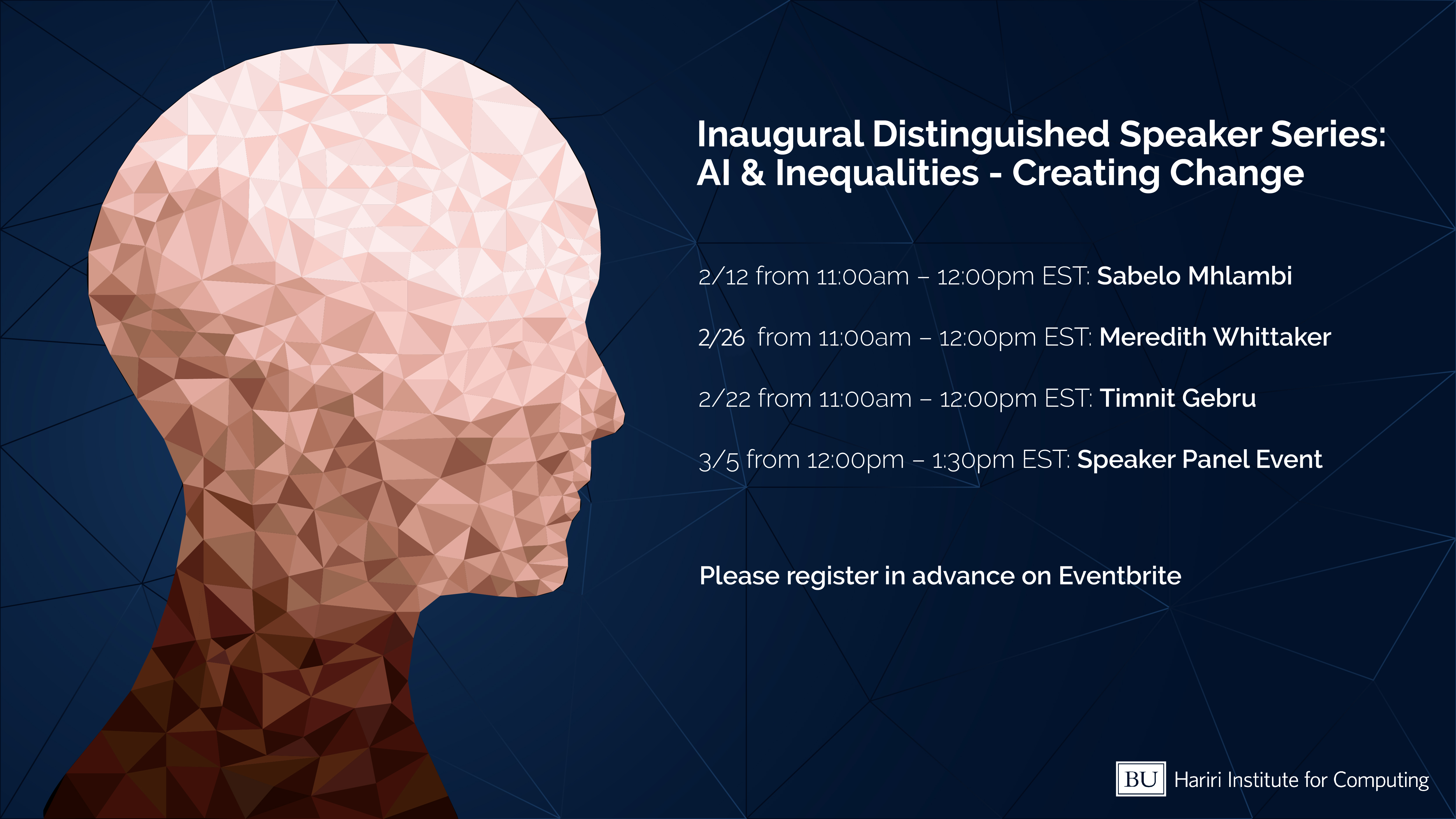

When you open your phone with facial recognition or notice suggested products on your social media accounts, that is artificial intelligence (AI) at work. Though AI is useful and can help complete a variety of tasks that usually require human intelligence, it is also laced with problems of inequality and bias. BU’s Hariri Institute for Computing hosted three guest speakers at the forefront of creating change in the field of AI as part of the inaugural Distinguished Speaker Series: AI & Inequalities-Creating Change. Sabelo Mhlambi, Timnit Gebru, and Meredith Whittaker presented individually on topics ranging from the colonial values embedded in AI to AI ethics at big tech companies, and then came together for a public panel event.

Sabelo Mhlambi, a Technology and Human Rights Fellow at Harvard's Carr Center for Human Rights Policy and founder of Bantucracy, was the first presenter in the series. Mhlambi discussed how AI researchers can reject colonial values by reversing the dehumanization caused by racism, capitalism, and colonization. He proposed incorporating an Ubuntu framework into AI. Ubuntu is a sub-Saharan African moral philosophy which holds that a person is connected to other persons of the past, present, and future, and is a person because of these connections. Mhlambi suggested that the Ubuntu framework can help build machines and systems that reflect and value the well-being of communities. "Solutions require the active involvement of all those concerned," said Mhlambi.

Timnit Gebru, a computer scientist and co-founder of Black in AI, spoke about her journey to realizing that technology and science are not separate from society. When researchers don't address societal issues in their work, a hierarchy of knowledge can emerge in which some ideas are treated as more important than others. Ideas from fields such as history and critical race theory may be perceived as unimportant, and work that incorporates these ideas and addresses social issues is therefore seen as lesser. Gebru now tries to bring ideas from outside computer science into her AI research, since incorporating social perspectives can reveal some of the biases in AI. "Gatekeeping is always done by those in power," said Gebru.

Meredith Whittaker, Minderoo Research Professor at New York University, founder of Google's Open Research Group, and co-founder of the AI Now Institute, addressed the intertwining of academia with big tech companies. Only a handful of companies have the computational power, wealth, and datasets needed to build and scale AI. Many computer science programs at colleges and universities rely on these companies for infrastructure and funding; in return, the companies rely on the schools to train and educate the talent they will eventually hire. This entanglement makes it difficult to criticize the tech industry. Whittaker explained that she has seen the same pattern over and over again: researchers ask critical questions of tech companies, conduct research that might threaten those companies, and then face opposition designed to silence their work. "Google is now sure enough of its power that it is no longer pretending to tolerate criticism of its work," said Whittaker.

On March 5, 2021, the three distinguished speakers came together to answer questions about the role of big tech in AI research, how AI is intertwined with social and labor movements, and what researchers entering the field of AI should know. All the speakers encouraged students to bring their whole selves to their research, including their personal backgrounds and experiences. Whittaker spoke to the power of collective action, pointing specifically to the choice not to work for a certain company. She suggested that those who have the privilege to turn down a job should explain why and amplify their message. Gebru noted that collective movements can support and defend critical research, and Mhlambi echoed these sentiments, suggesting that rejecting broken systems becomes possible only when the push comes from a larger movement.

These kinds of discussions are just the beginning of addressing biases and inequalities in AI. The Hariri Institute's inaugural Distinguished Speaker Series highlighted the importance of addressing these problems not only at conferences and lectures but also within the AI field itself. Given how fast the field of AI is growing, solutions for more ethical AI and technology need to be implemented sooner rather than later.

To watch the recordings of events from this series, please visit here.

The next Distinguished Speaker Series: Machine Learning for Model-Rich Problems will begin on Friday, April 16th at 11:00 AM. For details and event registration, please visit here.