BU-developed, brain-inspired algorithm could improve word recognition accuracy in noisy situations

When a group of friends gets together at a bar or gathers for an intimate dinner, conversations can quickly multiply and mix, with different groups and pairings chatting over and across one another. Navigating this lively jumble of words, and focusing on the ones that matter, is particularly difficult for people with some form of hearing loss. Bustling conversations can become a fused mess of chatter, even for someone with hearing aids, which often struggle to filter out background noise. It’s known as the “cocktail party problem,” and Boston University researchers believe they might have a solution.

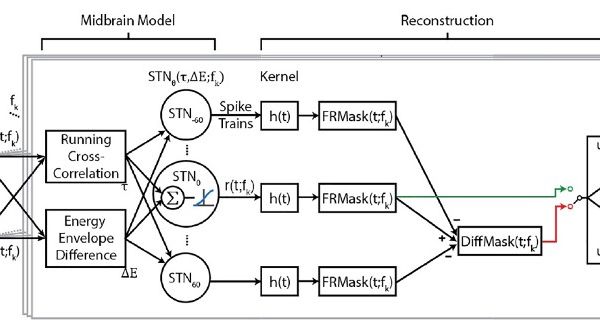

A new brain-inspired algorithm developed at BU could help hearing aids tune out interference and isolate single talkers in a crowd of voices. In testing, researchers found it could improve word recognition accuracy by 40 percentage points relative to current hearing aid algorithms.

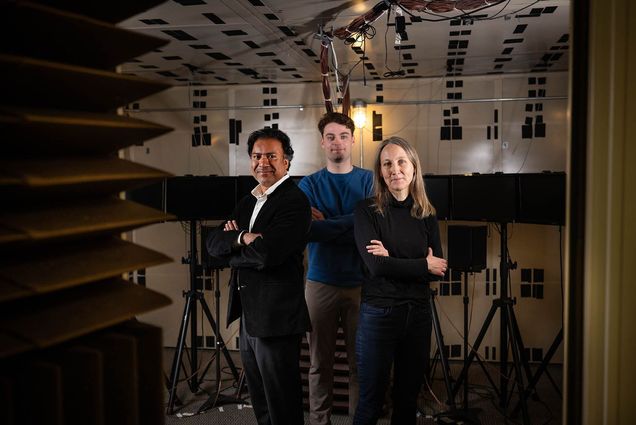

“We were extremely surprised and excited by the magnitude of the improvement in performance—it’s pretty rare to find such big improvements,” says Associate Professor Kamal Sen (BME), the algorithm’s developer. The findings were published in Communications Engineering, a Nature Portfolio journal.

Some estimates put the number of Americans with hearing loss at close to 50 million; by 2050, around 2.5 billion people globally are expected to have some form of hearing loss, according to the World Health Organization.

“The primary complaint of people with hearing loss is that they have trouble communicating in noisy environments,” says Virginia Best, a Sargent College professor who collaborated with Sen on the study. “These environments are very common in daily life and they tend to be really important to people—think about dinner table conversations, social gatherings, workplace meetings. So, solutions that can enhance communication in noisy places have the potential for a huge impact.”

Read the full story at The Brink.