Strength in Numbers: Robots Learn to Work Together

By Sara Cody

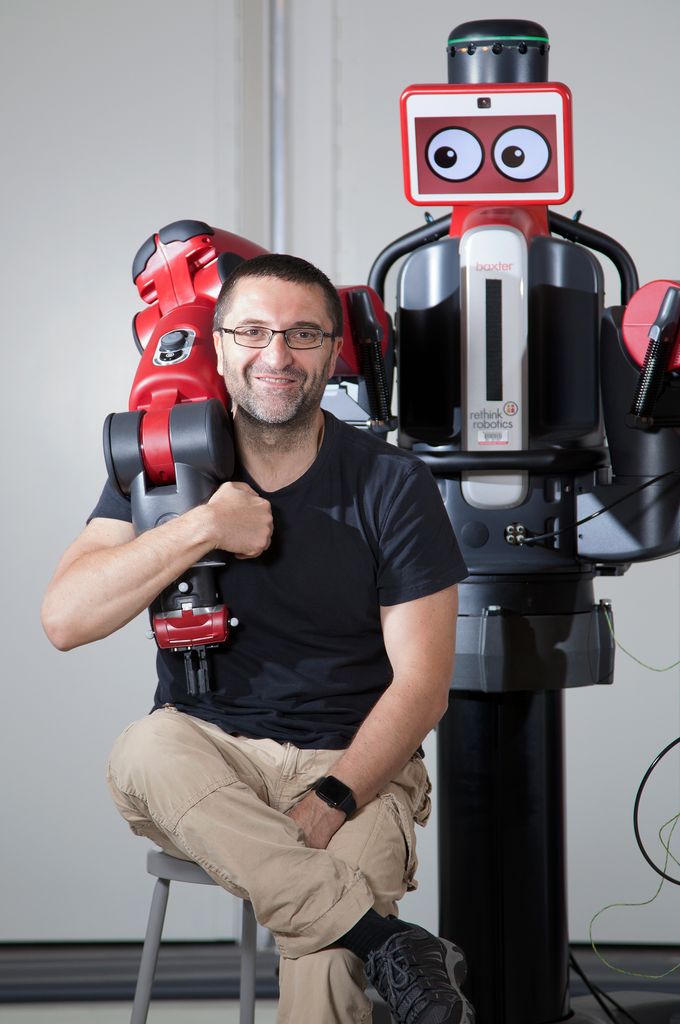

Agriculture. Automotive. Medicine. Biotechnology. Name an industry, and Professor Calin Belta (ME, SE, ECE) can tell you how robotics will impact it, if it hasn’t already. Robots will help ease some of society’s greatest problems and stressors in a variety of ways, from performing automated tasks to helping with housework to preventing dangerous situations. As they grow smarter, robots will also become more autonomous.

Photo by Dana Smith for Boston University Photography

Many of the largest, most resource-rich companies in the country, including Google, Amazon and Uber, are now investing in autonomous robots. Because it doesn’t make sense for an academic institution to compete financially with such deeply resourced companies, BU researchers are taking distinctive approaches to advance the field, focusing on improving the underlying systems so that they work better.

“My group brings in this kind of rigorous thinking that people use from computer science so we can use these platforms in dynamical systems and robotics to come up with smarter ways to control them,” Belta explains. “We do this by bringing together two fields: one is machine learning and the other is formal methods, which is highly theoretical and seeks to prove the correctness of systems by developing mathematical algorithms to ensure what you make does what it’s supposed to do.”

The College of Engineering supports interdisciplinary research with resources like the Robotics Lab, directed by Belta and located behind the Engineering Product Innovation Center (EPIC). His research group, the Hybrid and Networked Systems (HyNeSs) lab, focuses on making robots smarter and able to perform tasks autonomously. Improving machine learning, the mechanism that allows robots to become smarter as researchers track their progress, offers valuable insight that in turn helps answer other questions.

Unlike an algorithm designed to predict outcomes neatly, machine learning is messy. It works through trial and error: the robot learns from whatever data is immediately available to it, without any background or context. Still, the process offers important insight into how neural connections form as the machine learns, and that insight can improve systems going forward. Belta aims to clean up machine learning and make it more rigorous, or, in the industry’s term, explainable.

“Understanding the ‘how’ of an idea working is crucial to improving it and making it smarter,” Belta says. “It’s one thing if an image algorithm that classifies between cats and dogs makes a mistake, and another thing entirely if a safety-critical system fails, like in a space application where lives depend on it working the way it’s supposed to.”

Funded by the Department of Defense, one of Belta’s projects concentrates on persistent surveillance, where teams of robots are sent out to survey an area. Belta uses motion capture technology and floor projections in the Robotics Lab to run disaster relief scenarios, where the goal is to send a robot into a disaster zone and have it find its way through collapsed buildings and debris.

“The idea is to create heterogeneous robots both in the air and the ground that can go into an unknown or hostile environment,” he says. “Instead of sending in people to survey an area, you can send in a robot to identify enemies, collect and interpret data, and move around this unknown environment so they don’t collide into things.”

Belta and his team plan to use robots to build a map, identify areas of interest and locate survivors. They want the robots not only to gather important data about disaster zones but also to demonstrate self-awareness, such as knowing when to return to recharge. In addition to disaster relief and military applications, the technology could be used in agriculture to survey crops.

“Right now, we are working to address technical questions like how we track movement outside of a controlled environment, or even without GPS, which would be useful in military situations where activating GPS is dangerous,” Belta notes. “Answering those fundamental questions about localization is important and helps answer other important questions like how we have the robots actually move around and collect data, or even recognize survivors in a disaster scenario.”

Other engineering disciplines are also exploring this field. Faculty members in electrical and computer engineering, as well as biomedical engineering, are investigating how robotics and autonomous systems can benefit society in a variety of applications.

CONNECTED CARS

The driest period in California history began in 2011 with a drought that lasted until April 2017. Prolonged rainless periods turned vast expanses of forest into kindling, and the Los Angeles Times reported that firefighters were battling “larger and more aggressive wildfires as drought conditions [continued].” Fires that were not caught quickly often grew so large and unwieldy by the time help arrived that they could not be contained. Fire does not discriminate in its path of destruction, and countless wildlife habitats and human homes alike were destroyed.

Imagine a fleet of hundreds of small, inexpensive robots, each equipped with sensors that survey an area for incidents of extreme heat, deployed in a dried-out national forest. If one robot detects a major temperature fluctuation, it can call on two or three of its comrades to come and assess the situation, provided the robots are mobile rather than stationary and their collective data, accurately representing the situation, can be relayed back. The robots might even act on that information and begin quenching the flames if a fire has already started. According to Professor Christos Cassandras (SE, ECE), when it comes to autonomous systems, there is strength in numbers.

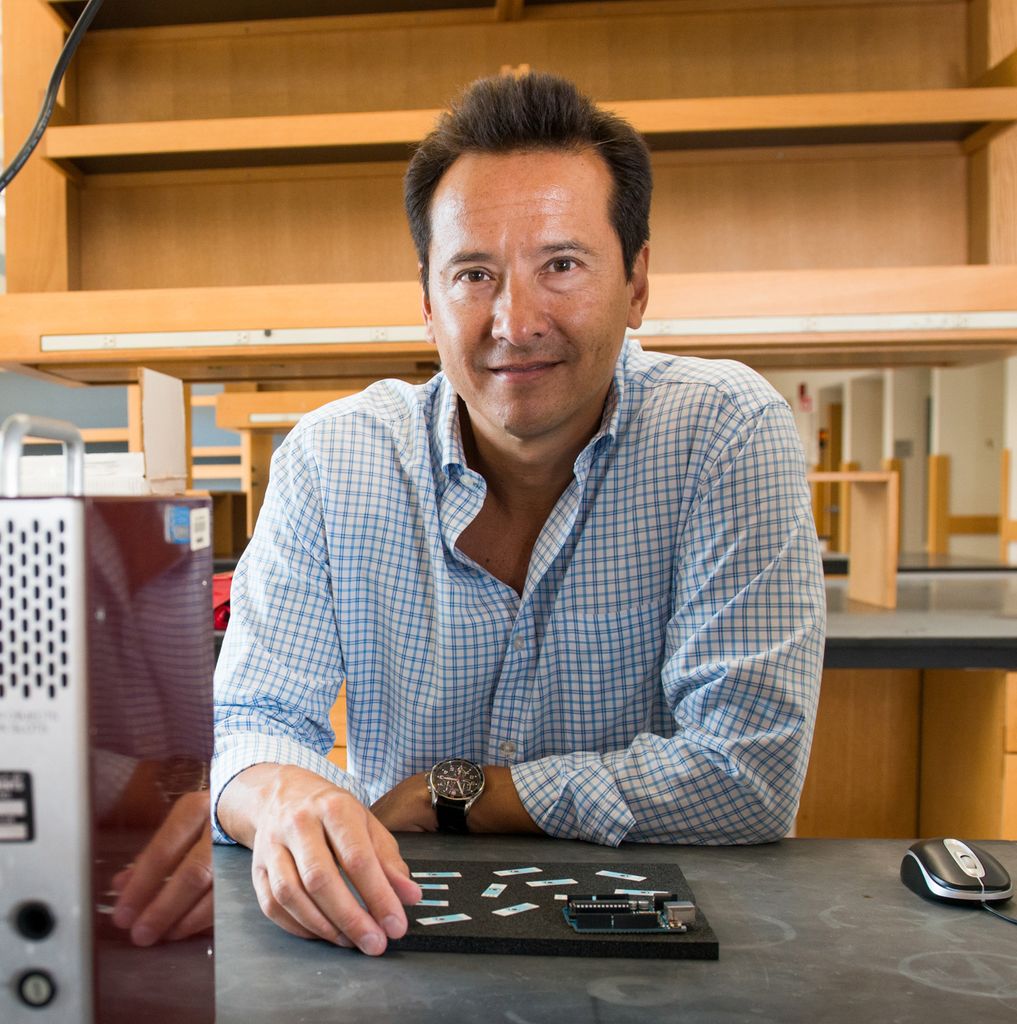

Photo by Conor Doherty for Boston University Photography

“Autonomy means that a device has the ability to not only guide itself without the continuing participation of an operator, but also to make decisions and change its behavior accordingly,” he says. “Intelligence is part of autonomy, the ability to sense the environment and react to different conditions, above all to communicate and cooperate. But cooperation is also integral to autonomy as well. What you don’t want is one robot or one big computer to control everything. That leaves the system vulnerable to security breaches, it’s expensive and it has a single point of failure. If that computer or robot goes down, you’re done.”

Cassandras and his research group, the Control of Discrete Event Systems (CODES) laboratory, are interested in Smart Cities; much of their research focuses on making teams of robots work together to address problems in society. One such project involves connecting autonomous vehicles wirelessly to create an “internet of cars” that can communicate with one another and with their surroundings.

Cassandras points out that humans are terrible drivers: we drive when we are tired and stressed, we can’t see all around us at any given time, we get distracted, and we don’t react quickly enough to environmental stimuli. Computers, however, thrive in this type of environment, which is why he wants to build such a network of autonomous cars to make driving safer, more efficient and less stressful.

“Most of the time when you develop new technology, there are a number of tradeoffs, but with autonomous cars, once the technology is in place, the result is a win-win-win,” he explains. “You win because you cut down congestion and shorten travel times. You win because you save energy. You win by protecting the environment more by decreasing the amount of toxic emissions.”

Working with researchers at the University of Delaware, the University of Michigan and Oak Ridge National Laboratory, and with Bosch as a corporate partner, the group received a $4.4 million grant from the NEXTCAR program of the Energy Department’s Advanced Research Projects Agency-Energy (ARPA-E). The project’s goal is to design control technology that enables a plug-in hybrid car to communicate with other cars and with city infrastructure, and to act on that information. Cars with situational self-awareness will be able to efficiently calculate the best possible route, accelerate and decelerate as needed and manage their powertrains.

“My vision is not the Google car, which is full of expensive hardware and software, because you don’t need expensive computers to make autonomy possible,” Cassandras says. “It’s connectivity that we need to make it happen, GPS that can tell us where we are and our relative distance towards one another and our goals—and that’s where it’s really going to pay off. That will require new hardware, but not necessarily expensive hardware.”

Today, stoplights, high traffic volume and poorly designed infrastructure that creates bottlenecks all contribute to congestion. The resulting stop-and-go driving not only wastes energy but also releases the most harmful emissions into the atmosphere. Traffic takes a toll on quality of life as well: a long rush-hour commute is hugely stressful for many people, and anyone who has commuted to or around Boston can attest to the need for a new mode of transportation.

“Five years ago, this could have been considered science fiction, but we are already starting the culture shift towards acceptance,” Cassandras notes. “And it will take work to address certain challenges, like the technology, security, privacy and other social challenges, like legal aspects of accountability. But it’s an exciting time to be doing this work because BU is approaching this from every angle. It’s very interdisciplinary, and that is a key takeaway.”

HARNESSING AUTONOMOUS MICROBES

While robots and cars might seem obvious candidates for autonomous systems, Professor James Galagan (BME, CAS) envisions systems that can monitor our health on a much smaller scale. He is working toward harnessing the power of microbes, which he believes can be designed to home in on specific biological signals autonomously, offering a portable and inexpensive method to sense biology.

“Right now, our autonomous technology in biology is blind to a big chunk of the world because when it comes to being able to sense other biology with the level of specificity and sensitivity needed, we just aren’t there yet,” Galagan says. “When it comes to sensing things like biochemicals, the sensors themselves are the key because they are essentially the eyes for the entire system.”

Galagan, a biomedical engineer, spent 12 years mapping genomes at the Broad Institute of MIT and Harvard. He recognized that because bacteria have been exposed to a diverse set of stimuli over millions of years, they have evolved a vast number of molecular mechanisms to sense and respond to their environment. Knowing that, he saw an opportunity not only to learn from microbes but also to salvage them for parts to use in our technologies.

“We want to identify those parts, pull them out of the bacteria and embed them in our own devices by converting biological parts into electronic parts,” he explains. “But how do we go into nature and essentially shop from nature’s shopping list of parts that is written in a language we don’t understand yet? The genes that we get from a microbe give us access to the best recipe that three billion years of evolution has come up with, and once we have the schematic of the microbe, we can tweak the recipe in order to improve it for our purposes.”

While today’s portable sensors are biased toward physical and electromagnetic stimuli, Galagan wants to create sensors sensitive enough to detect enzymes, proteins, DNA and other biochemicals that can be used to monitor health. His idea has precedent in the single most successful biological sensor available: the glucose monitor, which people with diabetes use to track their blood sugar. The glucometer employs an enzyme, glucose oxidase, that evolved in microbes to help digest glucose. Much later, the enzyme was incorporated into a device to do work for us. Galagan points out that this singular success is just the tip of the iceberg.

Current efforts to develop biological sensors depend on a limited number of molecular parts available in biochemical catalogs. “Our goal is to cut out the middle man and head straight into nature to identify parts of bacteria that would work well in sensors. We don’t want to use the bacteria themselves because it would require keeping a living cell alive, which is hard to do, and I don’t know many people who would want to attach different bacteria to our bodies,” Galagan says. “Taking the parts out of the microbes avoids this problem. And thanks to the glucometer, we know this idea can work. There is no reason to believe that glucose oxidase is the only enzyme that would work well in our sensors. It would be highly unlikely that we have hit the only jackpot on the first try.”

Galagan is a founding member of the Precision Diagnostics Center (PDC), a research center that seeks to leverage point-of-care technologies to enable precision medicine across a wider swath of disease areas, building on the success of the Center for Future Technologies in Cancer Care (which will now fall under the umbrella of the PDC). The center will bring together a multidisciplinary group of engineers, scientists and medical providers to identify new opportunities to improve diagnostic protocols. While autonomous technology is poised to significantly affect the healthcare industry through surgical robotics and other healthcare-related systems, Galagan’s vision is to spark a cultural shift that empowers people to think about their health before they get sick.

“These sensors are great for health applications, but I am keen on empowering the average Joe in terms of wellness. There will be a time in our lives when our technology will be able to beam much information about your biology at you, and this accessible information will impact how people make informed decisions about their lifestyles,” he says. “It’s not only about disease and only checking under the hood when you’re sick. It’s about enhancing overall wellness and lives.”

This story originally appeared in the Fall 2017 issue of ENGineer, the College of Engineering alumni magazine.