By ecaughey

The Coral Calamity

Environmental changes to coral reefs threaten aquatic biomes.

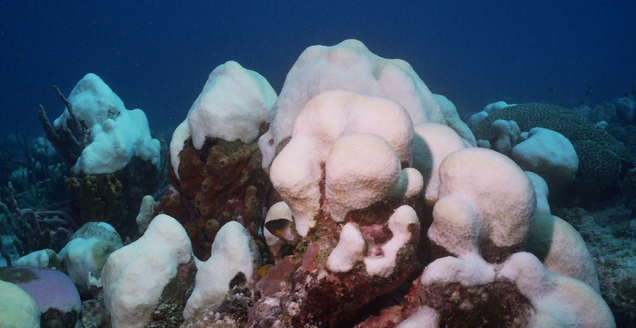

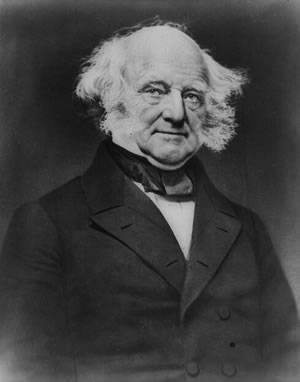

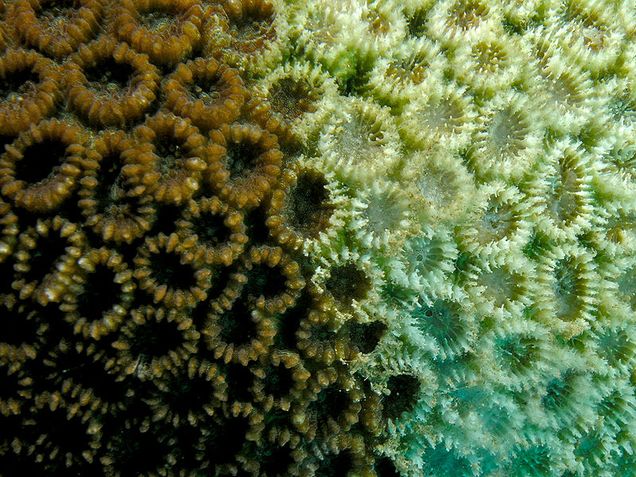

Slight increases in water temperature cause coral bleaching. Credit | Nhobgood via Wikimedia Commons

What if everything you relied upon for survival – your food, your home, your protection – was suddenly stripped away? This tragedy is happening right now to aquatic organisms such as plants, fish and other animals because of the alarming death rates of coral reefs. Corals, invertebrate animals whose skeletons are composed of crystalline calcium carbonate, sit near the base of ocean food webs, feeding on phytoplankton and zooplankton.1 Coral formations such as reefs cover an estimated 0.1-0.5% of the ocean floor, in warm and tropical waters surrounding islands.2 Corals can only survive within a narrow optimal temperature range of approximately 73 °F-79 °F.3 These thermal limits mark the boundaries within which corals can thrive, so even slight changes driven by recent global warming trends, rising CO2 levels and over-nutrification are proving disastrous. Because corals are so important as a habitat for other organisms, the 80% decline in coral populations across various tropical and subtropical regions of the World Ocean poses a growing threat to global aquatic biodiversity.4

Importance of Corals

Coral reefs are some of the most productive and diverse communities on the planet, and they provide many ecological services. Reefs act as physical barriers to ocean currents and waves and, over time, create suitable environments for sea grass beds and mangroves (as can be seen in Figure 1).2 The high species diversity of coral reefs makes this aquatic biome one of the most biodiverse on Earth, providing homes and resources for countless marine animals.5 By blocking or slowing waves before they reach coastal cliffs, reefs also slow erosion and allow land to build up. In addition, coral reefs provide biotic services, offering suitable sites for nursing, spawning, feeding and breeding to many organisms, including algae, fish, sharks, eels and sea urchins. Furthermore, reefs serve biogeochemical functions such as nitrogen fixation in nutrient-poor environments.2

Corals also benefit humans. Reefs yield seafood products consumed and enjoyed around the world and account for 9-12% of the world’s fisheries.2 Coral reefs support the tourism industry by providing swimming and diving areas, and they supply aesthetic goods such as jewelry and souvenirs.5 Coral has also proven useful in medicine: coral skeletons have been used successfully in bone graft operations, and coral reef substances are increasingly included in pharmaceutical products. Finally, the trade supplying household and public aquariums accounted for $24-40 million in 1985 and has grown rapidly since.2 Understanding the importance of corals motivates us to assess the causes and effects of coral population decline, and shows why this topic deserves our attention.

In total, corals contribute an estimated $30 billion to the global economy.6 However, a combination of natural and anthropogenic (human-induced) disturbances has caused global declines of up to 80% in coral reefs over the past three decades.7 The decline is worldwide, with parts of many reefs being lost rapidly.8

Causes and Effects of Coral Population Decline

One of the main causes of the approximately 80% decline in coral populations is rising global temperature, which is accompanied by other stressors such as increasing ocean acidity; both are driven by rising CO2 levels.1,4,9 A rise of approximately 1-2 °C (roughly 2-4 °F) in water temperature in the tropical zones of the world’s oceans is likely caused by global warming.4 Prolonged exposure to elevated temperatures results in the partial or complete loss of corals. Because corals live in environments dangerously close to the upper end of their optimal range of 73 °F-79 °F, they are vulnerable to thermal stress, which can kill them.3,7,10 Increasing anthropogenic carbon dioxide (CO2) warms the atmosphere and the ocean; these rising upper-ocean temperatures cause widespread thermal stress, resulting in a phenomenon known as coral bleaching.6 Coral bleaching gets its name because affected corals lose their color and turn white when the symbiotic algae known as zooxanthellae are expelled from the host coral.2
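Converting between the two temperature scales is a common source of confusion: an absolute reading converts with °F = °C × 9/5 + 32, but a temperature change converts with the 9/5 factor alone. A 1-2 °C rise therefore corresponds to only about 2-4 °F, even though 1-2 °C read as a thermometer value is 33.8-35.6 °F. The minimal Python sketch below (the function names are illustrative, not taken from any library) makes that distinction explicit and also expresses the 73 °F-79 °F optimal range in Celsius.

```python
def celsius_to_fahrenheit(temp_c: float) -> float:
    """Convert an absolute temperature reading from °C to °F."""
    return temp_c * 9 / 5 + 32


def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert an absolute temperature reading from °F to °C."""
    return (temp_f - 32) * 5 / 9


def celsius_change_to_fahrenheit(delta_c: float) -> float:
    """Convert a temperature *change* from °C to °F.

    A change only scales by 9/5; the +32 offset cancels because it
    appears in both the starting and the ending reading.
    """
    return delta_c * 9 / 5


if __name__ == "__main__":
    # Optimal coral range quoted in the text, expressed in °C.
    low_c, high_c = fahrenheit_to_celsius(73), fahrenheit_to_celsius(79)
    print(f"Optimal range: {low_c:.1f} °C to {high_c:.1f} °C")

    # The same numbers mean very different things as readings vs. changes.
    for value_c in (1.0, 2.0):
        print(f"{value_c} °C as a reading = {celsius_to_fahrenheit(value_c):.1f} °F; "
              f"as a rise = {celsius_change_to_fahrenheit(value_c):.1f} °F")
```

The separate function for changes is deliberate: reusing the reading formula on a difference silently adds 32 degrees.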

Calcification, the process by which corals deposit calcium carbonate to build their skeletons, is depressed by increased temperature and CO2 levels; this depression inhibits coral skeletal growth and damages coral health.7 Although corals can recover from bleaching events, these events can still leave lasting damage on some corals. Examples of coral decline after a large bleaching event can be seen in Figure 2. Bleaching can also be caused by a decrease in salinity, due to run-off from clear-cutting and urbanization, and by the release of poisonous substances such as heavy metals.2

Atmospheric CO2 levels are currently 30% higher than their natural levels over the last 650,000 years, and in the last 200 years, approximately one third of all CO2 emissions have been absorbed by the ocean, affecting marine organisms at all depths.12 When carbon dioxide dissolves in seawater, it forms carbonic acid, making surface waters more acidic. This acidification can harm corals and disrupt countless biological and physiological processes, such as coral and sea cucumber growth, sea urchin fertilization and larval development, especially in organisms that cannot survive outside a certain pH range.12 Ocean acidification caused by increasing CO2 levels decreases calcification rates in corals, which in turn contributes to the decline of coral populations.7

Another major anthropogenic contributor to coral decline is water pollution from waterside construction sites and run-off from deforested land. Anthropogenic nutrification, the continuous excess nutrient enrichment of coastal waters, contributes heavily to coral decline: populated sites have significantly higher nutrient levels than undeveloped sites, and the undeveloped sites had greater coral cover.11 Corals must also endure mechanical damage from ships, anchors and tourists colliding with them, and this damage becomes harder to withstand over time because corals are not strong enough to endure constant grazing and spillages.4

Future of Coral Populations

An essential part of saving coral populations from further decline is proper assessment of the situation. Researchers should measure biotic pigments such as chlorophyll in the water around reefs, because these pigments play key roles in the uptake and retention of nutrients; doing so would help researchers better understand and address nutrification problems.11 Aerial photographs, airborne sensors and satellite images will likely play a growing role in observing and assessing coral reef structures and communities.9 Remote sensing technology is also a plausible way to monitor coral reef growth in the future and to find links between that growth and land-based processes.13 Satellite imaging, with its spatially and temporally referenced data, appears to be a good way to improve our understanding of coral reef structure and growth, as well as potential threats to this diverse aquatic system. Remote-sensing data are already used to map and monitor coral reefs, and given these advantages, this use is likely to increase.6

The threat of large-scale coral extinction is very real: an estimated 80% of reef-building coral populations have already been lost in shallow areas of tropical and subtropical zones.4 Researchers expect that coral bleaching will become more frequent and intense over the coming decades because of anthropogenic climate change, that warming and ocean acidification will destroy the reef-building capacity of corals in the decades to come, and that oceanic pH will fall by 0.3-0.5 units by the end of the century as the ocean continues to absorb anthropogenic CO2.9 Each of these factors will harm marine organisms.12 The future of coral populations is still undecided, but the balance can be tipped in favor of saving corals if people act proactively. The effects of coral population decline have only recently become apparent, but it is now clear what needs to be done to minimize the causes so that coral populations can begin to recover steadily. Major physical damage to coral reefs, usually mechanical, can require 100 to 150 years for recovery, which shows how important it is for the planet’s inhabitants to make positive changes now.14
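A drop of "only" 0.3-0.5 pH units is larger than it sounds, because pH is logarithmic: pH = -log10[H+], so lowering pH by Δ units multiplies the hydrogen-ion concentration [H+] by 10^Δ. The Python sketch below applies that definition to the projected decrease; it is plain arithmetic on the definition of pH, not a model of seawater chemistry, and the function names are hypothetical.

```python
def hydrogen_ion_factor(ph_drop: float) -> float:
    """Return how many times [H+] increases when pH falls by ph_drop units.

    Since pH = -log10([H+]), lowering pH by ph_drop multiplies [H+]
    by 10 ** ph_drop.
    """
    return 10 ** ph_drop


def percent_increase(ph_drop: float) -> float:
    """Express the same change as a percentage increase in [H+]."""
    return (hydrogen_ion_factor(ph_drop) - 1) * 100


if __name__ == "__main__":
    for drop in (0.3, 0.5):
        print(f"pH drop of {drop}: [H+] x {hydrogen_ion_factor(drop):.2f} "
              f"(+{percent_increase(drop):.0f}%)")
```

Run as written, it shows that a 0.3-unit drop roughly doubles [H+] and a 0.5-unit drop roughly triples it.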

References

1 Keller, N.B. and Oskina, N.S. (2009). About the correlation between the distribution of the Azooxanthellate Scleractinian corals and the abundance of the zooplankton in the surface waters. Pp. 811-822. Oceanology, 49; 6.

Miller, Richard L. and Cruise, James F. (1995). Effects of suspended sediments on coral growth. Pp. 177-180. Remote Sens. Environ., 53.

2 Moberg, Fredrik and Folke, Carl (1999). Ecological goods and services of coral reef ecosystems. Pp. 215-228. Ecological Economics, 29.

3 Al-Horani, F. (2005). Effects of changing seawater temperature on photosynthesis and calcification in the scleractinian coral Galaxea fascicularis, measured with O2, Ca2+ and pH microsensors. Pp. 347-354. Scientia Marina, 69; 3.

4 Titlyanov, E.A. and Titlyanova, T.V. (2008). Coral-algal competition on damaged reefs. Pp. 199-210. Russian Journal of Marine Biology, 34; 4.

5 Bakus, Gerald J. (1983). The selection and management of coral reef preserves. Pp. 305-312. Ocean Management, 8.

6 Eakin, C.M., Nim, C.J., Brainard, R.E., Aubrecht, C., Elvidge, C., Gledhill, D.K., Muller-Karger, F., Mumby, P.J., Skirving, W.J., Strong, A.E., Wang, M., Weeks, S., Wentz, F. and Ziskin, D. (2010). Monitoring coral reefs from space. Pp. 119-130. Oceanography, 23; 4.

7 Glynn, Peter W. (2011). In tandem reef coral and cryptic metazoan declines and extinctions. Pp. 767-771. Bulletin of Marine Science, 87; 4.

8 Côté, I., Gill, J., Gardner, T. and Watkinson, A. (2005). Measuring coral reef decline through meta-analyses. Philosophical Transactions of the Royal Society B: Biological Sciences, 360; 1454.

9 Scopélitis, J., Andréfouët, S., Phinn, S., Done, T. and Chabanet, P. (2011). Coral colonisation of a shallow reef flat in response to rising sea level: quantification from 35 years of remote sensing data at Heron Island, Australia. Pp. 951-956. Coral Reefs, 30.

10 Obura, D. and Mangubhai, S. (2011). Coral mortality associated with thermal fluctuations in the Phoenix Islands. Pp. 607-608. Coral Reefs, 30.

11 Costa Jr., O., Leão, Z., Nimmo, M. and Attrill, M. (2000). Nutrification impacts on coral reefs from northern Bahia, Brazil. Pp. 307-314. Hydrobiologia, 40.

12 Albright, Rebecca and Langdon, Chris (2011). Ocean acidification impacts multiple early life history processes of the Caribbean coral Porites astreoides. Pp. 2478-2479. Global Change Biology, 17.

13 Stella, J. S., Munday, P. L. and Jones, G. P. (2011). Effects of coral bleaching on the obligate coral-dwelling crab Trapezia cymadoce. Pp. 719-723. Coral Reefs, 30.

14 Precht, W. (1998). The art and science of reef restoration. Pp. 16-20. Geotimes, 43.

It’s In Your Fridge

The Synapse Weekly - combating smelly mold.

Welcome back, students! Sure, you may not be so happy about the onslaught of papers, exams and reading assignments that are sure to come over the next few weeks – but it’s good to be back in the Hub. Walking into your dorm room is familiar and almost pleasant until … Oh god, not the dreaded post-spring vacation microfridge.

The mini refrigerator is your friend during the semester. The college student is indebted to its existence: the fridge keeps takeout leftovers from spoiling and keeps your drinks cool. After finishing your midterms, you pack the essentials, unplug electronics and bolt. As you spend time with family and friends, the abandoned fridge sits and rebels, allowing a variety of mold spores to grow in its moist, warm interior.

Of course this is all dramatic drivel: your fridge does not plot to make your life miserable while you are on vacation. However, the mold is no joke: some mold spores are known to trigger allergic reactions, and others are able to produce deadly toxins.4, 5

While this may sound ominous, not all molds are deadly, and many molds benefit the environment. These microscopic fungi break down dead organic material, “recycling” its nutrients to foster new growth.2 However, this is a double-edged sword: because mold breaks down organic materials, mold growth can also cause significant damage.

All mold needs to thrive is a comfortable temperature, a nutrient source, moisture and spores.3 Mold can thrive in environments ranging from near-freezing to tropical, and it can feed on any organic, or carbon-containing, food source.3 Moisture, in the form of liquid or vapor, must be present for mold spores to grow. Controlling moisture levels is the easiest way to control mold growth.3

Spores allow mold to spread to new environments, and are typically located at the tip of the mold stalk for easy release into the environment.5 The stalks emerge from near-invisible filamentous structures known as “roots”, which grow through the mold’s nutrient source.5 The part of mold that people see is the spore-laden stalk.5 Stalks and spores have a variety of forms, each designed to spread the growth of that particular mold. Consequently, mold spores are present in nearly all environments.3, 4

But never fear! It is easy to clean out your fridge and maintain a mold-free environment. To clean your microfridge, use a 50/50 mixture of warm water and white vinegar.1 The vinegar works to kill the fungus and neutralize any dank odors.1 After the mold is removed, make sure the fridge is free of moisture. Controlling moisture is the easiest way to prevent a mold infestation because mold spores cannot grow without water.3 The more moisture in one’s home environment, the more likely mold infestations will occur.3 Keep moisture levels down, and that horrifying house guest will stay away.

References

1 eHow Home. (2011). How to Clean Mold in a Fridge. Retrieved from http://www.ehow.com/how_5066391_clean-mold-fridge.html

2 New York Department of Health. (2011). Information about Mold. Retrieved from http://www.health.ny.gov/publications/7287/

3 Fairey, Philip; Subrato Chandra and Neil Moyer, Florida Solar Energy Center. (2007). Mold Growth. Retrieved from http://www.fsec.ucf.edu/en/consumer/buildings/basics/moldgrowth.htm

4 Environmental Protection Agency. (2007). A Brief Guide to Mold, Moisture and Your Home. Retrieved from http://www.epa.gov/mold/moldbasics.html

5 Food Safety and Inspection Service. (2010). Molds on food: are they dangerous? Retrieved from http://www.fsis.usda.gov/factsheets/molds_on_food/#1

Seeing Stars

The Galactic Ring Survey aims to put new stars on the map.

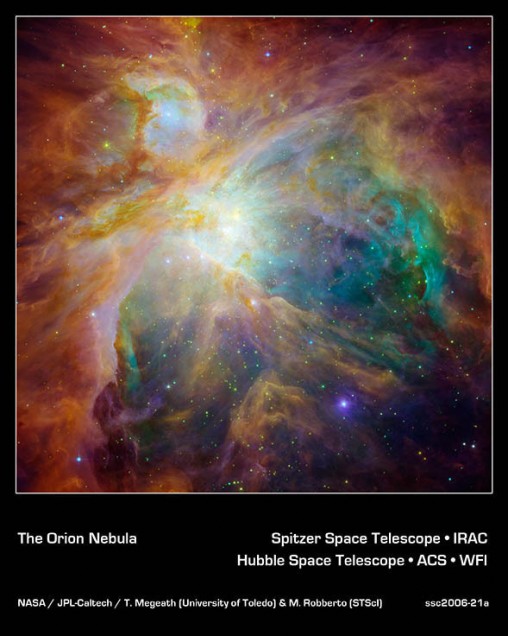

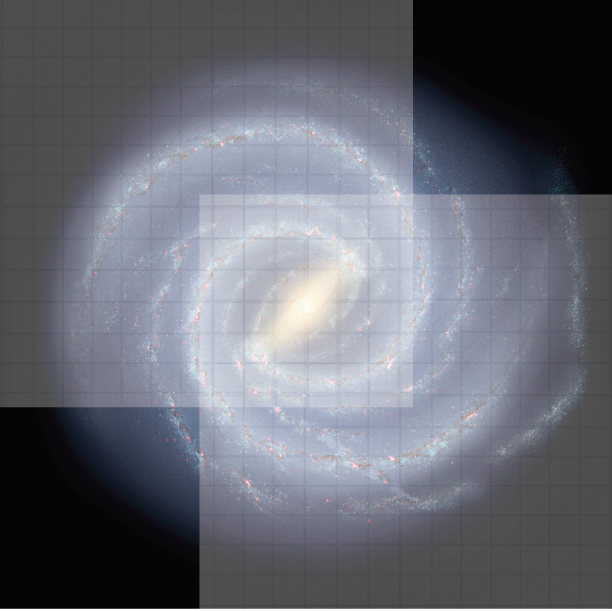

Scientists at Boston University are mapping carbon monoxide in the Milky Way. Illustration by Evan Caughey

A star is born! No, it is not the next American Idol, but one of the celestial bodies illuminating the night sky. Like you, researchers at Boston University have asked themselves how these twinkling objects come to be. To find out, the Astronomy Department initiated the Galactic Ring Survey (GRS) in 1998 in New Salem, Massachusetts.1 Together with the Five College Radio Astronomy Observatory (a consortium of the University of Massachusetts Amherst, Amherst College, Hampshire College, Mount Holyoke College and Smith College),2 BU began an eight-year project to scan and map concentrations of carbon monoxide gas, in the form of ¹³CO, in the Milky Way.1 The main purpose of this research was to determine where future stars may form. The team used ¹³CO because it is less common in the universe than ¹²CO or hydrogen, so its signal is less likely to saturate, which allows more precise measurements with a smaller margin of error.3

The Galactic Ring

New stars are most likely to form in the galactic ring, a large region of the Milky Way that lies inside the solar circle.3 The ring contains 70 percent of the molecular gas crucial to star formation, including ¹³CO, ¹²CO, oxygen and hydrogen.4 This region is important to the evolution and structure of our Galaxy. However, because the ring spans such a large expanse of the Milky Way, it is hard to obtain a complete image of it, which makes it challenging to estimate distances to, from and between star-forming regions.3 Most of the ring remains to be researched; the GRS covered only Galactic longitudes between 18° and 55.7°, about 10 percent of the entire galaxy.1
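The "about 10 percent" figure can be checked with simple arithmetic on the stated longitude limits: the survey spans 55.7° - 18° = 37.7° of the 360° of Galactic longitude. The Python sketch below performs that calculation. It counts longitude only and ignores Galactic latitude (the survey covers only a thin strip along the Galactic plane), so it is a rough reading of the figure rather than a measure of the Galaxy's area or volume.

```python
# Longitude limits of the GRS coverage as stated in the text (degrees).
L_MIN_DEG = 18.0
L_MAX_DEG = 55.7


def longitude_fraction(l_min: float, l_max: float) -> float:
    """Fraction of the full 360° of Galactic longitude spanned by [l_min, l_max]."""
    return (l_max - l_min) / 360.0


if __name__ == "__main__":
    frac = longitude_fraction(L_MIN_DEG, L_MAX_DEG)
    print(f"Span: {L_MAX_DEG - L_MIN_DEG:.1f}°, about {frac:.1%} of all longitudes")
```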

New Stars and the Milky Way

Stars are the basic building blocks of any galaxy.5 The Milky Way alone has more than a hundred billion stars and the capacity to produce another billion.6 Stars contain and distribute many of the essential elements found in the universe, such as carbon, nitrogen and oxygen.5 In addition, stars act as fossil records for astronomers: their age, distribution and composition reveal the history, dynamics and evolution of a galaxy.5

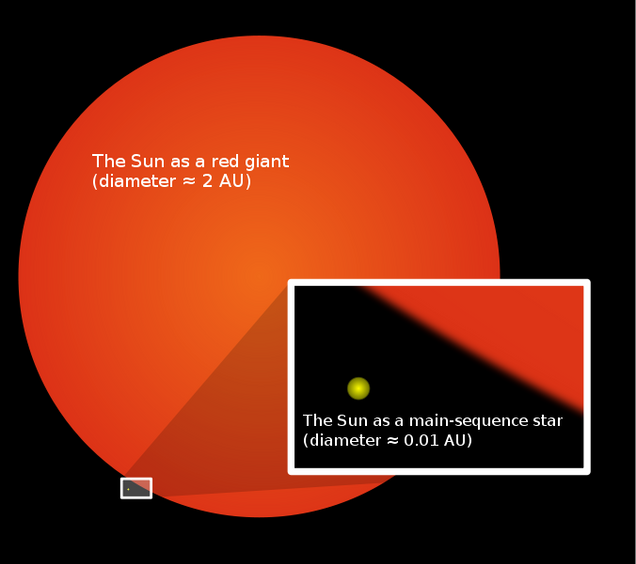

Stars form within dust clouds at different rates and at different times over billions of years. The earliest form of a star, a mere infant blob of heated gases, is known as a Protostar. A Protostar becomes a true star once it sustains nuclear fusion of hydrogen into helium in its core; the heat and pressure released by fusion keep the star from collapsing under its own gravity.5 Stars then enter their mature state, a period that can last from just a few million years to more than tens of billions of years. The more massive a star is, the shorter its life span. For example, the Sun, an average-sized star, is expected to spend roughly ten billion years in its mature state.5 Small stars, which can have as little as one-tenth of the Sun’s mass, are known as Red Dwarfs and are the most abundant type of star in the universe.5 At the other extreme, the most massive stars can have 100 or more times the mass of the Sun and are known as Hypergiants.5 However, these stars are extremely rare in our current universe because their life spans are so short and their deaths so violent.5
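The statement that more massive stars live shorter lives can be made roughly quantitative. A common textbook approximation, assumed here rather than taken from the article's sources, is that a mature (main-sequence) star's luminosity scales as about M^3.5; since its fuel supply scales with M, its lifetime scales as M / M^3.5 = M^-2.5. Normalizing to a solar lifetime of about ten billion years gives the Python sketch below. Both the exponent and the normalization are rough assumptions, and the relation is only a crude guide at the extremes of the mass range.

```python
# Assumed approximate mature-state (main-sequence) lifetime of the Sun.
SUN_LIFETIME_GYR = 10.0


def main_sequence_lifetime_gyr(mass_solar: float, exponent: float = 2.5) -> float:
    """Rough lifetime in billions of years for a star of mass_solar Sun masses.

    Assumes luminosity ~ M**3.5, so lifetime ~ fuel / burn rate
    ~ M / M**3.5 = M**-2.5, normalized to the Sun's ~10 billion years.
    """
    if mass_solar <= 0:
        raise ValueError("mass must be positive")
    return SUN_LIFETIME_GYR * mass_solar ** (-exponent)


if __name__ == "__main__":
    for mass in (0.5, 1.0, 10.0):
        years = main_sequence_lifetime_gyr(mass)
        print(f"{mass:>5} solar masses: ~{years:.3g} billion years")
```

Under these assumptions, a star of ten solar masses lasts only about 30 million years, while a star of half a solar mass lasts tens of billions of years.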

Near the end of its life, a star will often grow into a massive red giant. Credit | Mysid via Wikimedia Commons

As stars near the end of their life span, the hydrogen in the core runs out and fusion there stops; the core contracts and heats up while the outer layers expand.5 At this stage the star becomes a Red Giant. The star still produces heat and light as hydrogen continues to fuse in a shell surrounding the core.5 Depending on the star’s size, explosive nuclear reactions may occur within it.5 These reactions ultimately determine what form the dying star will take. Most stars will become White Dwarfs; others, usually larger ones, will produce Novae or Supernovae. A nova occurs when the outer layers of the star explode, while a supernova occurs when its core explodes.5 The remains of a dead star may form a neutron star or a black hole. Neutron stars form when the electrons and protons in the collapsing star combine into neutrons. Black holes form when the collapsing core has more than about three times the mass of the Sun; its gravitational pull is then so strong that nothing, not even light, can escape once it crosses the boundary. At other times the remains of stars mix with dust already present in the area and may contribute to the formation of planets, comets, asteroids or new stars.5

Mapping the Stars

Using a 14-meter telescope with a beam-width of 117 cm, Boston University’s team of astronomers collected vast numbers of images that enabled a wide range of research.7 The project’s primary advances were the ability to detect large samples of star-forming clouds, to determine the distances to those clouds, and, from those distances, to establish the clouds’ sizes, masses, luminosities and spatial distributions.1
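Distances matter because a survey measures only how large and how bright a cloud appears on the sky. Two standard relations turn those observations into physical quantities: the small-angle approximation (size = distance × angle in radians) and the inverse-square law (luminosity = 4π × distance² × observed flux). The Python sketch below applies both to a hypothetical cloud; the numbers are invented for illustration and are not GRS measurements, and estimating mass would need further assumptions (such as the abundance of ¹³CO relative to hydrogen) that are not modeled here.

```python
import math

# Number of arcseconds in one radian (about 206,265).
ARCSEC_PER_RADIAN = 180.0 / math.pi * 3600.0
# One parsec expressed in meters.
PARSEC_M = 3.0857e16


def physical_size_pc(angular_size_arcsec: float, distance_pc: float) -> float:
    """Small-angle approximation: physical size = distance x angle (radians)."""
    return distance_pc * (angular_size_arcsec / ARCSEC_PER_RADIAN)


def luminosity_w(flux_w_per_m2: float, distance_pc: float) -> float:
    """Inverse-square law: luminosity = 4 * pi * distance**2 * observed flux."""
    distance_m = distance_pc * PARSEC_M
    return 4.0 * math.pi * distance_m ** 2 * flux_w_per_m2


if __name__ == "__main__":
    # Hypothetical cloud: 5 arcminutes across, at 4,000 parsecs.
    print(f"Diameter: ~{physical_size_pc(5 * 60, 4000):.1f} pc")
    # The same observed flux implies 4x the luminosity at twice the distance.
    ratio = luminosity_w(1e-15, 8000) / luminosity_w(1e-15, 4000)
    print(f"Luminosity ratio at double distance: {ratio:.1f}")
```

The second calculation shows why a wrong distance is costly: an error of a factor of two in distance becomes a factor of four in the inferred luminosity.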

“The images we collected are beautiful, and show that the gas clouds in the Milky Way are not the round blobs we had imagined, but rather more like cobwebs or filaments,” commented Professor Jackson, one of the project’s designers. According to Professor Jackson, the survey discovered over 800 molecular clouds, which lie between stars and have the potential to produce new stars.7 The temperatures of these clouds were measured, and the clouds turned out to be highly turbulent structures. Turbulence within a cloud creates structures of gas and dust that grow in mass and eventually collapse under their own gravity.5 As such a structure collapses, the material at its core heats up and becomes the basis of a Protostar.5 Each of the observed clouds seemed to have similar amounts of turbulence, and thus a similar potential to form new stars.

Further Investigations

Although the project’s data collection ended in 2005, the GRS continues to influence new research.7 One of the largest areas still being researched is the structure of infrared dark clouds in the Milky Way, which GRS data have been used to study. They are dense, cold clouds that will form large gas clumps.7 These clouds are cold because they are in the early stages of star formation.7 Eventually, these clouds give rise to massive stars.7

According to Professor Jackson, over one hundred research papers have used the data collected by the GRS, during and after its conclusion. 7

“I think the GRS data will be used for years to come,” stated Professor Jackson.

This publication makes use of molecular line data from the Boston University-FCRAO Galactic Ring Survey (GRS). The GRS is a joint project of Boston University and Five College Radio Astronomy Observatory, funded by the National Science Foundation under grants AST-9800334, AST-0098562, & AST-0100793.

Works Cited

1 Jackson, J. M. (2006). The Boston University–five college radio astronomy observatory. Manuscript submitted for publication, Astronomy Department, Boston University, Boston, MA.

2 FCRAO general information. (n.d.). U Mass Astronomy: Five College Radio Astronomy Observatory. Retrieved March 4, 2012, from http://www.astro.umass.edu/~fcrao/telescope/.

3 Lavoie, R., Stojimirovic, I. and Johnson, A. (2007). Galactic Ring Survey. The GRS. Retrieved March 4, 2012, from http://www.bu.edu/galacticring/new_index.htm.

4 Where is M13? (2006). The Galactic Coordinate System. Retrieved March 4, 2012, from http://www.thinkastronomy.com/M13/Manual/common/galactic_coords.html.

5 Stars - NASA Science. (2011). NASA Science. Retrieved March 4, 2012, from http://science.nasa.gov/astrophysics/focus-areas/how-do-stars-form-and-evolve/.

6 Galaxies. (2011). NASA Science. Retrieved March 4, 2012, from http://science.nasa.gov/astrophysics/focus-areas/what-are-galaxies/.

7 Jackson, J. M. (2011). Interview by P.C. Garcia [Web-based recording]. GRS research project. Boston, MA.

Vaccines: What You May Not Know

The Synapse Weekly - vaccinate yourself!

I recently wrote about FDR, poliovirus, and the polio vaccination. It was a pretty interesting article, if I do say so myself. (If you don't believe me, check it out here.) Unfortunately, the blog was not long enough to discuss the history and science of vaccinations - so this week, I present a list of FUN VACCINATION FACTS!

1. Vaccines improve immunity to disease.

Let's start with the basics – a definition will do. In brief, a vaccine is a suspension of weakened, killed or fragmented microorganisms or toxins that is introduced into an organism to prevent disease.1

2. Immunization technology originated in China.

Smallpox is a deadly disease named for the characteristic pockmarks that appear on the skin of the infected. Smallpox is easily spread and often infects children. Because of this, the desire to prevent infection was strong.

Innovators in ancient China found an easy way to increase immunity: just snort some ground-up scab from a person infected with smallpox.2 For those not into huffing tiny bits of scab, immunization could also be administered by grinding material from smallpox sores into the skin.2 The use of diseased organic material to increase immunity is thought to have originated around 1000 CE.2

3. Jenner checks out milkmaids, creates smallpox vaccine.

By the late 1700s, it was well known that immunity to smallpox was related to exposure to material from an infected individual. This practice, called variolation, was popular among wealthy people in the early 18th century.2

The country doctor Edward Jenner applied this knowledge after learning that milkmaids who had suffered hand sores were often unaffected by smallpox.2,3 These sores came from cowpox, a smallpox-related disease that passes from cow to cow and (sometimes) from cow to human.3 After finding a milkmaid with cowpox, Jenner ran a needle through one of her sores.2 He used the same needle to inoculate an eight-year-old boy named James Phipps.3 When Phipps was later exposed to smallpox, he did not become infected.3 Jenner published his findings, calling his new preventive technique a “vaccine”.3

4. Vaccines have almost always been controversial.

Edward Jenner’s vaccine was met with some derision; after a decade of publicly promoting it, Jenner withdrew from the public eye.3 Today, the controversy continues, largely over side effects, and it is most visible in the various political, social and religious groups that question vaccine safety and effectiveness.

5. Rabid dogs used to roam the streets of Paris in force.

During the summer of 1880, a Parisian veterinarian grew concerned about the number of rabid dogs in the city, and sent samples of infected dog brain to Louis Pasteur’s lab.3 Five years after receiving these samples, Pasteur developed a vaccine for rabies.3

6. Vaccinations are not related to autism.

In 1998, Dr. Andrew Wakefield published a paper in The Lancet that linked autism with vaccinations.4 After its publication, several doctors and scientists questioned the research and urged a more detailed investigation. In 2004, a committee of the Institute of Medicine reviewed the available evidence and found no link between vaccines and autism.5 Further investigation showed that Wakefield’s research had been funded by a lawyer suing a vaccine company.

In 2010, Wakefield’s article was formally retracted by The Lancet.6

7. Early vaccinations were often tested on family members.

Famous researcher Jonas Salk tested his polio vaccine on his family.2 On the next level of insanity: Dr. Hilary Koprowski tested his polio vaccine on himself.2

8. There is a temple dedicated to the power of vaccinations.

This fact is half true – Edward Jenner (of smallpox vaccination fame) built a one-room shack in his backyard to vaccinate people for free. He called this building the “Temple of Vaccinia”.3

References

1 Encyclopædia Britannica. (2012). vaccine. Retrieved from http://www.britannica.com/EBchecked/topic/621274/vaccine

2 College of Physicians of Philadelphia, The. (2012). The history of vaccines: Timeline. Retrieved from http://www.historyofvaccines.org/content/timelines/all

3 Riedel, Stefan. (2005). Edward Jenner and the history of the smallpox vaccine. Retrieved from http://www.ncbi.nlm.nih.gov/pmc/articles/PMC1200696/

4 Hensley, Scott. (2010). NPR. Lancet renounces study linking autism and vaccines. Retrieved from http://www.npr.org/blogs/health/2010/02/lancet_wakefield_autism_mmr_au.html

5 Institute of Medicine of the National Academies. (2004). Immunization safety review: vaccines and autism. Retrieved from http://www.iom.edu/Reports/2004/Immunization-Safety-Review-Vaccines-and-Autism.aspx

6 Lancet, The. (2010). Retraction—Ileal-lymphoid-nodular hyperplasia, non-specific colitis, and pervasive developmental disorder in children. Retrieved from http://download.thelancet.com/flatcontentassets/pdfs/S0140673610601754.pdf

Glow In The Dark Cats

The Synapse Weekly - AIDS research and jellyfish?

Everyone knows the Internet is for cats. Nothing else, just cats. Cats are fun, cats are cute, and cats have the ability to improve the quality of any day. I mean, just look at this guy!

Your day is better already.

But wait: aside from chemistry puns, can cats make any important contributions to science? Yes, they do – and in a big way. The Mayo Clinic is now using felines as test subjects to research AIDS, an immunologic disease. And while this sounds pretty cool, it gets even better: these cats glow in the dark.

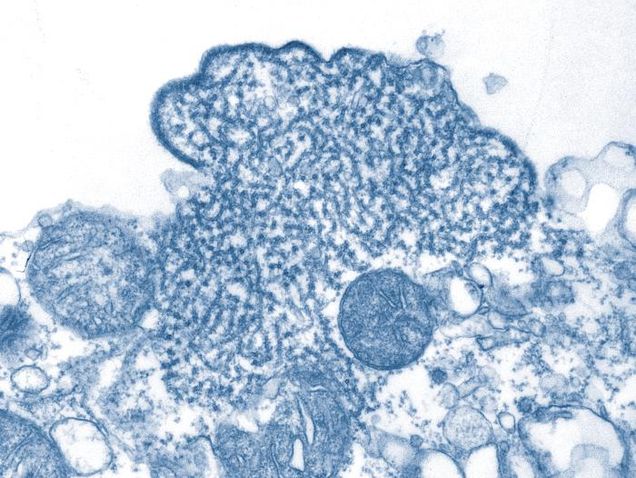

Bioluminescence, the ability to emit light, occurs naturally in organisms such as jellyfish, insects and fungi (please note, this group does not include cats). In the early 1960s, Osamu Shimomura, who later became a professor emeritus at Boston University, isolated a protein responsible for the green glow of the jellyfish species Aequorea victoria; in 2008 he shared the Nobel Prize in Chemistry with Martin Chalfie and Roger Y. Tsien for the discovery and development of this protein.1 It is called Green Fluorescent Protein (GFP). A non-glowing organism can be genetically modified to glow under blue or ultraviolet light if the DNA sequence encoding GFP is incorporated into its genome.

When scientists want to incorporate a specific DNA sequence into an organism for research, it is difficult to determine whether the sequence has been properly integrated. To check that the sequence is functional within the organism, scientists insert the GFP gene into the organism’s genome together with the DNA sequence being studied. If the organism glows, the sequence of interest has most likely integrated properly into the genome. The fluorescence thus acts as a tag for successful integration, allowing easy identification of the modified organism.

This is a simplified representation of molecular biochemistry with enzymes. Credit | Dhorstool via Wikimedia Commons

At first, these glowing tags were used only in single-celled organisms. Now, scientists use the protein in multicellular organisms … like cats. In this case, the cats “tagged” with glowing GFP also produce a protein that protects them against Feline Immunodeficiency Virus (FIV).2,3 FIV is the virus that causes feline AIDS and is comparable to Human Immunodeficiency Virus (HIV), the virus behind the human AIDS epidemic.2 As a result, this FIV research may also apply to humans, helping both species in their struggle against AIDS.

Several other experiments must be completed before the benefits of this research are known, but the results so far are promising. Both the GFP gene and the protective gene are passed to offspring through natural reproduction,2 which promises at least two things:

1) FIV and AIDS research will continue to test the effectiveness of the protective protein. If the protein proves effective, the heritable gene could be introduced into the wider population of domestic cats, and the approach might later be adapted for humans.

2) As research continues, there will be more glowing cats! Everyone wins!

References

1 Shimomura, Osamu. Autobiography. Retrieved from http://www.nobelprize.org/nobel_prizes/chemistry/laureates/2008/shimomura.html

2 Nellis, Robert. (2011, September 11). Mayo Clinic Teams with Glowing Cats Against AIDS, Other Diseases: New technique gives cats protection genes. Retrieved from http://www.mayoclinic.org/news2011-rst/6434.html

3 Wongsrikeao, Pimprapar, Dyana Saenz, Tommy Rinkoski, Takeshige Otoi & Eric Poeschla. (2011, September 11). Antiviral transcription factor transgenesis in the domestic cat. Nature Methods. Retrieved from http://www.nature.com/nmeth/journal/vaop/ncurrent/full/nmeth.1703.html

Flying or Falling?

The ecological significance of flying foxes and why we should care.

This species of flying fox is a native of various East Asian islands. Credit | KCZooFan via Wikimedia Commons

Many people have never heard of flying fox bats, but these winged mammals are extremely important to both their native habitats and surrounding human populations. However, their numbers have been declining over the past several decades. This trend is alarming because flying foxes fulfill several key ecological roles in island ecosystems, which are particularly interconnected. The decline or removal of such an island species has the potential for a drastic impact on the rest of an island’s native plant and animal species. Factors that contribute to the decline and endangerment of these bats are overhunting and deforestation. The conservation of flying foxes faces several different challenges, including the tremendous mobility of the bats across international borders, the lack of consistency in current conservation laws, and the lack of public awareness about the viruses they carry.

Meet the Flying Fox

The majority of flying foxes are classified under the genus Pteropus, which contains 65 species. Named for their fox-like faces, these bats generally have wingspans of one to two meters, but they usually weigh less than one kilogram.1,2 Their range extends from the Polynesian Islands in the South Pacific Ocean to the eastern coast of Africa, with the largest concentration of flying fox species found east of the Indian Ocean.3,4 Flying foxes, unlike many other types of bats, congregate in tropical forests rather than caves.5,6 They are strictly frugivorous and nectarivorous mammals, meaning that they derive all of their key nutrients from fruits and nectar.3,7,8 Although they feed at night, they are sometimes also active in flight during the day.1,6 Their roles as pollinators and seed dispersers are critical to the regeneration of forests, especially in island ecosystems, where the number of seed disperser species is often small.3

Business Boosters

Not only do these bats benefit their ecosystem, they help humans as well. Flying foxes are seed dispersers for more than 300 different paleotropical plant species. In turn, these plant species provide many products that are valuable in both local and global economies, including timber, medicine, beverages, and fresh fruits.1,3 Nectarivorous bats—which include flying foxes—are the most effective pollinators of the all-important Southeast Asian durian plant, which has a global trade value of over $1.5 billion per year.5

Bat Numbers on the Decline

Though these bats are crucial for a healthy ecosystem, their outlook is grave: 18 out of the 30 flying fox species native to the 11 countries of Southeast Asia were “Red Listed” by the Southeast Asian Mammal Databank, indicating that they are of some level of conservation concern.5 One of the main causes of these declines is extensive hunting by humans.1,8 Most of the meat from this hunting is eaten by people.6 In certain cultures, the meat is also believed to have medicinal value, such as curing respiratory illnesses like asthma or aiding women recovering from childbirth.8,9 A third motivation for hunting flying foxes is that orchard farmers perceive them as pests; some farmers go so far as to hire bounty hunters to rid the area of flying foxes.1,8 As a result, enormous numbers of flying foxes are killed. For instance, in Malaysia, the hunting licenses issued between 2002 and 2006 permitted the killing of up to 87,000 individuals of Pteropus vampyrus. Researchers predict that if this pattern continues unchecked, the species will eventually be driven to extinction.8

A second threat to flying fox populations is the rampant deforestation occurring in their habitats across their range. In the western Indian Ocean, the habitat of the endangered Pteropus livingstonii in the Union of the Comoros is disappearing at a rate of nearly 4% per year.10 Since these bats depend on tree cover for roosts and visit fruiting and flowering trees for food, deforestation is recognized as one of the significant factors in their current and future population declines.5,6,7,9

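A habitat loss of nearly 4% per year adds up faster than it may sound. The short Python sketch below is only an illustration: it assumes that exactly 4% of whatever habitat is left disappears each year (compounding), which the source does not specify, and the function names are hypothetical.

```python
import math

# Assumption: the text's "nearly 4% per year" is treated as exactly 4%, lost
# from whatever habitat is left each year (compounding). The source does not
# say how the rate is applied; the function names are hypothetical.
ANNUAL_LOSS = 0.04


def fraction_remaining(years, annual_loss=ANNUAL_LOSS):
    """Share of the starting habitat left after `years` of constant-rate loss."""
    return (1 - annual_loss) ** years


def years_to_halve(annual_loss=ANNUAL_LOSS):
    """Years until half of the starting habitat is gone at a constant loss rate."""
    return math.log(0.5) / math.log(1 - annual_loss)


for years in (5, 10, 25):
    print(f"after {years:2d} years: {fraction_remaining(years):.0%} of the habitat remains")
print(f"half of the habitat is gone after about {years_to_halve():.0f} years")
# after  5 years: 82% of the habitat remains
# after 10 years: 66% of the habitat remains
# after 25 years: 36% of the habitat remains
# half of the habitat is gone after about 17 years
```

Under this assumption, about a third of the habitat would remain after 25 years, and half would be gone in roughly 17. If instead 4% of the original area were cleared every year (a linear rate), the habitat would be gone entirely after 25 years, so the compounding model is the gentler of the two scenarios.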
Virus Vectors: Watch out!

Flying fox conservation has become more complex in recent years with the discovery that these fruit bats can carry and transmit deadly viruses without showing symptoms themselves.7,11 Outbreaks of two diseases in particular, caused by the Hendra and Nipah viruses, have claimed the lives of both livestock and humans in Australia, Malaysia, and other countries.9,12 Further outbreaks may be expected if contact between humans and bats through food or hunting continues or increases.9

Conservation Efforts, Successes and Challenges

Flying foxes transmit viruses such as the Nipah virus. Credit | CDC/ C. S. Goldsmith, P. E. Rollin via Wikimedia Commons

Some progress has been made toward understanding and preserving flying foxes and their habitats. In areas of Australia, the hunting of flying foxes has come under strict government regulation.1 In one area of Borneo, it is now illegal to hunt flying foxes.8 The Southeast Asian Bat Conservation Research Unit, established in 2007, has identified the conservation of flying foxes as an immediate, top priority.5 Additionally, there have been advances in tracking the movements of individual bats by satellite, although this is still a new area of research. Because flying foxes are mobile enough to cross international borders, researchers emphasize the need for international cooperation in conservation.7 The conservation goals for flying foxes must therefore be twofold: halting the transmission of these infectious diseases while also protecting the biodiversity flying foxes represent in the paleotropics.

Clearly, flying foxes are crucial members of their tropical environment, but they hold great global significance as well. The conservation of flying foxes has implications for fields as diverse as international relations, ecology, and economics. As citizens of the global community, we should appreciate the benefits that we receive from flying foxes and other endangered species, especially those living on the other side of the world. In preserving these species, we are protecting the diverse habitats of the earth now…and for the future.

References

1 Fujita, M. S. and M. D. Tuttle. 1991. Flying foxes (Chiroptera: Pteropodidae): Threatened animals of key ecological and economic importance. Conservation Biology 5:455-463.

2 Smith, C. S., J. H. Epstein, A. C. Breed, R. K. Plowright, K. J. Olival, C. de Jong, P. Daszak, H. E. Field. 2011. Satellite telemetry and long-range bat movements. PLoS ONE 6:e14696 doi:10.1371/journal.pone.0014696.

3 Cox, P. A., T. Elmqvist, E. D. Pierson, W. E. Rainey. 1991. Flying foxes as strong interactors in South Pacific island ecosystems: a conservation hypothesis. Conservation Biology 5:448-454.

4 Brown, V. A., A. Brooke, J. A. Fordyce, G. F. McCracken. 2011. Genetic analysis of populations of the threatened bat Pteropus mariannus. Conservation Genetics 12:933–941.

5 Kingston, T. 2010. Research priorities for bat conservation in Southeast Asia: a consensus approach. Biodiversity and Conservation 19:471–484.

6 Brooke, A. P., C. Solek, and A. Tualaulelei. 2000. Roosting behavior of colonial and solitary flying foxes in American Samoa (Chiroptera: Pteropodidae). Biotropica 32:338-350.

7 Breed, A. C., H. E. Field, C. S. Smith, J. Edmonston, and J. Meers. 2010. Bats without borders: long-distance movements and implications for disease risk management. EcoHealth 7:204–212.

8 Epstein, J. H., K. J. Olival, J. R. C. Pulliam, C. Smith, J. Westrum, T. Hughes, A. P. Dobson, A. Zubaid, S. A. Rahman, M. M. Basir, H. E. Field, and P. Daszak. 2009. Pteropus vampyrus, a hunted migratory species with a multinational home-range and a need for regional management. Journal of Applied Ecology 46:991–1002.

9 Harrison, M. E., S. M. Cheyne, F. Darma, D. A. Ribowo, S. H. Limin, and M. J. Struebig. 2011. Hunting of flying foxes and perception of disease risk in Indonesian Borneo. Biological Conservation 144:2441–2449.

10 Sewall, B. J., A. L. Freestone, M. F. E. Moutui, N. Toilibou, I. Saĭd, S. M. Toumani, D. Attoumane, and C. M. Iboura. 2011. Reorienting systematic conservation assessment for effective conservation planning. Conservation Biology 25: 688–696.

11 Baker, M. L., M. Tachedjian, and L. F. Wang. 2010. Immunoglobulin heavy chain diversity in Pteropid bats: evidence for a diverse and highly specific antigen binding repertoire. Immunogenetics 62:173-184.

12 Field, H. E., A. C. Breed, J. Shield, R. M. Hedlefs, K. Pittard, B. Pott, and P. M. Summers. 2007. Epidemiological perspectives on Hendra virus infection in horses and flying foxes. Australian Veterinary Journal 85:268-270.

Polio And President’s Day

The Synapse Weekly - celebrating eradication.

Perhaps the best part about spring semester is the long weekends scattered throughout the first few weeks of school. My personal favorite is President’s Day Weekend. The reasoning behind this is quite simple: presidents are awesome. Sure, this day in February is set aside for the more famous Abraham and George, but all presidents are pretty cool. This is because presidents are always controversial, and even the boring ones have some sort of distinguishing characteristic (read: Martin Van Buren’s crazy facial hair).

One distinguished and controversial president suffered a severe case of polio at the age of thirty-nine, and went on to win the presidential office at age fifty.3 This president was Franklin Delano Roosevelt. His policies are famous, even today, and he brought the nation comfort during the Second World War. However, let’s focus on what made him even more awesome: his drive to overcome a devastating disease.

Poliovirus is an infectious virus that causes the disease known as polio, or poliomyelitis.3 Viruses differ from bacteria in that they are not truly independent, living organisms. A virus consists of a protein shell, or capsid, that encloses its genetic material, which may be either RNA or DNA (in poliovirus, it is RNA).4 The virus delivers its genetic material into a host cell, where it essentially hijacks the cell into producing more viruses rather than performing its normal functions.3

Poliovirus first infects the mouth and digestive tract.3 Because of this, the virus is often found in the saliva, nasal secretions or feces of an infected person.4 In some infections, the virus spreads throughout the body of its host over time, reaching motor neurons in the central nervous system.3 As the poliovirus uses motor neurons to replicate itself, the motor neurons die.3 As more motor neurons die, the infected person shows signs and symptoms of polio.3

Polio presents itself in a variety of ways, depending on the degree of infection. Not all people infected with poliovirus show signs of the disease, and up to 95% of infected people are asymptomatic while remaining able to spread the disease.3 Of symptomatic patients, most experience flu-like symptoms followed by a full recovery.3 However, not all are so lucky. Approximately 1% of people infected with polio experience some form of paralysis.3
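To put these percentages in perspective, the Python sketch below turns them into rough counts for 1,000 infections. It treats the text's "up to 95%" and "approximately 1%" as exactly 95% and 1%, an assumption made only for this illustration, and the function name is hypothetical.

```python
# Assumption: the text's "up to 95%" asymptomatic and "approximately 1%"
# paralytic are treated as exactly 95% and 1%. Real rates vary; the function
# name is hypothetical.
ASYMPTOMATIC_SHARE = 0.95
PARALYTIC_SHARE = 0.01


def expected_outcomes(infections):
    """Rough expected counts of each outcome among `infections` poliovirus infections."""
    asymptomatic = round(infections * ASYMPTOMATIC_SHARE)
    paralytic = round(infections * PARALYTIC_SHARE)
    # Everyone else falls ill without paralysis (mostly flu-like symptoms).
    mild = infections - asymptomatic - paralytic
    return {"asymptomatic": asymptomatic, "symptomatic, no paralysis": mild, "paralytic": paralytic}


print(expected_outcomes(1000))
# {'asymptomatic': 950, 'symptomatic, no paralysis': 40, 'paralytic': 10}
```

With these rounded figures, only about 50 of every 1,000 infected people would feel sick at all and about 10 would be paralyzed, while the 950 without symptoms could still spread the virus.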

Of those paralysis-afflicted individuals, Franklin Roosevelt may be the most famous. Infected at the age of 39, Roosevelt fought to survive the disease.2 The road to recovery was not easy, and his age made the illness more dangerous: at the time, the polio mortality rate for adults in the United States was six times higher than the child mortality rate.3 While Roosevelt’s survival is impressive, the poliovirus left him partially paralyzed. Not to be discouraged, Roosevelt continued combating the aftermath of his illness through intensive rehabilitation.2 Three years after his infection, FDR was able to walk with the aid of crutches.2

FDR was not the only American who suffered from polio. Throughout the first half of the twentieth century, the United States suffered repeated polio epidemics.4 During this period, over 600,000 people suffered paralytic polio.5 For several years, nobody knew how the disease was transmitted or how to prevent infection, which only added to the hysteria surrounding the disease.4 Because of this, medical researchers dedicated a great deal of time to studying the virus, with support and funding from three philanthropic organizations.

These organizations are now known as the Rockefeller Institute, Rotary International and the March of Dimes.4 FDR himself founded the March of Dimes in 1938, inspired by his own experience with the disease. Money from Roosevelt’s organization funded clinical trials for two different vaccines and provided free vaccines for thousands of children across the United States.4 Aggressive funding and vaccination campaigns led to the elimination of polio in the US, and other nations soon followed.4

Most recently, India completed its first year without any reported cases of polio.6 In a few weeks, the nation will confirm this with a variety of diagnostic tests.6 While India holds its breath for confirmation of this exciting news, it is also preparing to vaccinate more children to prevent a resurgence of the virus.6 India is one of four nations where polio remains endemic, along with Pakistan, Afghanistan and Nigeria.6 In an endemic country, transmission of the virus has never been interrupted, so the disease persists within the population without needing to be reintroduced from outside.6

The eradication of polio in India would be a major step toward eliminating the disease, but there is still much more work to be done. There remain three other endemic nations, and several African nations have been re-infected within the last year.6 Still, there is hope – and a lot of it is due to the perseverance of one of the United States’ presidents.

References

1 The Miller Center. (2011). American President: Martin Van Buren. Retrieved from http://millercenter.org/president/vanburen

2 The White House. (2011). Franklin D. Roosevelt. Retrieved from http://www.whitehouse.gov/about/presidents/franklindroosevelt

3 Centers for Disease Control and Prevention. (2011). CDC – Pinkbook: Poliomyelitis Chapter. Retrieved from http://www.cdc.gov/vaccines/pubs/pinkbook/polio.html#poliovirus

4 The Smithsonian Institution. (2011). NMAH | Polio. Retrieved from http://americanhistory.si.edu/polio/index.htm

5 Post-polio Health International. (2011). PHI: Incidence Rates of Poliomyelitis in US. Retrieved from http://www.post-polio.org/ir-usa.html

6 Roberts, Leslie. (2012). India Marks First Year without Polio, But Global Eradication Remains Uncertain. Retrieved from http://news.sciencemag.org/scienceinsider/2012/01/india-marks-1-year-without-polio.html?ref=hp

Media Cited

a Archive, Library of Congress. (2011). [Photograph]. Van Buren. Retrieved from http://usgovinfo.about.com/od/thepresidentandcabinet/tp/One-Term-Presidents.htm

b FDR Suite Restoration Project @ Adams House, Harvard University. Franklin D. Roosevelt smiles. [Photograph]. Retrieved from http://fdrsuite.org/IMAGES/FRANK%20AT%20HARVARD/fdr-smile.jpg

c Link Studio. (2011) [Illustration]. Poliovirus. Retrieved from http://americanhistory.si.edu/polio/virusvaccine/how.htm

Needing Fixing

Promoting resilience in pediatric autoimmune diseases.

Half of all Americans will deal with a chronic illness in their lifetime.1 This overwhelming statistic is surprisingly little known; chronic illnesses are rarely discussed and poorly understood by those who do not live with one. Twenty percent of the American population suffers from an autoimmune chronic illness, such as Crohn’s disease, arthritis, asthma, or lupus.

Autoimmune diseases generally begin in early adolescence and develop a cyclic pattern of active disease, or ‘flares’, and remission. In these conditions, the immune system is activated unnecessarily by an unknown cause and mistakes an individual’s own organ or organ system for a pathogen, attacking and destroying normal tissue as if it were a disease-causing bacterium or virus.

Since many of these disorders begin in youth, both physiological and psychological treatments are critical to ensuring overall health. Most autoimmune diseases are invisible from the outside; it is nearly impossible to tell whether a person is ill from their physical appearance alone. Therefore, people with autoimmune illnesses often fight to legitimize their diseases, whether to a doctor, a professor, or a peer who cannot ‘see’ the problem. Although autoimmune diseases are typically not terminal, the severity and uncertainty of their prognosis often go unrecognized, because the diseases have few outward signs and public awareness of such illnesses is very limited.

Children are a fascinating subset of this population: a patient with a pediatric autoimmune disease is at the center of their care, yet somehow has the least control over it. Once the child transitions to adulthood, however, they are expected to advocate for themselves, even though they may never have been engaged in their own healthcare or offered any coping skills.

Family Matters

The FAAR program provides guidance for family interactions involving children with autoimmune diseases.

The inevitable involvement of the patient’s parents and siblings can trigger problems within the family itself, namely depression, social isolation, anxiety, and jealousy. Siblings can easily become jealous of the attention that parents and other authority figures give to the ill child, and of the amount of family time concentrated on his or her medical care. The optimal family situation provides comfort and openness in discussing the child’s disorder, while maintaining both the child’s and the family’s identity. University of Minnesota Professor Joan Patterson’s Family Adjustment and Adaptation Response (FAAR) Model holistically captures this idea in nine basic criteria.2

1) The family must balance the child’s illness with the needs and interests of the other family members.

2) Parents should maintain clear family boundaries between themselves and their children with regard to discipline, bed times, and household chores.

3) Parents and their children can develop communication competence by making sure that the child understands important medical information that pertains to them. By modeling effective communication, parents can instill in their children the ability to respond to others’ needs with the appropriate words or actions.

4) The family should try to attribute positive meaning to their situation by focusing on newfound responsibilities instead of their fear of the disease itself.

5) Parents should demonstrate flexibility by allotting as much time to family activities (like a sporting event) as to medical obligations. A child whose family is able to ‘go with the flow’ will experience life as a “normal kid” rather than as a young adult dealing with an illness.

6) Parents must commit to the family as a unit, remaining a “Mom and Dad” as opposed to separating the sick child from his or her caregivers.

7) The family can engage in active coping, including modeling positive ways of dealing with stress out loud, thinking realistically, using muscle relaxation techniques, and keeping healthy sleep patterns.

8) The family must maintain social integration within their own household and the neighborhood/community.

9) The family needs to develop collaborative relationships with professionals, including doctors, in order to make decisions that are satisfactory to everyone involved in the child’s care, especially the patient. This is a key factor in the child’s immediate and long-term success. Relationships with medical professionals often leave much to be desired when it comes to listening to the child’s needs and wishes. A collaborative relationship creates a safe space where a child can express their wishes and fears about their treatments, helping everyone choose the best therapy.

Although the FAAR model has great potential to engage an entire family affected by chronic illness, it remains mostly a theory rather than an established treatment protocol. The best way to implement it would likely be to give parents the model’s guidelines and hold an initial therapy session to put them into practice. As simple as it seems, the most effective behavior is openness between parents and children, while maintaining the boundaries of parent and child, to ensure that each child gets equal treatment and attention from the parents. Children are expected to mirror this behavior, which can reduce further stress and issues such as sibling jealousy.

Think About It

Interestingly, the most important indicator of a patient’s prognosis is not the diagnosis, but rather the individual’s own attitude. This attitude derives mainly from how the child feels about the disease, but the reactions of parents and other important figures play a huge role in how a child internalizes their ailment. For example, a patient diagnosed with mild asthma who is deeply in denial and very depressed has a poorer prognosis and quality of life than a patient with severe asthma who has a positive outlook. The evidence suggests not only that the mind can exacerbate physical symptoms, but also that stress and immune function are closely linked. Factors such as a positive attitude, a supportive social community, and cognitive behavioral stress management strategies are consistently linked to better immune functioning. Conversely, chronic psychological stress, fear before surgery, and coping strategies based on denial or loss of control are associated with poorer immune functioning. This is especially relevant to the pediatric population, because children have not yet had the natural, or facilitated, opportunity to develop successful coping strategies and social support of their own. A system that accommodates the unique position of this population therefore has the potential to optimize their care and success.

Children with chronic autoimmune illnesses face a unique battle. In many ways, because of their stage in life, they are not yet fully autonomous or even aware of what lies ahead. Children do not always understand the extent of their illness as easily as they perceive that they are “different” from their peers.3 The chronic, cyclic nature of an autoimmune disease comes at the cost of a child’s education, as severe symptoms may force the child to miss school or even be hospitalized. Diseases such as Crohn’s, which can require a child to make frequent, urgent trips to the bathroom, may become not only emotionally embarrassing but also functionally problematic during school or other activities. These children are at the center of their medical world as both a dependent third party and the one who must endure the treatment. In addition to the power their diseases strip from them, these children must face the struggles of daily life and peer groups who are rarely empathetic to their plight.

All Together Now

In many ways, fragments of the FAAR model have already been implemented within family relationships. Cognitive Behavioral Therapy (CBT) is often the first therapy used to help a person adopt a more adaptive thinking style, so that they can interact with the world and handle their emotions in a more relaxed manner. It employs ‘realistic thinking’ to help the individual see what will most likely happen, rather than the improbable outcomes they fear. For a disease like arthritis that often requires physical therapy, CBT is often done in a group format. The proposed therapy works like a group CBT arrangement, aiming to address the importance of social support from peers as well as the optimal cognitive style, coping skills, and stress management. Within the family setting, the use of the FAAR model should provide great support for the ill child and the family at large. For medical professionals, education in using child-friendly language and in helping to preserve their patients’ childhood is critical to the process.

Although children with autoimmune diseases generally end up living different lives than their peers, their care needs to be built around the reminder that they are indeed children and that every opportunity should be made available to them. By providing children with CBT and coping strategies coupled with the FAAR intervention, caregivers can optimize their healthcare to foster and sustain their resilience as they develop and face new and challenging medical situations. No family dreams of having a child with a chronic illness, but a life that is not ideal can still be a beautiful one.

References

1 American Autoimmune and Related Diseases Association, Inc.

2 Patterson, J. M. (2002). Understanding Family Resilience. Journal of Clinical Psychology, 58(3), 233-246.

3 Patterson, J., & Brown, R. W. (1996). Risk and Resilience among Children and Youth with Disabilities. Archives of Pediatrics & Adolescent Medicine, 150, 692-698.

Doggy Genes

Dogs are “man’s best friend,” but what do we know about their origins?

Dogs have been beside us for thousands of years, but until very recently, little was known about their genetic origins or their domestication process. Researchers around the world have been investigating dog genes, from mitochondrial DNA (mtDNA) to complete genomes. The research has given important insight into the origin of the first dogs and into the specific genes behind diseases that humans and dogs share, such as diabetes.

The Origin of Species

Until canine gene research began, many people assumed that dogs were close descendants of wolves that had been domesticated by primitive humans to aid in hunting about 15,000 years ago.1 Gene research has given scientists strong evidence that dogs are, in fact, direct descendants of the grey wolf (Canis lupus), and that they may have been domesticated as early as 45,000 to 135,000 years ago. The domestication of dogs began from separate and distinct populations throughout East Asia, which then interbred and backcrossed. Backcrossing, the breeding of a hybrid offspring with one of its parents or with an individual genetically similar to that parent, allowed successive generations to develop genetically uniform breeds. Researchers distinguished four maternal clades, or matriarchal lines, according to genetic differences. Each clade indicates the particular origin of a breed or group of breeds based on the breed’s genetic background, specifically its mtDNA. The first and largest clade holds the genes of most known breeds, supporting the idea that dogs may have been domesticated earlier than archeological records indicate. The other three clades formed later and encompass more specific and unique dog breeds. Together, the clades give insight into the original maternal lineage of modern dog breeds.2

Custom-Made Companions

Dogs are one of the first examples of human manipulation of nature. Primitive humans initiated the evolution of dogs by breeding specific phenotypes of wolves, even without our modern knowledge of genetics. Through selection against genes for aggression and other traits predominant in wolves, the first domesticated generation of “dogs” began an evolutionary change. Subsequent generations had less harsh or menacing features; limbs and bone structure became smaller, and new traits emerged, such as barking, which is distinct from a howl in tone and pitch.1 Dog breeds were then created by humans who wanted a specific kind of dog: a herder, a hunter, a guard, or a guide. Scientists think that specific dog breeds developed through controlled breeding and possibly pre-natal modifications, although exactly how individual breeds emerged is still unknown. Through very close and controlled breeding, different breeds continued to evolve. Tracing the lineage of each specific modern breed is complicated, however, because mtDNA can only show the mutations that occurred in the early stages of domestication. Any gene modifications found in mtDNA occurred before the creation of modern breeds. In other words, the full extent of gene modification in modern breeds is still very much unknown and is currently being researched.2

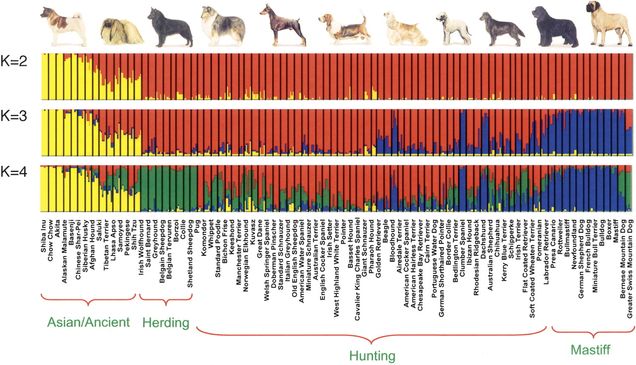

To determine the lineage of individual breeds, researchers must use loci research, which is based on the locations of genes on chromosomes. Loci research detects genetic changes that may have occurred through genetic drift, the random change in allele frequencies in a population from one generation to the next.2 This allows researchers to see divergence in allele frequencies and distinguish between breeds. Further research on loci has shown that dog breeds can be clustered by ancestry. Several clusters have already been determined: K2 includes all breeds of Asian origin, such as the Akita and the Shar Pei; K3 includes mastiff-type dogs, such as the Bulldog and the Boxer; and K4 includes working-type dogs and hunting breeds, such as the Collie and the Sheepdog.2
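The idea of “divergence in allele frequencies” can be made concrete with a toy calculation. The Python sketch below uses invented genotypes for two hypothetical breeds at a single locus, computes how common one allele is in each breed, and summarizes the difference with F_ST, a standard population-genetics measure of divergence that the article itself does not name. All data and function names are illustrative assumptions, not the method used in the cited research.

```python
# Invented genotypes for two hypothetical breeds at one locus: each number is how
# many copies (0, 1 or 2) of allele "A" one dog carries. Illustrative data only.
breed_1 = [2, 2, 1, 2, 2, 1, 2, 2]
breed_2 = [0, 1, 0, 0, 1, 0, 0, 0]


def allele_frequency(genotypes):
    """Share of all allele copies in the sample that are allele A (2 copies per dog)."""
    return sum(genotypes) / (2 * len(genotypes))


def fst(p1, p2):
    """F_ST = (H_T - H_S) / H_T for two equally weighted breeds at one two-allele locus."""
    p_mean = (p1 + p2) / 2
    # Expected heterozygosity if the two breeds were pooled into one population.
    h_total = 2 * p_mean * (1 - p_mean)
    # Average expected heterozygosity within each breed.
    h_within = (2 * p1 * (1 - p1) + 2 * p2 * (1 - p2)) / 2
    return (h_total - h_within) / h_total if h_total > 0 else 0.0


p1, p2 = allele_frequency(breed_1), allele_frequency(breed_2)
print(p1, p2, fst(p1, p2))
# 0.875 0.125 0.5625
```

An F_ST near 0 means the two breeds carry the allele at similar frequencies, while values approaching 1 mean they have diverged sharply at that locus. In practice, researchers combine many loci rather than relying on a single one.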

Mapping the Genome

Early research began in the late 1990s, but advances were minimal because genetic research was predominantly focused on humans and mice. By 2004, however, scientists had created a fully integrated radiation hybrid map of the dog genome. A radiation hybrid map is built by using radiation to break a species’ chromosomes into fragments; the irradiated cells are then fused with cells of another species, so that each resulting hybrid cell retains and copies a random set of the fragments.2 Genes that are usually found together in the same hybrid cells are likely to lie close together on a chromosome, which lets researchers order them into a map. This allowed further research initiatives to identify specific breed genomes and to create a comparative map between human and dog genes. In 2005, the largest canine sequence, the Boxer genome, was made public. It revealed that dog genes replicate at lower rates than human genes and that deletions or insertions of nucleotide bases are rare. Following this discovery, mapping other breeds became easier, because shared sequences of linked genes divide dogs into two groups with similar gene structures. One group probably developed from the first domestication and the second from specific breeding. Therefore, genome-wide association mapping can be used for the later construction of breed genomes.2

Benefits of Canine Genome Research

Because the canine genome has fewer reported genes than the human genome, and is more primitive in content, it allows easier insight into disorders present in both humans and dogs, such as cancer, diabetes and narcolepsy. Diseases linked to specific dog genes and loci can help identify similarly linked genes in humans. This advancement allows human biologists to identify the mechanisms that may cause certain diseases, and the interactions between genes and their effects on diseases. For example, the discovery of the gene responsible for narcolepsy in dogs has shed light on how certain human genes relate to our sleep patterns and disorders. Canine genetics has also opened a new approach to cancer research: by identifying the sources, origins, and development of cancer and tumors in dogs through canine genes, researchers may better understand genetic predisposition and susceptibility to cancer.

References

1 Dogs Decoded: Nova. Dir. Dan Childs. Nova, 2010. Film.

2 Ostrander, E. A. & R. K. Wayne. (2005). The canine genome. Genome Research, 15: 1706-1716. Web. <http://genome.cshlp.org/content/15/12/1706.full>.

Bearing the Cold

Many large carnivores, like bears, don’t actually "hibernate".