12 Steps to a Fast Multipole Method on GPUs

by Dr Rio Yokota

Boston University

Why would I want to learn FMM?

The combination of algorithmic acceleration and hardware acceleration can have tremendous impact. The FMM is a fast algorithm for calculating matrix vector multiplications in O(N) time, and it runs very fast on GPUs. Its combination of high degree of parallelism and O(N) complexity make it an attractive solver for the Peta-scale and Exa-scale era. It has a wide range of applications, e.g. quantum mechanics, molecular dynamics, electrostatics, acoustics, structural mechanics, fluid mechanics, and astrophysics.

What are the prerequisites?

- Basic understanding of linear algebra and calculus

- Some experience with C or C++

The aim of this course

This course will provide a hands-on tutorial with simple exercises that will lead to a full FMM code that runs on GPUs. Each step is carefully planned so that there is no sudden jump in the difficulty. During the tutorial each attendee will have remote access to the GPU cluster in Nagasaki Japan. There will be Teaching Assistants walking around to assist you, so that no one is left behind.

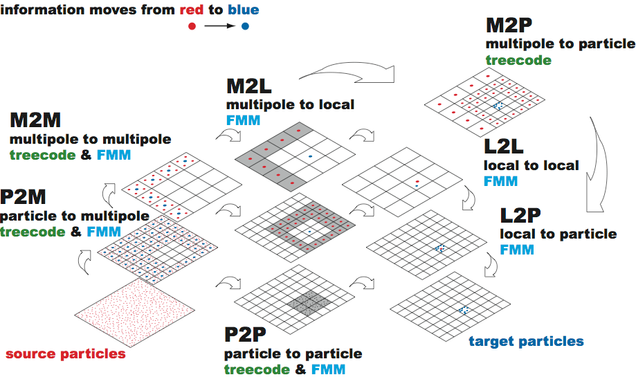

The 12 steps

- step 1 : direct N-body

- step 2 : multipole expansion

- step 3 : tree structure

- step 4 : link lists, interaction lists

- step 5 : treecode

- step 6 : M2L translation

- step 7 : single level FMM

- step 8 : M2M,L2L translation

- step 9 : multi-level FMM

- step 10 : direct N-body on GPUs

- step 11 : P2P on GPUs

- step 12 : M2L on GPUs

Printable course description

Supplementary material

- http://www.ics.uci.edu/~ihler/papers/ihler_area.pdf

- http://math.nyu.edu/faculty/greengar/shortcourse_fmm.pdf

- http://www.umiacs.umd.edu/~ramani/cmsc878R/index.html