Removing Memory as a Noise Factor

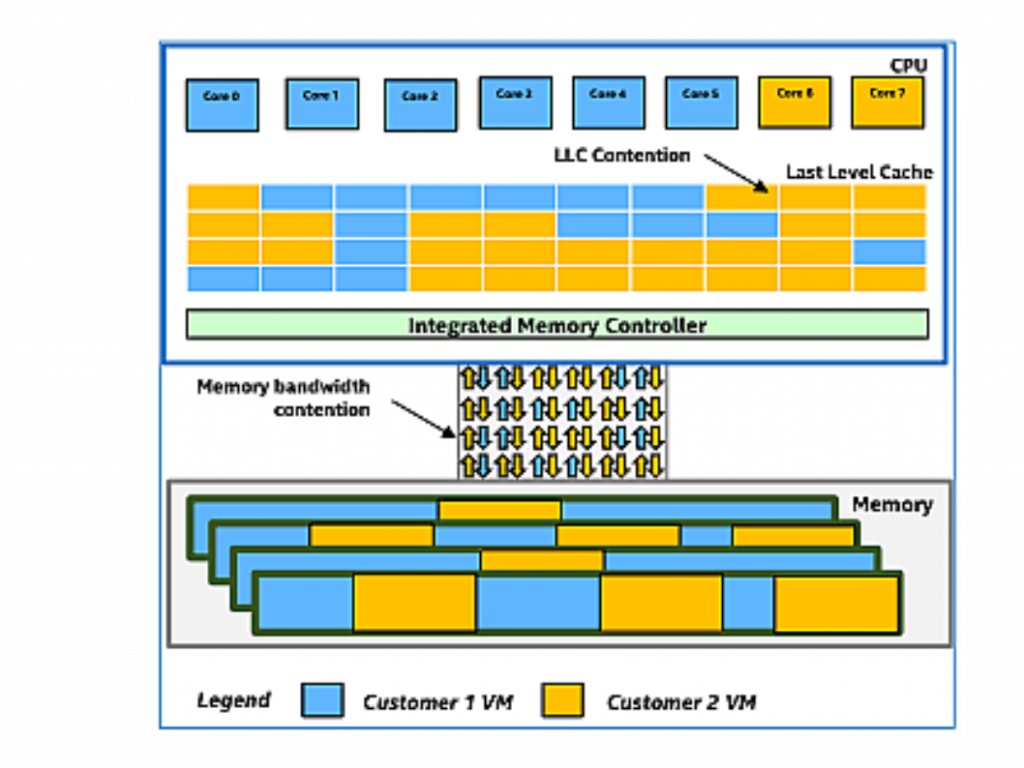

Memory bandwidth is increasingly the bottleneck in modern systems, yet until recently it was a resource we could not schedule. As a result, a workload’s performance can vary widely depending on what else is running on the same server, which hurts 99th-percentile tail latency, a metric of growing importance in modern distributed systems. Moreover, the rise of high-performance computing applications, such as machine learning and real-time systems, demands more deterministic performance, even in shared environments. The common alternative is to avoid running more than one workload on a server, which reduces system utilization. Recent processors have started introducing the first mechanisms to monitor and control memory bandwidth. Can we use these mechanisms to keep machines fully utilized while ensuring that primary workloads have deterministic performance? This project presents early results from using Intel’s Resource Director Technology (RDT), along with some insight into this new hardware support. The project also examines an algorithm that uses these tools to provide deterministic performance across different workloads.
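To make the monitor-and-control idea concrete, here is a minimal sketch of one step of a feedback loop that throttles a background workload when the primary workload falls short of a bandwidth target. It is an illustration under stated assumptions, not the project's algorithm, which this page does not describe. The function names, the step size, the 10 to 100 percent range, and the bandwidth-target policy are all hypothetical. The only external detail borrowed is the `MB:<domain>=<percent>` line format that the Linux resctrl interface uses for memory bandwidth allocation.

```python
def format_mba_schemata(percent_by_domain):
    """Build a resctrl-style 'MB:' schemata line.

    percent_by_domain maps a cache/socket domain id to a throttle
    percentage, e.g. {0: 50, 1: 100} -> 'MB:0=50;1=100'.
    """
    parts = [f"{domain}={pct}" for domain, pct in sorted(percent_by_domain.items())]
    return "MB:" + ";".join(parts)


def next_throttle(current_pct, primary_bw, target_bw, step=10, lo=10, hi=100):
    """Hypothetical policy for one control step.

    If the primary workload's measured bandwidth is below its target,
    tighten the background workload's allocation by `step`; otherwise
    relax it by `step`. The result is clamped to [lo, hi]. The defaults
    are assumptions; real hardware reports its own minimum and granularity.
    """
    if primary_bw < target_bw:
        proposed = current_pct - step
    else:
        proposed = current_pct + step
    return max(lo, min(hi, proposed))


if __name__ == "__main__":
    # Primary workload measured at 8 GB/s against a 10 GB/s target:
    # the background allocation on domain 0 is tightened from 50 to 40.
    pct = next_throttle(50, primary_bw=8.0, target_bw=10.0)
    print(format_mba_schemata({0: pct, 1: 100}))
```

On a real system the resulting line would be written to the `schemata` file of the background workload's resctrl group, and the measurement would come from the hardware monitoring counters. Both steps are left out here so the sketch runs without RDT-capable hardware.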

Additional Information

The project is affiliated with the Mass Open Cloud. For additional information, please view the Removing Memory as a Noise Factor project page on Red Hat Research.