The Robot as Decider

Teaching computers how to learn

In The Jetsons, the helper robot of the future handles all manner of chores. Rosie can do the laundry, pick up the groceries, and keep Elroy out of trouble.

In today’s reality, all kinds of artificial intelligences (AIs) work for us: scouring the web for information, diagnosing car trouble, even performing surgeries. But none of these specialized machines could handle all of those tasks, or even switch between a few of them. In fact, a single everyday errand would pose a challenge for a traditional robot. A real-world Rosie would be stymied by a stray shopping cart in her path, and she wouldn’t know what to do if Jane’s favorite brand of margarine were out of stock.

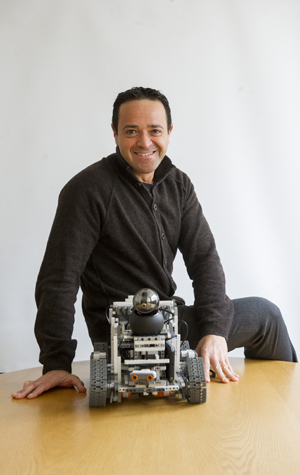

But Massimiliano “Max” Versace, a College of Arts & Sciences research assistant professor and director of BU’s Neuromorphics Laboratory, aims to fix that. His team is building the brain of a versatile, general-purpose robot—maybe not a humanoid, wisecracking helper, but, let’s say, a really smart dog. And with a grant from NASA, that pup may soon be prowling other planets. The Neuromorphics Lab is part of BU’s CompNet (Center for Computational Neuroscience and Neural Technology) and the National Science Foundation–sponsored CELEST (Center of Excellence for Learning in Education, Science and Technology).

Versace (GRS’07) is working at the cutting edge of a convergence of neuroscience, computer processing, and other disciplines that promises to yield a better robot, one with a “brain” modeled after that of a mammal. He believes conventional robots are hamstrung by their basic architecture, which has changed little since the 1960s. In that design, often called the von Neumann architecture, even a powerful supercomputer’s processing unit sits apart from its memory stores, so data must constantly shuttle between the two. The tiny delay on each trip goes unnoticed because a typical AI today is devoted to a single task or a narrow set of tasks.

But those delays would quickly multiply if a robot were asked to step outside a narrow field (adding car parts on an assembly line, say, or answering questions on Jeopardy!) and into an unpredictable situation, such as exploring the ocean floor or caring for an elderly person. To prepare a robot for every possibility in that broader role, its programmers would have to add so many lines of code that the machine would need as much power as the entire Charles River Campus uses.

The brain of an ordinary rat, on the other hand, runs on the energy equivalent of a Christmas-tree bulb, Versace and colleague Ben Chandler (GRS’14) write in an article in IEEE Spectrum, a publication of the Institute of Electrical and Electronics Engineers, yet the rodent can successfully explore unfamiliar tunnels, avoid mousetraps, follow a food aroma coming from an unexpected source—all things that might befuddle a robot.

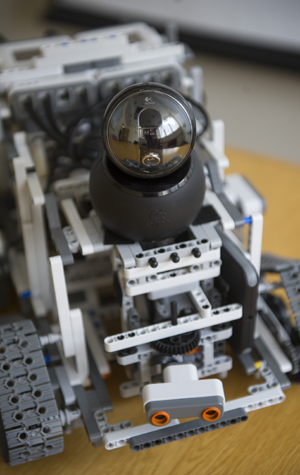

It’s that ability to learn and adapt that Versace is working to replicate in an artificial brain. To do it, he’s made use of a breakthrough electrical component developed by Hewlett-Packard, called a memristor. Versace and his team have assembled networks of these microscopic devices to mimic the brain’s neurons and synapses, saving a massive amount of energy while letting information be stored and processed in the same place at the same time, as it is in our mammalian heads.
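For readers curious how a grid of memristors can store and compute at once, here is a minimal Python sketch of the textbook “crossbar” idea. It is a generic illustration, not the Neuromorphics Lab’s design; the function name and the conductance and voltage values are hypothetical. Each device’s conductance serves as a stored synaptic weight, and simple circuit laws make the grid itself produce a weighted sum of its inputs.

```python
# Idealized memristor crossbar (illustration only; values are hypothetical).
# Each conductance G[i][j], in siemens, stores one synaptic weight.
# Driving row i with voltage V[i] pushes a current G[i][j] * V[i] into
# column j (Ohm's law), and each column wire adds up the currents it
# receives (Kirchhoff's current law). The stored weights therefore
# compute a weighted sum right where they are kept.

def crossbar_column_currents(conductances, voltages):
    """Return the total current (amperes) collected on each column wire."""
    if len(conductances) != len(voltages):
        raise ValueError("need one input voltage per row")
    currents = [0.0] * len(conductances[0])
    for row, v in zip(conductances, voltages):
        for j, g in enumerate(row):
            currents[j] += g * v
    return currents


if __name__ == "__main__":
    # Hypothetical 2 x 2 array: two inputs (rows), two outputs (columns).
    G = [[1e-3, 2e-3],
         [3e-3, 4e-3]]
    V = [0.5, 0.25]  # input voltages in volts
    print(crossbar_column_currents(G, V))
```

Real memristors are analog and imperfect, so this idealized arithmetic leaves out noise, wire resistance, and how the conductances are adjusted during learning.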

In the lab’s first series of experiments, in 2011, the BU team built a rodent-size brain and let it loose in a virtual tub of water. With training, rather than explicit programming, the “animat” eventually figured out on its own how to find dry ground.

Robo-Rover

Once Versace and colleagues demonstrated that success, the National Aeronautics and Space Administration came calling and tapped the Neuromorphics Lab for two high-altitude projects.

In the first, the researchers have been charged with designing a Mars explorer that will operate autonomously, navigating and collecting information using passive rather than active sensors.

“An active sensor is, for instance, a laser range finder, which shoots laser beams to estimate the distance from the robot to a wall or object, or even to estimate object size,” explains Versace. “Biology does this task with a passive sensor, the eye, which absorbs energy—light—from the environment rather than emitting it. An active sensor means spending more money and having more weight to carry—sensor plus battery. This is just one example in a trend that sees traditional robots burning tons of energy to do tasks that in biology take a few calories.”

Last month, after repeated tweaks, the lab’s virtual rover, outfitted with biological-eye-like passive sensors, successfully learned the spatial layout of, and identified science targets within, a highly realistic virtual Martian surface. Versace and colleagues are now testing the system in a real-life metal-and-plastic robot in a physical “Mars yard” they built in the Neuromorphics Lab.

The lab’s second NASA project also marshals mammal-style sight, but for a use closer to home. By fall 2015, the Federal Aviation Administration will fully open U.S. airspace to unmanned aerial vehicles (UAVs)—with the common-sense provision that the machines must be at least as adept as human pilots at sensing and avoiding oncoming objects.

The biological advantage Versace and his colleagues have identified in humans is our sense of optic flow. We don’t typically think about it as we walk down the street, but we perceive our own forward motion through the way the scene changes around us: stationary objects in the distance seem to grow gradually larger, while the stationary objects we’re passing seem to sweep by more quickly.
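That growth can be turned into a number. A classic estimate from vision research, often called tau, divides an object’s apparent (angular) size by the rate at which that size is increasing; for an object closing at a constant speed, the result approximates the seconds left before contact. The Python sketch below illustrates only that general idea and is not the lab’s algorithm; the function names, the 10-meter aircraft, the frame interval, and the two-second danger threshold are all hypothetical.

```python
import math

# Hypothetical alarm threshold, in seconds before contact.
DANGER_SECONDS = 2.0


def angular_size(width_m, distance_m):
    """Visual angle (radians) subtended by an object of the given width."""
    return 2.0 * math.atan(width_m / (2.0 * distance_m))


def time_to_contact(theta_prev, theta_now, dt):
    """Estimate seconds until contact from two successive angular sizes.

    Uses tau = theta / (d theta / dt), which holds for small angles and a
    constant closing speed. Returns infinity if the object is not expanding.
    """
    rate = (theta_now - theta_prev) / dt
    if rate <= 0.0:
        return math.inf  # steady or shrinking: not on a collision course
    return theta_now / rate


def is_dangerous(theta_prev, theta_now, dt):
    """Flag an object whose estimated time to contact is below the threshold."""
    return time_to_contact(theta_prev, theta_now, dt) < DANGER_SECONDS


if __name__ == "__main__":
    # A hypothetical 10 m aircraft closing at 100 m/s, seen 0.1 s apart.
    dt = 0.1
    for start in (2000.0, 150.0):
        before = angular_size(10.0, start)
        after = angular_size(10.0, start - 100.0 * dt)
        tau = time_to_contact(before, after, dt)
        print(f"{start:.0f} m away: tau = {tau:.2f} s, "
              f"dangerous = {is_dangerous(before, after, dt)}")
```

The appeal of this cue is that it needs no range finder: the distance and speed never enter the calculation, only how fast the image is swelling.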

When one object moves across our field of vision faster than the rest, we quickly zero in on it, recognizing it as something we may need to change course to avoid.

For a computer, this is not always so obvious, largely because a conventional computer has no fear. That seems like an asset in a Daredevil comic book, but for an entity piloting an airplane, it is a decided liability. As it turns out, our biological anxiety is often an indispensable mode of self-preservation.

That’s why Versace and company are testing various algorithms that have the pilot AI experience something like pain. During repeated virtual flight tests, the AI is punished for colliding with an oncoming plane and rewarded for avoiding it. “Our task is to build a brain that senses when these obstacles start expanding,” says Neuromorphics Lab postdoctoral associate Timothy Barnes, “and decides early on, is it dangerous or not? And if so, to make a maneuver to avoid it.”
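The reward-and-punishment training Barnes describes is the basic idea behind reinforcement learning. The Python sketch below is a deliberately tiny illustration, not the lab’s method: a simulated pilot repeatedly either holds course or evades, receives a reward or penalty, and nudges its running estimate of each choice toward what it just experienced. The reward values (-10 for a collision, -0.5 for a maneuver, +1 for an uneventful pass), the names, and the learning parameters are all assumptions.

```python
import random

ACTIONS = ("hold_course", "evade")


def reward(threat, action):
    """Hypothetical scheme: a collision is 'pain' (-10), a maneuver has a
    small cost (-0.5), and an uneventful flight earns +1."""
    if action == "evade":
        return -0.5
    return -10.0 if threat else 1.0


def train(episodes=2000, epsilon=0.1, alpha=0.1, seed=0):
    """Learn a value estimate for each (situation, action) pair by trial and error."""
    rng = random.Random(seed)
    # q[threat][action] is the pilot's running estimate of the reward.
    q = {threat: {a: 0.0 for a in ACTIONS} for threat in (False, True)}
    for _ in range(episodes):
        threat = rng.random() < 0.5  # is a plane on a collision course?
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # occasionally explore at random
        else:
            action = max(ACTIONS, key=lambda a: q[threat][a])  # best guess so far
        r = reward(threat, action)
        # Move the estimate a fraction alpha toward the reward just received.
        q[threat][action] += alpha * (r - q[threat][action])
    return q


def policy(q):
    """Pick the action with the highest learned value in each situation."""
    return {threat: max(ACTIONS, key=lambda a: q[threat][a]) for threat in q}


if __name__ == "__main__":
    learned = train()
    print(policy(learned))
```

After training, the pilot should prefer to evade when a plane is oncoming and to hold course otherwise. No line of the code states that rule; it emerges from the rewards and penalties alone.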

Programming a robot to experience pain and anxiety? Is that asking for trouble? What if the robots one day rebel against their flesh-and-blood creators? That’s what Geek magazine asked Versace recently. The BU professor doesn’t find the scenario likely.

“This will give us insight into how the brain works,” he says. “Learn by creating is probably the best advice I can give to anybody who goes into a scientific field. If you are able to re-create what you think you know, you will have a much more powerful understanding of what you are trying to study.”

Patrick Kennedy can be reached at plk@bu.edu.
