Previous Projects

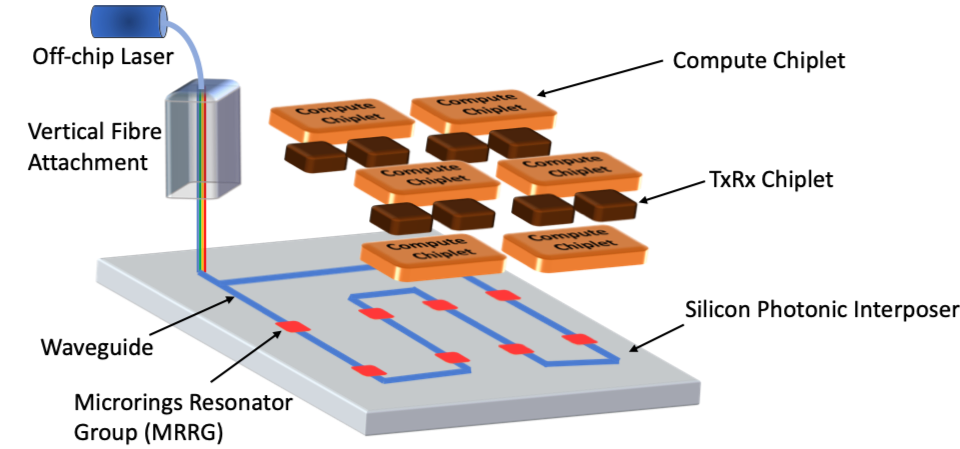

Reclaiming Dark Silicon via 2.5D Integrated Systems with Silicon-Photonic Networks

Funding: NSF; Collaboration: CEA-LETI, France

The design of today’s leading-edge systems is fraught with power, thermal, variability, and reliability challenges. As a society, we increasingly rely on a variety of rapidly evolving computing domains, such as cloud, internet-of-things, and high-performance computing. The applications in these domains exhibit significant diversity and require more threads and much larger data transfers than applications of the past. Moreover, power and thermal constraints limit the number of transistors that can be used simultaneously, which has led to the Dark Silicon problem. Meeting the needs of next-generation applications calls for novel design and management approaches that let computing nodes operate close to their peak capacity. This project uses 2.5D integration technology with silicon-photonic networks to build heterogeneous computing systems that provide the parallelism, heterogeneity, and network bandwidth these applications demand. To this end, we investigate the complex cross-layer interactions among devices, architecture, applications, and their power/thermal characteristics, and we design a systematic framework to accurately evaluate and harness the true potential of 2.5D integration technology with silicon-photonic networks. Specific research tasks focus on cross-layer design automation tools and methods, including pathfinding enablement, for the design and management of 2.5D integrated systems with silicon-photonic networks.

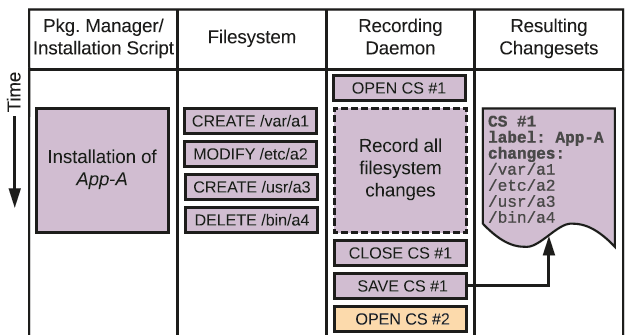

Scalable Software and System Analytics for a Secure and Resilient Cloud

Funding: IBM Research

Today’s Continuous Integration/Continuous Delivery (CI/CD) trends encourage rapid design of software using a wide range of customized, off-the-shelf, and legacy software components, followed by frequent updates that are immediately deployed on the cloud. Together, this component diversity and breakneck pace of development amplify the difficulty of identifying, localizing, and fixing problems related to performance, resilience, and security. Furthermore, existing approaches that rely on human experts have limited applicability to modern CI/CD processes, as they are fragile, costly, and often not scalable.

This project aims to address the gap in effective cloud management and operations with a concerted, systematic approach to building and integrating AI-driven software analytics into production systems. We aim to provide a rich selection of heavily automated “ops” functionality as well as intuitive, easily accessible analytics to users, developers, and administrators. In this way, our longer-term aim is to improve performance, resilience, and security without incurring high operational costs.

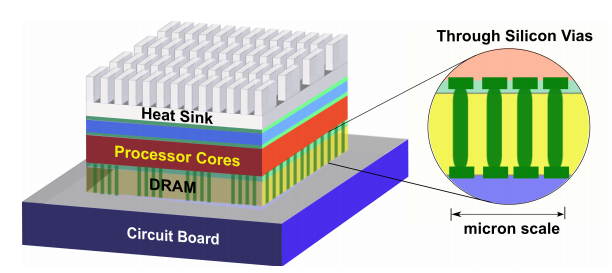

3D Stacked Systems for Energy‐Efficient Computing: Innovative Strategies in Modeling and Runtime Management

Funding: NSF CAREER and DAC Richard Newton Graduate Student Scholarship

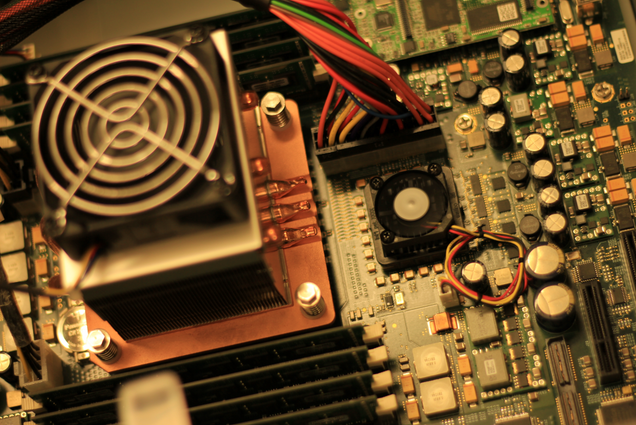

3D stacking is an attractive method for designing high-performance chips: it provides high transistor integration densities, improves manufacturing yield due to smaller chip area, reduces wire length and capacitance, and enables heterogeneous integration of different technologies on the same chip. 3D stacking, however, comes with several key challenges, such as higher on-chip temperatures and a lack of mature design and evaluation tools.

This project focuses on several key aspects that will enable cost-efficient design of future high-performance 3D stacks: (1) thermal modeling and management of 3D systems; (2) modeling and control of novel cooling techniques (e.g., microchannel-based liquid cooling and phase-change materials) to improve cooling efficiency; (3) architecture-level performance evaluation and optimization of 3D design strategies to maximize the performance and energy efficiency of real-life applications; (4) exploration of heterogeneous integration opportunities, such as stacking processors with DRAM layers or with silicon-photonic network layers.
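To illustrate why stacking tends to raise on-chip temperatures, here is a minimal sketch of a one-dimensional steady-state thermal resistance model. It is not the modeling tool developed in this project. It assumes that all heat leaves through a heat sink below layer 0, and the function name, resistance values, and ambient temperature are hypothetical choices made for illustration.

```python
from typing import List, Sequence


def stack_temperatures(
    powers_w: Sequence[float],
    resistances_k_per_w: Sequence[float],
    ambient_c: float = 45.0,
) -> List[float]:
    """Steady-state layer temperatures for a 1D stack (illustrative only).

    Layer 0 is nearest the heat sink. resistances_k_per_w[i] is the thermal
    resistance between layer i and the element below it (the heat sink and
    ambient for i == 0). Assumption: no heat escapes from the top or sides.
    """
    if len(powers_w) != len(resistances_k_per_w):
        raise ValueError("need exactly one resistance per layer")
    temps = []
    temp = ambient_c
    for i, resistance in enumerate(resistances_k_per_w):
        # Heat from layer i and every layer above it flows through this resistance.
        heat_through_w = sum(powers_w[i:])
        temp += resistance * heat_through_w
        temps.append(temp)
    return temps
```

With these assumed values, a single 10 W layer behind a 0.5 K/W path sits at about 50 °C, while stacking a second 10 W layer on top (with 0.2 K/W between the layers) raises the bottom layer to about 55 °C and puts the top layer at about 57 °C. The layer farthest from the heat sink is the hottest, which is one reason thermal modeling and cooling control matter for 3D stacks.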

Energy-Efficient Mobile Computing

Mobile devices handle diverse workloads ranging from simple daily tasks (e.g., text messaging, e-mail) to complex graphics and media processing while operating under limited battery capacities. Growing computational power and heat densities in modern mobile devices also pose thermal challenges (e.g., elevated chip, battery, and skin temperatures). Because cooling capabilities are insufficient, these challenges cause frequent throttling and, in turn, undesired performance fluctuations. Designing practical management solutions is complicated by the diversity in computational needs of different software programs and by the added complexity of the hardware architecture (e.g., specialized accelerators and heterogeneous CPUs). Addressing these concerns requires revisiting existing management techniques in mobile devices to improve both thermal and energy efficiency without sacrificing user experience.

Our research on the energy and thermal efficiency of mobile devices focuses on (1) designing lightweight online frameworks for monitoring energy/thermal status and for assessing the performance sensitivity of applications to hardware and software tunables; (2) developing practical runtime management strategies that minimize energy consumption and mitigate thermally induced performance losses while preserving an acceptable user experience; (3) generating software tools and workload sets that enable evaluation of emerging mobile workloads under realistic usage profiles.

Managing Server Energy Efficiency

The diversity of the elements contributing to computing energy efficiency (e.g., CPUs, memories, cooling units, software application properties, and the availability of operating system controls and virtualization) requires system-level assessment and optimization. Our work on managing server energy efficiency focuses on designing: (1) the sensing and actuation mechanisms a server node needs to operate at a desired dynamic power level (e.g., power capping); (2) resource management techniques on native and virtualized systems that let several software applications share available resources efficiently; (3) cooling control mechanisms that are aware of the inter-dependence of performance, power, temperature-dependent leakage power, and cooling power.
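As a rough illustration of the power capping idea in item (1), the sketch below steps a node through discrete frequency levels until the sensed power fits under a cap. It is a simplified sketch and not the mechanism developed in this work. The names `cap_power` and `toy_power_model`, the frequency levels, and the cubic power model are all hypothetical, and the controller probes the next level directly, which a real system could only do by actually switching frequency and measuring.

```python
from typing import Callable, Sequence


def cap_power(
    freqs_ghz: Sequence[float],
    read_power_w: Callable[[float], float],
    cap_w: float,
    steps: int = 20,
) -> float:
    """Return the highest frequency level whose sensed power is within the cap.

    read_power_w stands in for a power sensor reading taken at a given
    frequency setting (hypothetical interface).
    """
    levels = sorted(freqs_ghz)
    idx = len(levels) - 1  # start at the highest frequency
    for _ in range(steps):
        power = read_power_w(levels[idx])
        if power > cap_w and idx > 0:
            idx -= 1  # over the cap: throttle down one level
        elif idx + 1 < len(levels) and read_power_w(levels[idx + 1]) <= cap_w:
            idx += 1  # headroom available: speed up one level
        else:
            break  # settled, or already at the lowest level
    return levels[idx]


def toy_power_model(freq_ghz: float) -> float:
    """Hypothetical node power: 40 W static plus a cubic dynamic term."""
    return 40.0 + 5.0 * freq_ghz ** 3
```

With the toy model and levels of 1.0, 1.5, 2.0, 2.5, and 3.0 GHz, a 100 W cap settles at 2.0 GHz (80 W), because 2.5 GHz would draw about 118 W. If even the lowest level exceeds the cap, the sketch returns the lowest level and does not power the node down.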

Simulation and Management of HPC Systems

Additional levels of management and planning decisions take place at the data center level. These decisions, such as job allocation across the computing nodes, impact energy consumption and performance. HPC applications, e.g., scientific computing loads, typically occupy many server nodes, run for a long time, and include heavy data exchange and communication among the threads of the application.

Our work in this domain focuses on optimizing the cooling energy of the data center and the performance of HPC applications simultaneously. This work includes developing simulation methods that can accurately estimate power and performance of realistic workloads running on large-scale systems with hundreds or thousands of nodes. We also design strategies to assess and optimize system resilience.