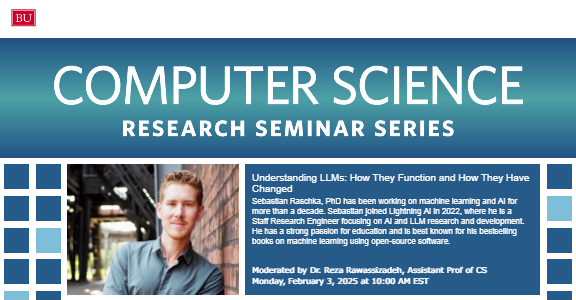

Understanding LLMs: How They Function and How They Have Changed

Guest Speaker: Dr. Sebastian Raschka, Staff Research Engineer at Lightning AI

Moderated by Dr. Reza Rawassizadeh, Associate Professor of Computer Science

Abstract: This talk will guide the audience through the key stages of developing large language models (LLMs). We’ll start by explaining how these models are built, including how their architectures are coded. Next, we’ll discuss pretraining and fine-tuning, detailing what each stage involves and why it matters. Attendees will also learn about recent developments, including how model architectures have evolved from early GPT models to Llama 3.1. Finally, the talk will provide an overview of recent training recipes, which include multi-stage pretraining and post-training.

Bio: Sebastian Raschka, PhD, has been working on machine learning and AI for more than a decade. Sebastian joined Lightning AI in 2022 as a Staff Research Engineer, focusing on AI and LLM research and development. Before that, Sebastian was an assistant professor in the Department of Statistics at the University of Wisconsin-Madison, focusing on deep learning and machine learning research. He has a strong passion for education and is best known for his bestselling books on machine learning with open-source software. You can find out more about Sebastian’s current projects at https://sebastianraschka.com/.