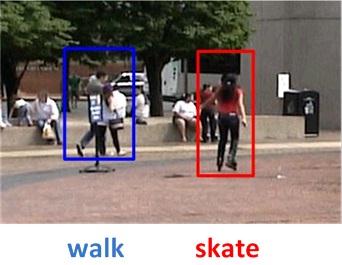

Action Recognition

Kai Guo (PhD ’11)

Prof. Prakash Ishwar, Prof. Janusz Konrad

Funding: National Science Foundation

Award-Winning Research: This research won the “Aerial View Activity Classification Challenge” at ICPR-2010 and the best paper award at AVSS-2010.

Background: Algorithms for automatic detection and recognition of human actions, such as running, jumping, or kicking, from digital video recorded by cameras have applications that range from homeland security (e.g., to detect thefts, assaults, and suspicious activity) and healthcare (e.g., to monitor the actions of elderly patients and detect life-threatening symptoms) to ecological monitoring (e.g., to analyze the behavior of animals in their natural habitats) and automatic sign-language recognition (e.g., to assist the speech-impaired). Action recognition is a challenging problem on account of scene complexity, camera motion, and action variability (the same action performed by different people may look quite different). Although many methods have been developed in the last decade, the problem is far from being considered solved in real-life scenarios where storage and processing resources are severely limited and decisions need to be made in real time.

Description: The success of an action recognition algorithm depends on how actions are represented and what classification method is used. In the past decade, many action representations have been proposed, such as interest-point models, local motion models, and silhouette-based models, and many classifiers have been adopted, such as nearest-neighbor, SVM, boosting, and graphical-model-based classifiers. To describe actions, we adopt a “bag of dense local features” approach in which each feature vector measures either the shape of a moving object’s silhouette or its optical flow. Since such a representation is overly dense, we reduce its dimensionality by computing the empirical covariance matrix, which captures the second-order statistics of the collection of feature vectors. In addition to being surprisingly discriminative, covariance matrices have low computational and storage requirements. As for classifiers, we have investigated the nearest-neighbor (NN) classifier as well as one based on sparse representation. The NN classifier is simple and straightforward: a query video is assigned the class label of the closest training video, where closeness is measured relative to a suitable metric. The NN classifier achieves remarkable recognition performance for an affine-invariant Riemannian distance metric based on mapping the convex cone of covariance matrices to the Euclidean space of symmetric matrices. We have also investigated a sparse-representation classifier, developed earlier for face recognition by Wright et al., by applying it to the logarithm of covariance matrices. In this case, the log feature covariance matrix of a query video is approximated as the sparsest linear combination of the log feature covariance matrices of all training videos; the corresponding coefficients indicate the action label of the query video.
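The covariance-plus-nearest-neighbor pipeline described above can be illustrated with a minimal Python sketch. It is not the authors' implementation (the results below were obtained under Matlab). All function names are hypothetical, the features are assumed to be already extracted as an N-by-d array per video, and the distance used here is assumed to be the Frobenius norm of the difference of matrix logarithms, which is one common way to compare covariance matrices after mapping them to the space of symmetric matrices. The small ridge added to the covariance is also an assumption, included only to keep the logarithm well defined.

```python
import numpy as np

def covariance_descriptor(features, ridge=1e-6):
    """Summarize an (N, d) collection of local feature vectors by a (d, d) covariance."""
    features = np.asarray(features, dtype=float)
    cov = np.cov(features, rowvar=False)
    # Assumption: a small ridge keeps the matrix strictly positive definite.
    return cov + ridge * np.eye(cov.shape[0])

def log_spd(matrix):
    """Matrix logarithm of a symmetric positive-definite matrix via eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(matrix)
    return (eigvecs * np.log(eigvals)) @ eigvecs.T

def log_covariance_distance(cov_a, cov_b):
    """Frobenius distance between the logarithms of two covariance matrices."""
    return np.linalg.norm(log_spd(cov_a) - log_spd(cov_b), ord="fro")

def nearest_neighbor_label(query_cov, train_covs, train_labels):
    """Assign the label of the training descriptor closest to the query."""
    distances = [log_covariance_distance(query_cov, c) for c in train_covs]
    return train_labels[int(np.argmin(distances))]

if __name__ == "__main__":
    # Synthetic stand-in for per-video features; real features would come
    # from silhouettes or optical flow.
    rng = np.random.default_rng(0)
    scales = {"walk": np.array([1.0, 1.0, 1.0]), "run": np.array([5.0, 0.2, 1.0])}
    train_covs, train_labels = [], []
    for label, scale in scales.items():
        train_covs.append(covariance_descriptor(rng.normal(size=(500, 3)) * scale))
        train_labels.append(label)
    query = covariance_descriptor(rng.normal(size=(500, 3)) * scales["run"])
    print(nearest_neighbor_label(query, train_covs, train_labels))
```

Because each video is reduced to a single d-by-d matrix, storage and comparison cost depend on the feature dimension d rather than on the number of feature vectors, which is consistent with the low resource requirements noted above.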

Results: The developed action recognition framework has been tested on the publicly available Weizmann and KTH datasets and the recent low-resolution UT-Tower dataset. Our approach attains a correct classification rate of up to 100% on the Weizmann dataset, 98.47% on the KTH dataset, and 97.22% on the UT-Tower dataset. The proposed algorithms are lightweight in terms of memory and CPU requirements, and have been implemented to run at video rates on a modern CPU under Matlab.

Find more information and results at http://vip.bu.edu/projects/video/action-recognition

Publications:

- K. Guo, P. Ishwar, and J. Konrad, “Action recognition from video by covariance matching of silhouette tunnels,” in Proc. Brazilian Symp. on Computer Graphics and Image Proc., pp. 299-306, Oct. 2009.

- K. Guo, P. Ishwar, and J. Konrad, “Action change detection in video by covariance matching of silhouette tunnels,” in Proc. IEEE Int. Conf. Acoustics Speech Signal Processing, pp. 1110-1113, Mar. 2010.

- K. Guo, P. Ishwar, and J. Konrad, “Action recognition in video by sparse representation on covariance manifolds of silhouette tunnels,” in Proc. Int. Conf. Pattern Recognition (Semantic Description of Human Activities Contest), Aug. 2010, [SDHA contest web site], Winner of Aerial View Activity Classification Challenge.

- K. Guo, P. Ishwar, and J. Konrad, “Action recognition using sparse representation on covariance manifolds of optical flow,” in Proc. IEEE Int. Conf. Advanced Video and Signal-Based Surveillance, pp. 188-195, Aug. 2010, AVSS-2010 Best Paper Award.

- K. Guo, “Action recognition using log-covariance matrices of silhouette and optical-flow features,” PhD thesis, Boston University, Sept. 2011.

- K. Guo, P. Ishwar, and J. Konrad, “Action Recognition from Video using Feature Covariance Matrices,” IEEE Transactions on Image Processing, vol. 22, no. 6, pp. 2479-2494, Jun. 2013.

Website: http://vip.bu.edu