Video Analytics

Huseyin Ozkan (MS ’11)

Prof. Venkatesh Saligrama, Prof. Janusz Konrad, Prof. Pierre-Marc Jodoin (University of Sherbrooke, Canada)

Funding: National Science Foundation, National Geospatial-Intelligence Agency

Background: Video camera networks have proliferated in the U.S. and abroad, appearing everywhere from airports to border crossings to city streets. Today more than 30 million surveillance cameras produce nearly 4 billion hours of video footage each week, and this continuous stream of data exceeds the processing capacity of human analysts. Although algorithms that automatically search the data for suspicious activity exist, they are often inadequate, especially in busy urban areas. The problem is compounded by algorithm complexity, which demands significant computing power that is usually available only at a central server. This in turn causes network congestion, since every video stream must be transmitted to the server for processing.

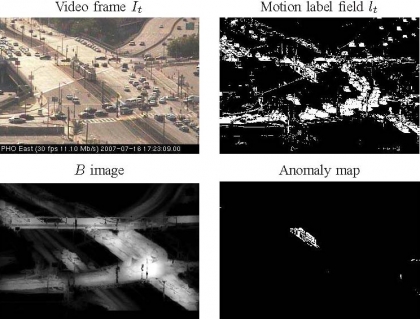

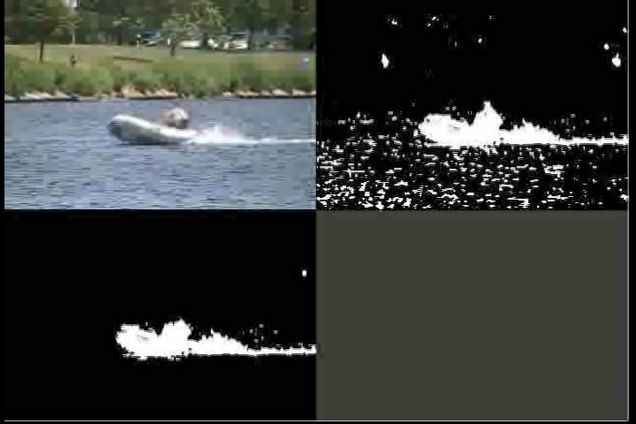

Description: The simplest form of anomaly detection is background subtraction: objects that move within, appear in, or disappear from the camera’s field of view are deemed anomalous. Although this may be adequate for restricted-area access control, where merely detecting movement is sufficient, it is not enough for detecting unusual behavior. However, background subtraction results capture the dynamics of moving objects while stripping away the photometric content. We leverage this in an abnormal behavior detection framework that can detect abnormally high activity, departure from typical activity, and similar events, all within the same formulation. By aggregating the motion labels for a given pixel over all frames of a training sequence, we build a low-dimensional representation against which we test the same aggregate computed from the observed sequence. If the observed aggregate either exceeds or departs from the training aggregate, an abnormality is declared. This simple strategy leads to remarkably robust results.
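The idea of comparing per-pixel aggregates of motion labels can be illustrated with a minimal Python sketch. It is not the authors' implementation: the thresholded frame difference used for motion labels, the sliding-window sum used as the aggregate, the per-pixel maximum over training used as the reference, and all function and parameter names (`motion_labels`, `window_activity`, `train_behavior_image`, `detect_anomalies`, `tau`, `window`, `margin`) are assumptions chosen for illustration. The sketch covers only the "exceeds" case (abnormally high activity), not other forms of departure from typical activity.

```python
import numpy as np

def motion_labels(frames, background, tau=25):
    """Binary motion labels from a simple background subtraction.

    Assumption: a fixed background image and a fixed threshold `tau`;
    frames has shape (T, H, W), background has shape (H, W).
    """
    diff = np.abs(frames.astype(np.int32) - background.astype(np.int32))
    return (diff > tau).astype(np.uint8)

def window_activity(labels, window):
    """Per-pixel sum of motion labels over every run of `window` frames.

    Returns an array of shape (T - window + 1, H, W).
    """
    csum = np.cumsum(labels.astype(np.int32), axis=0)
    # Prepend a zero frame so that differences give windowed sums.
    csum = np.concatenate([np.zeros_like(csum[:1]), csum], axis=0)
    return csum[window:] - csum[:-window]

def train_behavior_image(train_labels, window):
    """Reference aggregate: the highest windowed activity seen in training.

    Assumption: the per-pixel maximum is used as the training aggregate.
    """
    return window_activity(train_labels, window).max(axis=0)

def detect_anomalies(obs_labels, behavior_image, window, margin=0):
    """Flag pixels whose observed activity exceeds the training aggregate."""
    activity = window_activity(obs_labels, window)
    return activity > behavior_image + margin

# Synthetic example: pixel (0, 0) is routinely active, pixel (1, 1) is not.
train = np.zeros((20, 2, 2), dtype=np.uint8)
train[::2, 0, 0] = 1                     # motion in every other frame
behavior = train_behavior_image(train, window=4)

observed = np.zeros((10, 2, 2), dtype=np.uint8)
observed[::2, 0, 0] = 1                  # same routine activity as in training
observed[3:, 1, 1] = 1                   # new, sustained motion at a quiet pixel
anomalies = detect_anomalies(observed, behavior, window=4)
```

In this toy setup the routinely active pixel is never flagged, because its observed activity matches what was seen in training, while the normally quiet pixel is flagged as soon as motion appears there. Only a single reference image the size of one frame has to be stored after training, which is the kind of compact per-pixel representation the description refers to.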

Results: The developed algorithm, called behavior subtraction, has been tested on a wide variety of video sequences, from highly cluttered traffic scenes captured by vibrating cameras (see image above) to notoriously difficult boats-on-a-river scenes, where specular light reflections off the water surface are hard to account for. Not only is the algorithm effective at detecting anomalous dynamics in the scene, but it is also efficient: it runs on relatively low-power computing hardware, and its memory footprint is minimal compared to state-of-the-art algorithms. Both characteristics are very desirable for so-called edge implementation, i.e., implementation in the camera instead of on a central server.

Find more information and results of behavior subtraction at http://vip.bu.edu/projects/vsns/behavior-subtraction/

Publications:

- J. McHugh, “Probabilistic methods for adaptive background subtraction,” Master’s thesis, Boston University, Jan. 2008.

- P.-M. Jodoin, V. Saligrama, and J. Konrad, “Behavior subtraction,” in Proc. SPIE Visual Communications and Image Process., vol. 6822, pp. 10.1-10.12, Jan. 2008.

- J. McHugh, J. Konrad, V. Saligrama, and P.-M. Jodoin, “Foreground-adaptive background subtraction,” IEEE Signal Process. Lett., vol. 16, pp. 390-393, May 2009.

- V. Saligrama, J. Konrad, and P.-M. Jodoin, “Video anomaly detection: A statistical approach,” IEEE Signal Process. Mag., vol. 27, pp. 18-33, Sept. 2010.

- P.-M. Jodoin, V. Saligrama, and J. Konrad, “Behavior subtraction,” IEEE Trans. Image Process., vol. 21, pp. 4244-4255, Sept. 2012.

Website: vip.bu.edu