CS Professors Receive $2 Million DARPA Grant to Battle Automated Misinformation in the News

By Keziah Zimmerman

CS Professors Bryan Plummer and Kate Saenko, in collaboration with researchers from UC Berkeley, the University of Washington, and UC Davis, have received $2 million in funding from the Defense Advanced Research Projects Agency (DARPA). The grant supports a project that aims to battle automated misinformation in the news. BU CS spoke with Professor Bryan Plummer to gain insight into the objectives and challenges of this upcoming research.

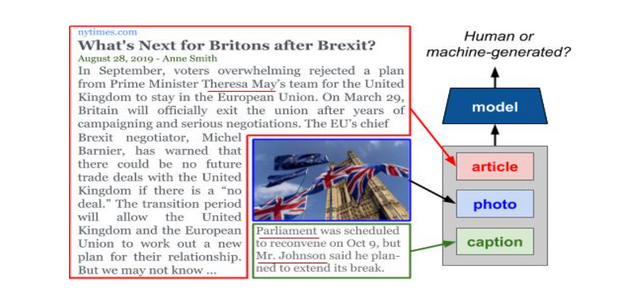

“One of our goals is to automatically detect misinformation in the news,” said Plummer. “Powerful AI agents have recently been developed that can automatically generate text and images that are difficult to identify as machine-generated. Since these AI agents are fairly easy to use, there are significant concerns that they could be used to spread misinformation.”

Citing a Facebook competition aimed at detecting machine-generated images, Plummer emphasized the relevance of such research to addressing misinformation in the news.

“Professor Saenko and I have already started to investigate AI misinformation in some of our recent work,” said Plummer. “With our graduate student, Reuben Tan (GRS’23), we created machine-generated news articles using state-of-the-art language generation methods, and our goal was then to distinguish the real news articles from the fake ones. We designed an algorithm that tries to find a mismatch between a news article and any accompanying images and captions, which significantly improved the performance of our model.”

In addition to this new research project, both professors have previously worked with DARPA on projects focused on explainable artificial intelligence and learning with fewer labels. To win the current grant, Plummer and Saenko teamed up with researchers at other institutions on their proposal.

“Rapid advances in AI and machine learning have led to the ability to synthesize images of people who don’t exist, videos of people doing things they never did, recordings of them saying things they never said, and entirely fabricated news stories about events that never happened,” said Plummer, summarizing the research proposal. “While many earlier forensic techniques have proven effective at combating more traditional falsified content, new approaches are required to combat this new type of AI-synthesized content.”

One of the biggest challenges the researchers expect to face is the ever-changing nature of the AI models that produce machine-manipulated media. Plummer explained that this is an obstacle because methods that identify manipulated media will most likely stop working once the underlying AI model changes.

“It is especially difficult to detect content from methods that selectively manipulate an image or news article to change its intent,” said Plummer. “Since most of the content is from the original source, you have to find anomalies caused by the local changes that were made.”

When asked about the project’s goals, Plummer noted that the group has multiple objectives, but he is particularly interested in learning how to automatically adapt models to identify content produced by new AI models, and then to discern the intent behind the manipulation. This would allow the researchers to interpret the overall goal of this sort of AI manipulation.

With this new award and project taking shape, BU CS students will, no doubt, be curious about how they could follow a similar path. When asked for advice for those looking to get into research, Plummer emphasized that while there are many choices, a focused approach helped him along his path.

“There are a lot of choices you can make in your academic career,” said Plummer. “Deciding on what to do, having a clear idea of what you want to see happen, and fitting that into your long-term plans can make it easier to decide what to spend your time on. This helped keep me focused on my goals.”

For more information about the work Professors Plummer and Saenko are conducting with Reuben Tan, visit the research page: https://cs-people.bu.edu/rxtan/projects/didan/