Revolutionizing American Sign Language Tools

Professor Carol Neidle leads the American Sign Language Linguistic Research Project

American Sign Language is the primary means of communication for over 500,000 people in the United States, but because ASL lacks a written form, there is no good way to look up a sign in a dictionary.

Most American Sign Language dictionaries are organized by English translation, so users can only look up words if they know what the sign means and if they know the likely English translation of that sign. Some ASL dictionaries allow for searches based on articulatory properties — such as handshape, location, orientation, and movement type — but require signers to search through hundreds of pictures to find the sign they are looking for, if the sign is even present in that dictionary.

Professor of Linguistics Carol Neidle is hoping to change that. Neidle has spent more than 30 years in collaborative research with computer scientists to advance linguistic and computer science research on American Sign Language (ASL), including sign language recognition, and to develop resources that can help those who use ASL to communicate. She has received continuous National Science Foundation funding since 1994 for this research.

“The linguistic study of signed languages is less advanced than that of spoken languages,” said Neidle, who is retiring this summer from the Department of Linguistics. “There is still much left to be learned.”

Neidle was hired at BU in the early 1980s as a professor of French and French linguistics in the Department of Modern Foreign Languages and Literatures. She founded the linguistics program in the mid-1980s (it became a full-fledged department in 2018). She became interested in ASL in the early 1990s through one of her graduate students, Benjamin Bahan, a member of the Deaf community who also directed the ASL program in the School of Education (now Wheelock College of Education & Human Development).

A linguist fluent in French whose dissertation focused on Russian syntax, Neidle was curious about the grammatical structure of ASL, which only started to be recognized as a full-fledged language in the 1960s and therefore had not been well studied or documented in the linguistic literature. “As a linguist, I knew ASL was a language. That was all I knew about it,” she said. “I got interested, and a number of students in the program got interested. Working with Ben was a great opportunity to try to discover what was going on with the language.”

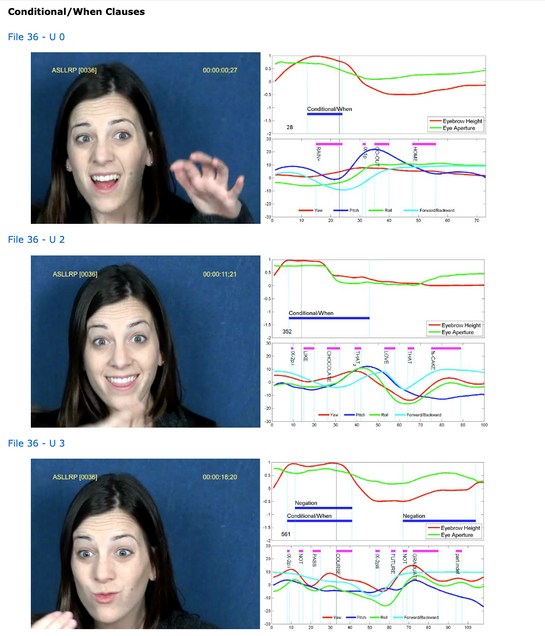

Rather than being articulated with the vocal tract and perceived auditorily as spoken languages are, signed languages like ASL are articulated with the hands and arms, as well as by facial expressions and head gestures, and perceived visually. A particular sign may have multiple translations in English, and a particular English word may be translated by multiple ASL signs, depending on the context and intended meaning.

The first problem Neidle faced was how to study a language that doesn’t have a written form. She and her collaborators decided to create tools so they could study this language. They teamed up with computer scientists — including faculty members at Rutgers as well as Computer Science Professor Stan Sclaroff, now dean of Arts & Sciences — and, with funding from the NSF, started collecting high-quality video data.

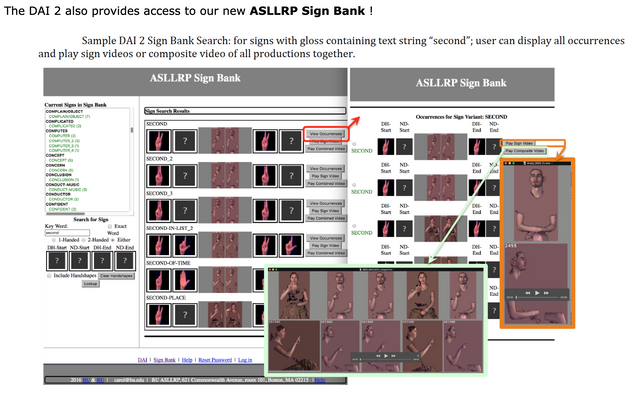

They then developed a linguistic annotation tool for visual language data, called SignStream®, which enabled them to build a large and expanding collection of linguistically annotated video data. Since then, Neidle and her colleagues have been developing a sign bank with many examples of isolated ASL signs and of signs as used in ASL sentences.

Neidle and her collaborators (currently including faculty members at Rutgers University, RIT, and Gallaudet University) are now at a point where they can begin to use their research accomplishments and data to develop real-world applications, such as lookup tools for dictionaries. They have developed a prototype of a tool that will allow users to upload a video clip of an unknown ASL sign. The tool analyzes the file and, using the team's sign recognition technology, offers the user the five most likely matches from the sign bank.
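To make the lookup idea concrete, here is a minimal sketch of how a "top five matches" step could work in principle. It is not the project's actual method, which the article does not describe. The sketch assumes that each sign in the bank, and the uploaded clip, has already been reduced to a numeric feature vector by some recognition model; the names `cosine_similarity`, `top_matches`, the example glosses, and the vectors are all hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_matches(
    query: Sequence[float],
    sign_bank: Dict[str, Sequence[float]],
    k: int = 5,
) -> List[Tuple[str, float]]:
    """Rank every sign in the bank by similarity to the query and keep the best k."""
    scored = [
        (gloss, cosine_similarity(query, features))
        for gloss, features in sign_bank.items()
    ]
    # Highest similarity first.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical sign bank: gloss -> feature vector (assumed to come from a recognition model).
SIGN_BANK = {
    "BOOK": [0.9, 0.1, 0.0],
    "READ": [0.7, 0.3, 0.1],
    "HOUSE": [0.1, 0.9, 0.2],
    "TREE": [0.0, 0.2, 0.9],
    "WATER": [0.3, 0.3, 0.3],
    "SCHOOL": [0.6, 0.5, 0.0],
}

if __name__ == "__main__":
    # Hypothetical features extracted from an uploaded clip of an unknown sign.
    unknown_sign = [0.85, 0.15, 0.05]
    for gloss, score in top_matches(unknown_sign, SIGN_BANK):
        print(f"{gloss}: {score:.3f}")
```

Returning a short ranked list rather than a single answer matches the prototype's behavior as described: the user, who has seen the sign, makes the final choice among the candidates.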

They are also working on developing video anonymization. Because critical information is expressed on the hands and face, ASL videos necessarily reveal the identity of the signer. But there are many cases where ASL signers would like to remain anonymous — for instance, for submitting a sensitive question on the Internet about a medical issue. The goal of the anonymization tool that is under development is to transform videos in such a way as to preserve the critical linguistic information while disguising the identity of the signer.

“Spoken language users can achieve this by just sending text. Nobody knows who wrote it,” Neidle said. “But to communicate through an ASL video is more complicated. ASL doesn’t have a written form. Signers could communicate in English (the second language for many Deaf signers), but they want the freedom to communicate in their own language.”

Neidle said that this technology will benefit both deaf and hearing individuals. For example, 90 percent of deaf children are born to hearing parents. Even if the parents don’t become perfect signers, an entire family could benefit if parents had an easy way to look up ASL signs in a dictionary by submitting a video of an unfamiliar sign, she said.

“We now have a lot of data and online resources that we’re sharing with the research community and ASL signers, and we’re using these resources to advance our own research,” she said. “Our annotation software for visual language data, which will incorporate sign video lookup, also has the potential to revolutionize the process of sign language annotation.”

Neidle will retire officially on July 1, but she is excited about continuing this collaborative research beyond that date as a Professor Emerita. Neidle said, “It has been a real revelation to me personally to come to understand the profound analogies between spoken and signed languages, despite some modality-specific differences. It’s been fun. It’s been a real discovery.”