Virtual Humans Becoming Reality

Don’t be looking for the Terminator in this battle for entertainment.

Virtual Humans are pushing the limits with the latest artificial intelligence technology.

“Would you like to play a game?” asked Joshua, an early conception of artificial intelligence (AI) technology depicted in the 1983 hit film, WarGames. Despite the machine’s simplicity and its monotonous and mechanical voice, Joshua aptly describes one of the leading motivations behind AI research and development: the gaming industry. But where is this industry taking us in terms of new experiences and what is on the horizon?

Gunslinger

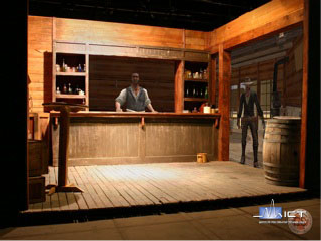

The ‘Gunslinger’ mixed reality interaction. Shown above is the integration of two kinetic Virtual Humans in a static environment.

Ever wonder, “What if time travel were reality?” Good news: we’re getting closer. Not the Back to the Future ‘flux capacitor’ kind of time travel (sorry, Doc Brown), but real experiences with real characters – well, almost real.

Imagine stepping through a pair of swinging doors that could take you to another place and time – one minute you are standing in a dark warehouse, and the next, you are standing on the sandy floor of an old western saloon. The tinkling of an upright piano and the clinking of glasses play in the background, while the sounds of horses and coaches are heard outside. “Howdy Ranger,” says a man from across the bar. Confused, you take a step back. You look down, realizing that you are wearing a holster with a six-shot revolver, and a conspicuous metal star with the words “U.S. Ranger” on your chest. While you try to make sense of the situation, the man continues on, “You here to rid our town of that evil bandit?” Several hopeful eyes turn to you, waiting expectantly for an answer.

Welcome to the Gunslinger, a flagship project that combines several technologies across a range of disciplines to provide a sneak peek into one possible future of entertainment experiences. Designed and developed at the Institute for Creative Technologies at the University of Southern California, Gunslinger is the result of a unique collaboration that brings together cutting-edge research, gaming technology, and creative Hollywood storytelling and set building. Gunslinger focuses on the immersive aspect of entertainment, allowing a user to interact with Virtual Humans (VHs) through the creation of a virtual reality. Gunslinger goes beyond traditional gaming and gives the participant the opportunity to interact directly with VHs, independent of a controller or display, and to experience AI technology in a new way. VHs are artificial characters who look and act like humans but inhabit a simulated environment. They can respond to questions, ask a few of their own, and figure out when they are being ignored. Some even duck when shots are fired in their direction.1

The ‘Virtual’ Nuts and Bolts

So how does the technology work? What allows a VH to evolve during a conversation, for example, when it tries to convince you to help bring safety to the town? A virtual reality system can be broken down into two components: a physical component and a virtual component. Integrating these two components seamlessly is often very difficult, but it ultimately determines whether a user of Gunslinger buys into the illusion.2

The physical component of a VH can be thought of as (1) its visible image and animations on a screen, and (2) the phrases it uses to communicate with the participant. Typically, the screens are built into the physical set and positioned so that the static background on the screen closely matches its physical surroundings, providing continuity between the two. This allows the VH to use a variety of graphics and animations to portray a lifelike and believable appearance. Much like our own body language, VH animations are separated into stationary motions that are looped when a character is not speaking, and conversational motions that add information and emotion during interactions. When the VH speaks to the user or to another VH, its voice is played through speakers placed strategically throughout the set. As a result of these animations, a VH is always kinetic: when speaking, it conveys information through hand gestures and body motions, and even when not engaged in conversation, it performs chores such as cleaning tables and pouring drinks.

While the physical manifestation of the VH is where the Hollywood “smoke and mirrors” happen, the behind-the-scenes technology makes it possible. The virtual component of the system closely resembles our own biological information processing systems. Cutting-edge visual and auditory recognition programs function as the ‘eyes and ears’ of the system. They acquire detailed information during the user’s interaction with the VH, bridging the gap between the two. The inputs are then processed by software that makes decisions much as our brain would: a statistical text classifier called NPCEditor. It uses a number of algorithms to determine the next response and motion of the VH based on a number of possible conditions that evolve over the course of the interaction.3 Once the response is determined, one final program syncs the audio file and animations, resulting in the physical embodiment of the VH response. The combination of artificial intelligence, motion detection, and speech recognition technologies with dynamic animations and speech capabilities results in an unmatched, interactive entertainment experience.
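For readers who like to see the idea in code, here is a minimal sketch of the "pick the next response and motion" step. It is not NPCEditor's actual method: it swaps in a plain bag-of-words cosine similarity, and the response table, the animation names, the `select_response` function, and the 0.3 threshold are all hypothetical, invented for illustration. It also ignores the evolving conversation state described above and scores each utterance in isolation.

```python
import math
import re
from collections import Counter

# Hypothetical response table (not taken from Gunslinger): each entry pairs
# example user phrasings with a scripted line and an animation name.
RESPONSES = [
    {
        "examples": ["yes I will help", "I am here to catch the bandit"],
        "line": "Much obliged, Ranger.",
        "animation": "nod",
    },
    {
        "examples": ["where is the bandit", "where can I find him"],
        "line": "He was last seen by the stables.",
        "animation": "point",
    },
]

# Used when nothing matches well enough (also hypothetical).
FALLBACK = {"line": "Come again, Ranger?", "animation": "shrug"}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_response(utterance, threshold=0.3):
    """Return the entry whose example phrasing best matches the utterance,
    or FALLBACK when the best score is below the (assumed) threshold."""
    query = Counter(tokenize(utterance))
    best_score, best_entry = 0.0, FALLBACK
    for entry in RESPONSES:
        for example in entry["examples"]:
            score = cosine(query, Counter(tokenize(example)))
            if score > best_score:
                best_score, best_entry = score, entry
    return best_entry if best_score >= threshold else FALLBACK


if __name__ == "__main__":
    choice = select_response("Where is that bandit?")
    print(choice["line"], "|", choice["animation"])
```

In a full system, the recognized speech would feed `select_response`, and the returned line and animation name would be handed to the program that syncs audio with motion. The fallback entry plays the role of the character noticing it did not understand.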

What’s Next?

While the Gunslinger project is leading the way in innovative and immersive experiences, the applications of this technology outside the entertainment industry are growing just as quickly. Similar VH-based projects are beginning to appear across other fields ranging from military applications to museum tour guides.

SGT Star, one example of virtual human technology – currently being used by the U.S. Army.

Meet SGT Star, for example, the official virtual guide to the U.S. Army. This VH, which can be found at goarmy.com, is one of the most knowledgeable and convenient public relations agents yet. He can answer all your questions about the Army in an informal conversation that does not require you to leave your desk.

Virtual Human tour guides, currently part of the “Science Behind Virtual Humans” exhibit at the Museum of Science, Boston.

If you happen to visit the Museum of Science in Boston, Massachusetts, you might run into Ada and Grace, two more VHs that serve as virtual tour guides. These 19-year-old twins are the center of the “Science Behind Virtual Humans” exhibit, and they educate visitors through face-to-face interactions on the technology that drives them. They can suggest other exhibits to visit or answer questions about VH research and development. They are among the most advanced VHs to be implemented in interactive, public environments, using the same speech recognition and natural language processing technologies as the Gunslinger project.

Whether VHs are inviting you to dust off your boots and have a drink in an old western saloon, or explaining the benefits of a career in the Army, the technology behind them is on the rise. While the current projects have produced VH characters that respond directly to input, future projects could explore new technologies that let VHs understand or maintain a dynamic model of the world around them. Virtual Humans provide the opportunity to create unparalleled immersive experiences and to simulate engaging and interactive environments, which are limited only by the restrictions that we place on them.

References

1. Traum D. Institute for Creative Technologies, University of Southern California. “Talking to Virtual Humans: Dialogue Models and Methodologies for Embodied Conversational Agents.” Modeling Communication with Robots and Virtual Humans, pp. 296-309. 2006.

2. Kenny P, Hartholt A, Gratch J, Swartout W, Traum D, Marsella S, Piepol D. Institute for Creative Technologies, University of Southern California. “Building Interactive Virtual Humans for Training Environments.” Interservice/Industry Training, Simulation, and Education Conference (I/ITSEC). 2007.

3. Leuski A, Traum D. Institute for Creative Technologies, University of Southern California. “A Statistical Approach for Text Processing in Virtual Humans.” Army Science Conference (Orlando, FL, December 1-4, 2008).