Performance

MPI-parallel code

Strong and weak scaling on CPUs

On multi-core CPU systems (the Kraken supercomputer), strong scaling with 10⁸ particles on 2,048 processes achieved:

- 93% parallel efficiency for the non-SIMD code, and

- 54% for the SIMD-optimized version (which is 2x faster).
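A short worked calculation may help reconcile "lower efficiency" with "faster". It uses the conventional strong-scaling definition of parallel efficiency, where T_1 is the single-process time and T_p the time on p processes. It also assumes, as our reading rather than something stated above, that "2x faster" compares the two versions at 2,048 processes:

```latex
E_p = \frac{T_1}{p\,T_p} \quad\Longrightarrow\quad T_p = \frac{T_1}{p\,E_p}

\frac{T_{2048}^{\mathrm{nonSIMD}}}{T_{2048}^{\mathrm{SIMD}}}
  = \frac{T_1^{\mathrm{nonSIMD}}}{T_1^{\mathrm{SIMD}}}\cdot\frac{E^{\mathrm{SIMD}}}{E^{\mathrm{nonSIMD}}} = 2
\quad\Longrightarrow\quad
\frac{T_1^{\mathrm{nonSIMD}}}{T_1^{\mathrm{SIMD}}} = 2\cdot\frac{0.93}{0.54} \approx 3.4
```

Under that assumption, the SIMD version would be roughly 3.4 times faster on a single process. Its lower efficiency at 2,048 processes records how much of that advantage is eroded at scale, not a slower code.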

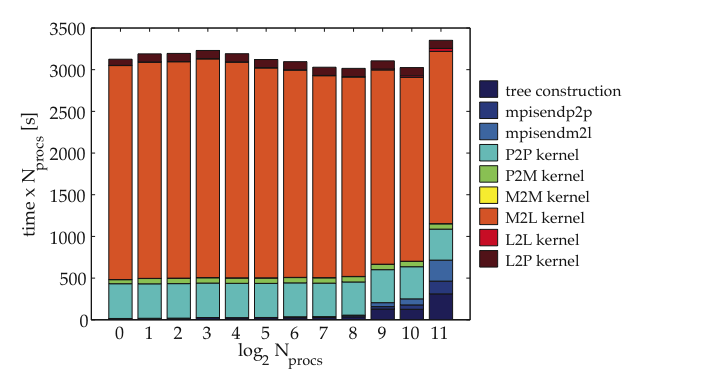

The plot in Figure 3 shows MPI strong scaling from 1 to 2,048 processes, with a timing breakdown of the individual kernels, tree construction, and communication. Test problem: N = 10⁸ points placed at random in a cube; FMM of order p = 3. Calculation time is multiplied by the number of processes, so equal bar heights would indicate perfect scaling.

Figure 3: MPI strong scaling from 1 to 2,048 processes, with a timing breakdown of the individual kernels, tree construction, and communication. Parallel efficiency is 93% on 2,048 processes. © 2011 R Yokota, L Barba.
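The normalization used in Figure 3 (time multiplied by process count) ties directly to parallel efficiency: the efficiency at p processes is the first bar height divided by the p-th bar height. The sketch below computes both from a table of timings. The function names and the timing values are hypothetical placeholders, not measurements from Kraken or from the figure.

```python
# Strong-scaling bookkeeping (sketch). The timings below are made-up
# placeholders, not measurements from Kraken or from Figure 3.

def normalized_times(timings):
    """Bar heights as in a Figure-3-style chart: p * T_p for each p.

    Equal heights for every p would mean perfect strong scaling.
    """
    return {p: p * t for p, t in sorted(timings.items())}


def strong_scaling_efficiency(timings):
    """Parallel efficiency E_p = T_1 / (p * T_p) relative to the 1-process run.

    Equivalently, the first bar height divided by the p-th bar height.
    """
    t1 = timings[1]
    return {p: t1 / (p * t) for p, t in sorted(timings.items())}


if __name__ == "__main__":
    # Hypothetical wall-clock times in seconds, keyed by process count.
    timings = {1: 1000.0, 64: 16.0, 2048: 0.625}
    heights = normalized_times(timings)
    eff = strong_scaling_efficiency(timings)
    for p in sorted(timings):
        print(f"p={p:5d}  p*T_p={heights[p]:8.1f}  E_p={eff[p]:.3f}")
```

A rising bar height means lost efficiency: here the 2,048-process bar is 1.28 times the single-process bar, which corresponds to an efficiency of about 78%.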

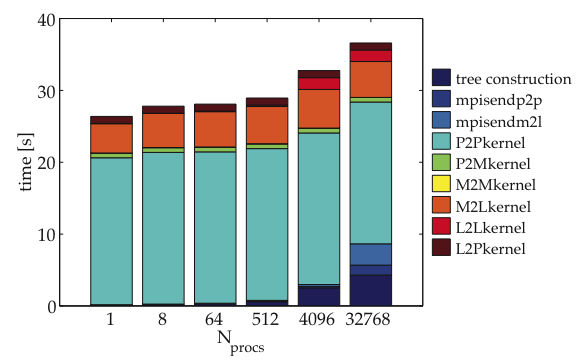

Weak scaling with 10⁶ particles per process achieved 72% efficiency on 32,768 processes of the Kraken supercomputer. The plot in Figure 4 shows MPI weak scaling with SIMD optimizations from 1 to 32,768 processes, with a timing breakdown of the individual kernels, tree construction, and communication. Test problem: N = 10⁶ points per process placed at random in a cube; FMM of order p = 3.

Figure 4: MPI weak scaling with SIMD optimizations from 1 to 32,768 processes. Parallel efficiency is 72% on 32,768 processes. © 2011 R Yokota, L Barba.
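In a weak-scaling test the per-process workload is fixed, so the global problem grows with the number of processes. The sketch below generates a test problem of the kind described (points placed uniformly at random in a cube) for a single rank. The helper names and the per-rank seeding scheme (base seed plus rank) are illustrative assumptions, not ExaFMM's actual setup code, and the later redistribution of points by domain decomposition is not shown.

```python
import random

# Sketch of a weak-scaling test problem: every process contributes a fixed
# number of points drawn uniformly from the same unit cube, so the global
# problem size grows linearly with the number of processes. Seeding each
# rank with (base_seed + rank) is an illustrative choice, not ExaFMM's.

def random_points_in_cube(n, seed=None, side=1.0):
    """Return n points placed uniformly at random in [0, side)^3."""
    rng = random.Random(seed)
    return [(rng.random() * side, rng.random() * side, rng.random() * side)
            for _ in range(n)]


def local_points(n_per_process, rank, base_seed=0):
    """Points generated by one rank; different seeds give different streams."""
    return random_points_in_cube(n_per_process, seed=base_seed + rank)


def global_problem_size(n_per_process, num_processes):
    """Total N in a weak-scaling run: N = n_per_process * num_processes."""
    return n_per_process * num_processes


if __name__ == "__main__":
    # Small per-rank count so the demo runs quickly in pure Python.
    pts = local_points(n_per_process=1000, rank=3)
    print(len(pts), min(min(p) for p in pts), max(max(p) for p in pts))
    # With 10**6 points per process on 32,768 processes:
    print(global_problem_size(10**6, 32768))  # 32768000000
```

By this arithmetic, the 32,768-process run with 10⁶ points per process corresponds to about 3.3 × 10¹⁰ points in total.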

The results above are detailed in the following publication:

Rio Yokota and Lorena A. Barba, “A tuned and scalable fast multipole method as a preeminent algorithm for exascale systems”, Int. J. High Perform. Comput. Appl., published online 24 Jan. 2012, doi:10.1177/1094342011429952. Preprint: arXiv:1106.2176

Weak scaling on GPU systems

The ExaFMM code scales very well to thousands of GPUs. We studied its scalability on the Tsubame 2.0 supercomputer at the Tokyo Institute of Technology (thanks to guest access). A timing breakdown on up to 2,048 processes shows that communication time is minor.

In a weak scaling test on Tsubame 2.0, ExaFMM (with GPUs) achieved a parallel efficiency above 70% on 4,096 processes. A similar test of a parallel FFT (without GPUs) showed a dramatic drop in efficiency at this process count.

Figure 6: Parallel efficiency of the FMM in a weak scaling test on Tsubame 2.0 (with GPUs), and of a parallel FFT (without GPUs), on up to 4,096 processes.

Cite this figure:

Weak scaling of parallel FMM vs. FFT up to 4096 processes. Lorena Barba, Rio Yokota. Figshare.

http://dx.doi.org/10.6084/m9.figshare.92425
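The weak-scaling efficiencies quoted for Kraken (72% on 32,768 processes) and Tsubame 2.0 (above 70% on 4,096 processes) use a different yardstick from strong scaling: ideally the runtime stays flat as processes are added, so efficiency is simply the single-process time divided by the p-process time. The sketch below computes this and finds the largest tested process count that meets a given threshold. The function names and timings are hypothetical and are not read from Figure 6.

```python
# Weak-scaling efficiency (sketch). Ideal weak scaling keeps the runtime
# flat as processes are added, so E_p = T_1 / T_p. Timings are hypothetical.

def weak_scaling_efficiency(timings):
    """Map process count p -> T_1 / T_p, relative to the 1-process run."""
    t1 = timings[1]
    return {p: t1 / t for p, t in sorted(timings.items())}


def largest_p_meeting(timings, threshold):
    """Largest tested process count whose efficiency is at least threshold.

    Returns None if no tested count qualifies.
    """
    eff = weak_scaling_efficiency(timings)
    qualifying = [p for p, e in eff.items() if e >= threshold]
    return max(qualifying) if qualifying else None


if __name__ == "__main__":
    timings = {1: 10.0, 512: 12.5, 4096: 14.0}  # seconds, made up
    for p, e in weak_scaling_efficiency(timings).items():
        print(f"p={p:5d}  E_p={e:.3f}")
    print(largest_p_meeting(timings, 0.70))  # 4096
```

Because efficiency need not fall monotonically with p, the helper checks every tested count rather than stopping at the first one below the threshold.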