Unlocking Emily’s World

Cracking the code of silence in children with autism who barely speak

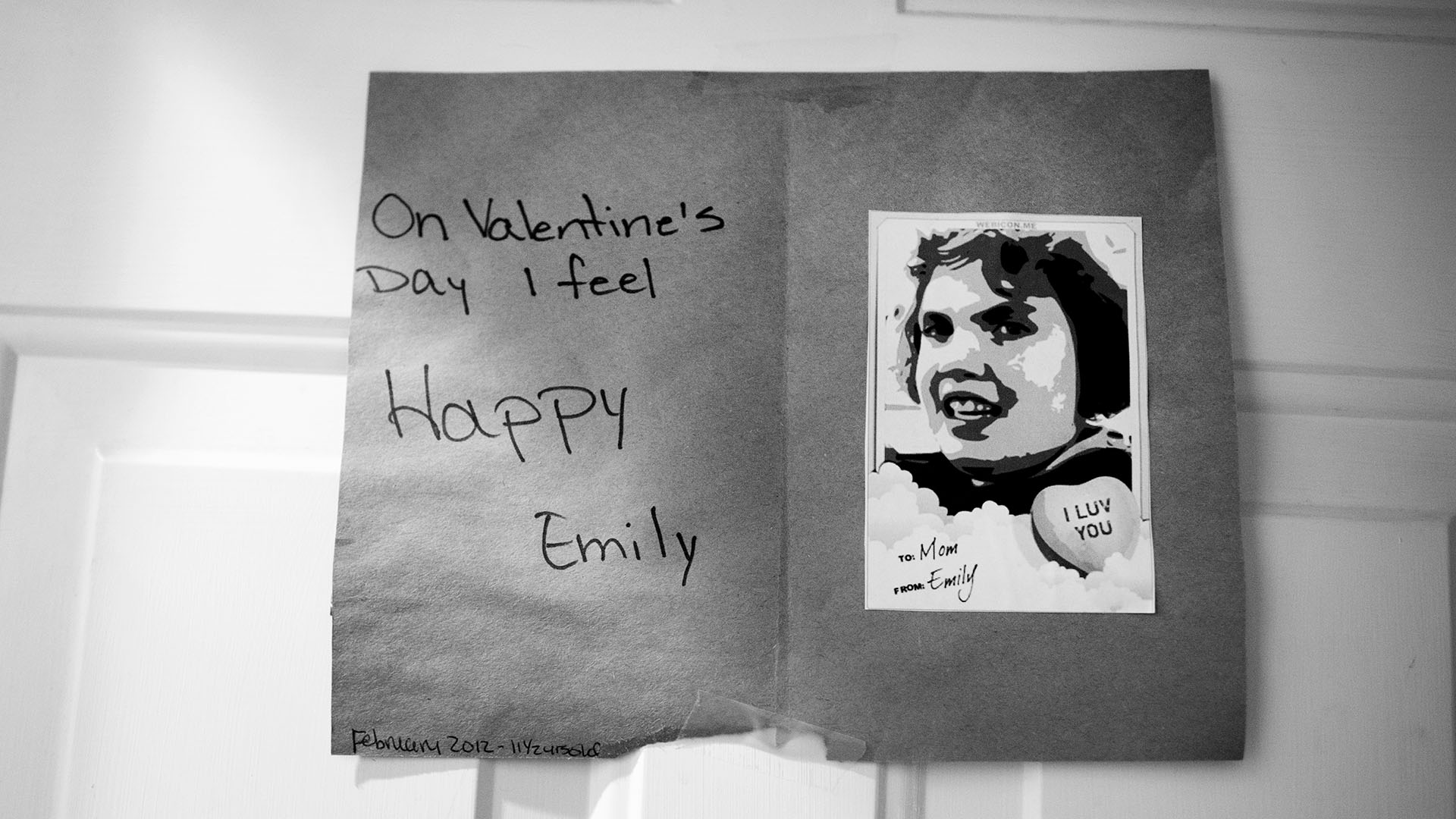

Emily Browne is laughing, and nobody really knows why. The 14-year-old with a broad face and a mop of curly brown hair has autism. She drifts through her backyard in Boston’s Dorchester neighborhood, either staring into the distance or eyeballing a visitor chatting with her dad, Brendan, and her 15-year-old sister, Jennifer, on the nearby patio. That’s where the laughter started—a conversational chuckle from somebody on the patio that Emily answered with a rollicking, high-pitched guffaw. Then another, and another, and another.

Emily can’t join the conversation. She is among the 30 percent of children with autism who never learn to speak more than a few words—those considered “nonverbal” or “minimally verbal.” Emily was diagnosed with autism at two, but Brendan and his wife, Jeannie, knew something was wrong well before then. “There was no babbling. She didn’t play with anything. You could be standing beside her and call her name, and she wouldn’t look at you,” says her dad. “Emily was in her own little world.”

“We were worried about Emily from pretty much her 12-month checkup….and we had talked with the doctor about the fact that she didn’t make any sounds. She didn’t really pay attention to anybody, which seemed a little unusual.”

But why? What is it about the brains of “minimally verbal” kids like Emily that short circuits the connections between them and everyone else? And can it be overcome? That’s the research mission of Boston University’s new Center for Autism Research Excellence, where Emily is a study subject.

Partly because of the expanding parameters of what is considered autism, the number of American children diagnosed with autism spectrum disorder has shot up in recent years, from one in 155 children in 1992 to one in 68 in 2014, according to the Centers for Disease Control and Prevention. And Helen Tager-Flusberg, a BU College of Arts & Sciences professor of psychology who has studied language acquisition and autism for three decades and heads the Autism Center, says minimally verbal children are among the most “seriously understudied” of that growing population.

Backed by a five-year, $10 million grant from the National Institutes of Health awarded in late 2012, her team includes researchers and clinicians from Massachusetts General Hospital, Harvard Medical School, Beth Israel Deaconess Medical Center, Northeastern University, and Albert Einstein College of Medicine in New York City. The researchers are focusing on the areas of the brain used for understanding speech, the motor areas activated to produce speech, and the connections between the two. They’ll combine functional magnetic resonance imaging (fMRI), electroencephalography (EEG), and neural models of how brains understand and make speech. The models were developed at BU by Barbara Shinn-Cunningham, a professor of biomedical engineering in the College of Engineering, and Frank Guenther, a professor of speech, language, and hearing sciences at BU’s Sargent College of Health & Rehabilitation Sciences. They’ll also run the first clinical trials of a novel therapy using music and drumming to help minimally verbal children acquire spoken language.

Ultimately, Tager-Flusberg and her colleagues hope to crack the code of silence in the brains of minimally verbal children and give them back their own voices. Getting these kids to utter complete sentences and fully participate in conversation is years away. For now, the goal is to teach words and phrases in a way that can rewire the brain for speech and allow more traditional speech therapy to take hold.

“Imagine if you were stuck in a place where you could not express anything and people were not understanding you,” says Tager-Flusberg, who is also a professor of anatomy and neurobiology and of pediatrics on Boston University’s Medical Campus. “Can you imagine how distressing and frustrating that would be?”

On a hot, muggy morning in late August, Emily’s dad escorts her into the Autism Center on Cummington Mall for a couple of hours of tests. It’s part of a sound-processing study comparing minimally verbal adolescents with high-functioning autistic adolescents who can speak, as well as typically developing adolescents and adults.

The investigation is painstaking, because every study must be adapted for subjects who not only don’t speak but may also be prone to easy distraction, extreme anxiety, aggressive outbursts, and even running away. “[Minimally verbal children] do tend to understand more than they can speak,” says Tager-Flusberg. “But they won’t necessarily demonstrate in any situation that they are following what you are saying.”

“The study at BU especially was interesting to us because it focused on the kind of autism that Emily has….I know autistic children can behave a certain way—they can be antisocial and so forth—but no one seemed to be addressing the fact that some of these kids can’t communicate.”

That’s obvious in Emily’s first task, a vocabulary test. Seated before a computer, she watches as pictures of everyday items pop up on the screen, such as a toothbrush, a shirt, a car, and a shoe. When a computer-generated voice names one of these objects, Emily’s job is to tap the correct picture. Emily’s earlier pilot testing of this study showed that she understands more than 100 words. But today, she’s just not interested. Between short flurries of correct answers, Emily weaves her head, slumps in her chair, or flaps her elbows as the computer voice drones on—car…car…car and then umbrella…umbrella…umbrella. When one of the researchers tries to get Emily back on task, she simply taps the same spot on the screen over and over. Finally, she gives the screen a hard smack.

The next session is smoother. Emily is given a kind of IQ test in which she quickly and (mostly) correctly matches shapes and colors, identifies patterns, and points out single items within increasingly complicated pictures of animals playing in the park, kids at a picnic, or cluttered yard sales.

Emily is minimally verbal, not nonverbal. “Words do come out of her,” her dad explains. She’ll say “car” when she wants to go for a ride or “home” when she’s out somewhere and has had enough. Sometimes she communicates with a combination of sounds and signs or gestures, because she has trouble saying words with multiple syllables. For instance, when she needs a “bathroom,” her version sounds like “ba ba um,” but she combines it with a closed hand tilting 90 degrees, pantomiming a toilet flush.

“That’s a handy one,” her dad says. “She uses it to get out of things. When she’s someplace she doesn’t want to be, she’ll ask to go to the bathroom five or six times.”

The first word Emily ever said was “apple” when she was four years old. “We were going through the supermarket, and she grabbed an apple. Said it, and ate it. It was amazing to me,” her dad recalls.

The final item on the morning agenda is an EEG study, in which Emily must wear a net of moist electrodes fitted over her head while she listens to a series of beeps in a small, soundproof booth. The researchers have tried EEG with Emily twice before in pilot testing. The first time, she tolerated the electrode net. The second time, she refused. This time, with her dad to comfort her and a rewarding snack of gummi bears, Emily dons the electrode net without protest.

The point of this study is to see how well Emily’s brain distinguishes differences in sound—a key to understanding speech. For instance, normally developing children learn very early, well before they can speak, to separate out somebody talking from the birds chirping outside the window or an airplane overhead. They also learn to pay attention to deviations in speech that matter—the word “cat” versus “cap”—and to ignore those that don’t—cat is cat whether mommy or daddy says it.

“The brain filters out what’s important based on what it learns,” says Shinn-Cunningham. Some of this sound filtering is automatic, what brain researchers call “subcortical.” The rest is more complicated, a top-down process of organizing sounds and focusing the brain’s limited attention and processing power on what’s important.

EEG measures electrical fields generated by neuron activity in different parts of the brain. “Novel sounds should elicit a larger-than-normal brain response, and that should register on the EEG signal,” Shinn-Cunningham explains. There are 128 tiny EEG sensors surrounding Emily’s head and upper neck. Each sensor is represented as a line jogging along on the computer monitor outside the darkened booth where Emily sits with her dad holding her hand, watching a silent version of her favorite movie, Shrek.

Today’s experiment is focused on the automatic end of sound-processing. A constant stream of beeps in one pitch is occasionally interrupted by a higher-pitched beep. How will Emily’s brain respond? Most of the time, the 128 EEG lines are tightly packed as they move across the screen. However, muscle movements generate large, visible peaks and troughs in the signals when Emily blinks or lolls her head from side to side. Once, just after a gummi bear break, several large, concentrated spikes show her chewing.

Shifts in attention are much more subtle, and the raw data will have to be processed before anything definitive can be said about Emily’s brain. The readout is time-coded with every beep, and the researchers will be particularly interested in the signals from the auditory areas in the brain’s temporal cortex, located behind the temples.

The beep test has six five-minute trials. But after about 20 minutes, Emily is getting restless. It’s been a long morning. She starts scratching at the net of sensors in her hair. She’s frustrated that Shrek is silent. The EEG signals start to swing wildly. From inside the booth, stomping and moans of protest can be heard. When the booth’s door is opened at the end of the fourth trial, Emily’s eyes are red. She’s crying. Her father and the researchers try to cajole her into continuing.

“Just two more, Emmy,” her dad says. “Can you do two more for daddy?” And Emily answers with a word she can speak, quite loudly. “Noooo!” They call it a day. Emily will return to the center as the experiments move from beeps to words, and they can finish the last two trials then. All in all, it’s been a successful morning. “She did great,” says Tager-Flusberg.

In one room at the Autism Center, the researchers have rigged up a mock MRI, using a padded roller board that can slide into a cloth tunnel supported by those foam “noodles” kids use in swimming pools. It’s for helping the children in these studies learn what to expect in the real brain scanners operated by Massachusetts General Hospital.

“We’ve been finishing up our pilot projects for the scanning protocols and trimming them down to a time the kids will tolerate,” says Tager-Flusberg. “At first, the imaging folks at MGH said we need 40 to 50 minutes in the scanner for each subject. I said, ‘Well, that’s not happening. These kids won’t last that long.’”

The brain scans will be done with the adolescents as well as a group of younger minimally verbal kids, aged six to ten. The younger kids will also participate in an intervention study of a new therapy called Auditory-Motor Mapping Training (AMMT). The therapy was developed by Gottfried Schlaug, a neurologist who runs the Music and Neuroimaging Lab at Beth Israel Deaconess Medical Center. In AMMT, a therapist guides a child through a series of words and phrases, sung in two pitches, while tapping on electronic, tonal drum pads.

“The idea, from a neuroscience perspective,” says Schlaug, is that “maybe in autistic children’s brains one of the problems is that the regions that have to do with hearing don’t communicate with the regions that control oral motor activity.”

“The first time she ever actually said a word to me that I understood, we were in Stop & Shop….She reached into the bin and she picked up an apple and said ‘apple.’”

Many of the same brain areas activated when we move our hands and gesture are also activated when we speak. So combining the word practice with drumming could help reconnect what Schlaug calls the “hearing and doing” regions of the brain. The initial results, from pilot work on a handful of children in 2009 and 2010, were promising. After five weeks of AMMT, kids who had never spoken before were able to say things like “more please” and “coat on.” That’s when Schlaug sought out Tager-Flusberg.

“I was aware of her importance in the field of autism research, and we wanted to discuss these findings with somebody who was an expert to ask if what we were seeing was believable,” says Schlaug, who is one of the principal investigators for the Autism Center. For the intervention study, the researchers aim to recruit about 80 minimally verbal children who will be randomized to either 25 sessions of AMMT or a similar therapy that differs in a few vital respects. (The control group subjects will have the option of getting AMMT after the study is complete.)

All the children will get brain scans before and after the therapy to see if improvements in vocal ability correspond with changes in the brain. “I would consider it a great success if we could turn on the brain’s ability to say words in an appropriate context,” says Schlaug. After that, he says, maybe AMMT could be scaled up to teach longer words and more complex phrases, or just get kids to a point where more traditional speech therapy could be effective.

Of course, as Tager-Flusberg stresses, the children classified as minimally verbal are “an enormously variable population,” both in their facility with words and in the other behavioral measures of the autism spectrum. The standards of improvement, and the hopes of the families joining in the center’s research, are no doubt just as varied.

Emily, for instance, goes to a public grade school in Boston. She’s in a special program for students with autism, and she has done well there. “She’s a relatively happy child,” her dad says. “She can count to 20. She knows her ABCs.” She can even spell a few words. Cat. Dog. Love.

Emily takes music classes at the local Boys & Girls Club, as well as dance and movement classes on Saturdays. Plus, the Brownes are a tight family. Brendan is an insurance underwriter, Jeannie teaches kindergarten part time, and Jennifer is a sophomore at the Boston Latin School. They’ve learned the fuller meanings of Emily’s limited vocabulary. When Emily says “pink,” for instance, she means yogurt, because her first yogurt was pink, strawberry, and delicious. When she says “orange,” that means quesadilla (her favorite food) because of the orange cheese they used at her school when she learned to make them.

Back on the patio in the Brownes’ backyard, Emily’s dad explains how she used to run away a lot. She’d take off on him in the grocery store and flee across the parking lot, oblivious to traffic. Once caught, she’d be perfectly calm, even laughing. She also used to hit people for no particular reason. Both behaviors abated after Emily started taking the antipsychotic drug risperidone. Still, transitions are tough for her, and she’s entering her final year at the school she’s attended since she was three. Her parents are searching for the right high school.

“We still treat her like a child, but she’s a teenager now,” her dad says. “I don’t know what it’s going to be like when she’s 23. Will she be able to live independently? And this communication piece is really key to that, which is why we jumped at the research.”

“They gave me a list of 100 words….quite a few of them I realized not only could she understand but she could say them….So I learned a lot more about Emily. We’re used to Emily the way she is, but Emily is growing and growing up.”

It’s nearing lunch time. Emily is making noises, slamming the lid of the grill and vocalizing a kind of “aaaahheeeeahhh,” drawing attention to herself.

“Emmy, you want some lunch? Are you hungry?” her dad asks.

“Orange?” Emily says. They go inside and get out the tortillas, salsa, and cheese. It’s time for the visitor to head home. “Goodbye, Emily,” he calls from the front door. “Bye,” she answers with a half wave.

“Very good!” her dad exclaims. “See, that was spontaneous!”
