Pushing the Boundaries of Artificial Intelligence

CAS lab develops robots modeled on the human brain

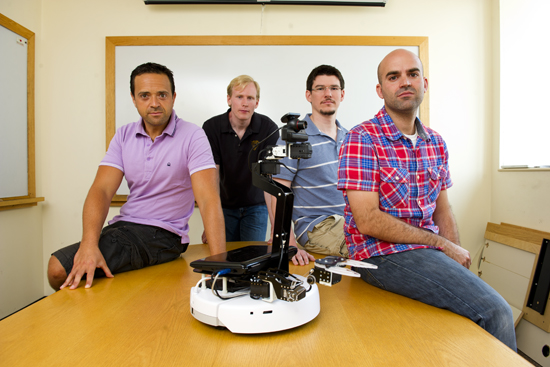

Neuromorphics Lab director Max Versace (from left), Ben Chandler (GRS’14), Byron Galbraith (GRS’15), and Sean Lorenz (GRS’14) with their prize robot (an iRobot Create® model), whose robotic arm can be controlled by an EEG cap. Photos by Cydney Scott

Massimiliano “Max” Versace sits in a conference room at BU’s Neuromorphics Laboratory. The lab director is holding one of the lab’s frequent visitors—his infant son, who is looking intently at his father. “This is a great example of a general-purpose learning machine,” Versace says—and he is only half joking.

Versace has been discussing the lab’s primary goal: to build an artificial intelligence that is smarter than any robot yet created. As every parent knows, babies have astonishing brains; they take in a wealth of information from the senses and over time learn how to move around, communicate, and begin to make independent decisions. Compared to a baby—or even the simplest animal—computers are sorely lacking in learning ability. Even sophisticated robots and software programs can accomplish only tasks they’re specifically programmed to do, and their ability to learn is limited by their programming. A Roomba® may manage to clean your house with random movements, but it doesn’t learn which rooms collect the most dirt or what is the least distracting time of day to clean.

Versace (GRS’07), a College of Arts & Sciences research assistant professor in the cognitive and neural systems department, calls this limited capability “special-purpose intelligence,” and his group is aiming for something much more sophisticated. The Neuromorphics Lab, launched in summer 2010 as part of the National Science Foundation–funded Center of Excellence for Learning in Education, Science, and Technology, is pushing the boundaries of artificial intelligence by creating a new kind of computer that can sense, learn, and adapt—all the behaviors that come naturally to a living brain.

BU is well known for its work in computational neuroscience: creating computer algorithms that describe the complex behavior of brains. The Neuromorphics Lab draws on that tradition, but is focused on turning this fundamental knowledge into real-world applications. The primary project is an ambitious program to develop what Versace refers to as a “brain on a chip.” The project is dubbed MoNETA (Modular Neural Exploring Traveling Agent), a name it shares with Moneta, the Roman goddess of memory. It would become the brain behind virtual and robotic agents that can learn on their own to interact with new environments, using the information they glean to make decisions and perform tasks.

“We want to eliminate, as much as possible, human intervention in deciding what the robot does,” Versace says. This is a tall order, which is why the lab is breaking down aspects of behavior, tackling them one at a time.

To demonstrate this idea, MoNETA project leader Anatoli Gorchetchnikov (GRS’05), a CAS research assistant professor at the Center for Adaptive Systems in the department of cognitive and neural systems, points to a screen in the conference room that shows a classic psychological experiment called the Morris water maze. A cartoon depicts the position of a rat that is dropped in a round pool of water. Rats can swim, but they don’t like to; the animal explores the pool until it finds a partially submerged platform it can stand on. On subsequent trials, it remembers the location of the platform and finds it much more quickly.

In this case, however, instead of a real rat, it’s a computer program designed to mimic a rat’s behavior. But rather than being programmed with the explicit task of finding the platform, this program has a series of motivations: a lack of comfort when in water motivates it to find solid ground, for instance, while a “curiosity drive” compels it to search nearby places it hasn’t been before. The idea is to create algorithms that produce lifelike behavior without explicitly telling the program what to do.
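The motivation-driven approach described above can be illustrated with a toy sketch. This is not the lab's model: the grid-shaped pool, the platform location, the visit-count form of the curiosity drive, and the names `run_trial` and `neighbors` are all assumptions made for illustration. The remembered platform location stands in, very crudely, for what the animal learns between trials.

```python
import random

SIZE = 7                 # hypothetical square "pool" of SIZE x SIZE cells
PLATFORM = (5, 4)        # hypothetical hidden platform location
MOVES = [(0, 1), (1, 0), (0, -1), (-1, 0)]


def neighbors(pos):
    """Cells reachable in one stroke, staying inside the pool."""
    x, y = pos
    cells = [(x + dx, y + dy) for dx, dy in MOVES]
    return [(cx, cy) for cx, cy in cells if 0 <= cx < SIZE and 0 <= cy < SIZE]


def run_trial(start, memory, rng, max_steps=10_000):
    """Swim until the platform is reached; return the number of steps taken."""
    pos = start
    visits = {pos: 1}
    steps = 0
    # Being in water is uncomfortable, so the agent keeps moving until it
    # stands on the platform (or the step budget runs out).
    while pos != PLATFORM and steps < max_steps:
        options = neighbors(pos)
        goal = memory.get("platform")
        if goal is not None:
            # Memory of past relief: head for where solid ground was found.
            pos = min(options,
                      key=lambda c: abs(c[0] - goal[0]) + abs(c[1] - goal[1]))
        else:
            # Curiosity drive: prefer the least-visited neighboring cell.
            fewest = min(visits.get(c, 0) for c in options)
            pos = rng.choice([c for c in options if visits.get(c, 0) == fewest])
        visits[pos] = visits.get(pos, 0) + 1
        steps += 1
    if pos == PLATFORM:
        memory["platform"] = pos   # discomfort relieved: remember this spot
    return steps


rng = random.Random(0)
memory = {}
first = run_trial((0, 0), memory, rng)    # exploration driven by curiosity
second = run_trial((0, 0), memory, rng)   # guided by the remembered location
print("steps on first trial:", first)
print("steps on second trial:", second)
```

Nothing in the first trial tells the agent where the platform is. It is pushed along only by discomfort and curiosity, and a later trial is shorter only because of what it remembered.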

Other lab members are addressing different aspects of brain function. Gennady Livitz (GRS’11) is working with postdoc Jasmin Léveillé (GRS’10) on the visual systems of MoNETA—how it will interpret what it sees—and implementing those systems in simple robots. Others are working on how it will sense sounds in its environment, and how it will make decisions.

Wired for brain power

Modeling the complexities of the brain is only the first task. Versace and his colleagues believe that a lifelike artificial brain would require innovations in both the software and the hardware that houses it. While some lab members are creating computer models of the brain, the group is also working in partnership with Hewlett-Packard to develop the operating system for such a brain, called Cog Ex Machina, or Cog. This software will run on hardware built from memristors, an innovative type of electrical component, just a few atoms wide, created by HP.

Ben Chandler (GRS’14), a cognitive and neural systems PhD candidate, explains that a new kind of hardware is necessary to overcome fundamental physical limits in what current computer chips can accomplish. A key difference between the way brains are wired and the way computers are wired is that computers store information in a separate place from where they process it: when they perform a calculation, they retrieve the necessary information from memory, perform the processing task, and then store the result in another location. Brain cells, however, manage to do all of this at the same time and location, making transfer of information from cell to cell much faster and more efficient.

Another key difference is power. For all its tremendous activity, the human brain runs on the equivalent of a 20-watt lightbulb. If the goal is to create a free-moving machine with an intelligence on a par with even a small mammal, it can’t involve large, power-guzzling supercomputers. Such a machine must have a “brain” that is dense, compact, and requires little power. Memristors, says Versace, allow hardware designers to build chips with unprecedented density that operate at very low power.

Because the lab’s work requires applying a deep understanding of the brain to the practical problems of software development, and then integrating that software into computer chips and eventually robotic vehicles and devices, it is highly interdisciplinary. “We are a bridge between neuroscience and engineering,” Versace says. “We are fluent in both languages. We can talk neurotransmitters and molecules with biologists and electronics and transistors with engineers.” Lab members come from a wide range of backgrounds, some bringing knowledge in neuroscience, psychology, and biology, and others in computer science, engineering, and math. To thrive here, however, they need to feel comfortable working at both ends of the bridge.

Doctoral student Sean Lorenz (GRS’14) works on interfacing the robot with an EEG (electroencephalogram) cap. The target application is medical: robotic devices that will enable people with disabilities to interact with the world by means of noninvasive brain/machine interfaces.

The composition of the laboratory’s staff signals a focus on the future: newer faculty members and graduate students spearhead projects, without the traditional hierarchies of an academic lab. “It’s a brand-new field, and it’s wide open,” says Chandler. “For anyone who has the interest and the talent, there’s an opportunity to move in.” Chandler personifies this point: one of the lab’s cofounders, he has taken a leading role in the partnership between the lab and HP, while still managing to make progress on his graduate thesis.

Usually academic labs make theoretical advances and publish scientific papers, but transferring this work to the real world requires a different approach. Cognitive and neural systems postdoctoral fellow Heather Ames (GRS’09), one of the lab’s founding members, is leading an outreach effort to engage industry in the lab’s work. She and her colleagues believe that such partnerships are crucial to keep these ideas from languishing in a lab. Versace says that the ultimate goal is to “take neuroscience out of the lab” and turn theory into reality.

This story originally ran in the fall 2011 issue of arts + sciences.
