An Inside Look

ENG researchers from across disciplines are joining forces to produce images and insights into how neurons and other cells work

By Patrick L. Kennedy

An electrical engineer, a biomedical engineer, and a physicist walk into a lab. Wait—make that an electrical engineer, two biomedical engineers, a materials science engineer, and a physicist. And, so far, that’s just two people—Michelle Sander (ECE, BME, MSE) and Allyson Sgro (BME, CAS Physics).

They’re followed by colleagues from the Photonics Center, the Biological Design Center, and even Australia’s Swinburne University. This team has assembled to develop a custom-built microscope that can image a cell membrane in its natural environment, without extensively preparing (and often compromising) the sample as many other methods do.

“We have experts in optics, electrical engineering, cell engineering,” says Sander. “The project spans disciplines while it spans continents. And it’s been very enriching for the PhD students involved, as they’ve each learned a lot about the other fields.”

This is how it’s done at Boston University College of Engineering. Its departments and divisions—Biomedical Engineering, Electrical and Computer Engineering, Mechanical Engineering, Materials Science & Engineering, and Systems Engineering—don’t exist in silos. In one particularly fruitful area—imaging—researchers across the college are bringing together expertise in biomedicine, optics, and data science to see what’s going on inside our bodies. Whether they’re imaging cells, lighting up groups of neurons, or measuring the blood flow in regions of the brain, they are advancing our understanding of our inner workings.

Professor David Boas (BME, ECE) could be describing any number of projects when he says, of his own work on a wearable brain imaging cap, “BU is really strong in photonics and neuroscience and the data sciences, and this project draws on all three.”

Moreover, Boas says, the devices he and colleagues are developing will deepen our knowledge of how humans interact. “And society is social interaction,” he says. “So if we can better understand how the brain functions in real-life settings, hopefully we will understand how to make society a better place.”

Learning to forget

A few rusty nails littered a path near the home of Midnight. A smart black Labrador, she knew the way was dangerous, and she avoided it.

Then the path was cleared. Still, it took Midnight six months before she could bring herself to set paw on the path. “There was nothing I could do to convince her,” says Associate Professor Bobak Nazer (ECE, SE), the dog’s owner. “She just remembered, ‘not safe.’”

In a way, military veterans and others suffering from post-traumatic stress disorder (PTSD) struggle with the same problem: It can be hard to dissociate certain settings or sounds—say, a large, wide-open space or fireworks—from certain dangers they’ve experienced—say, sniper fire.

Nazer is one of several ENG researchers collaborating on studies of the hippocampus, the region of the brain that is critical for learning and memory in both animals and humans. Their work has implications not only for PTSD but also for dementia and Alzheimer’s disease, which are associated with hippocampal atrophy.

In recent years, Associate Professor Xue Han (BME) has made a name for herself as a pioneer in optogenetics and optical imaging. Using pulses of light to control and observe the behavior of different neurons, she has discovered new types of brain signals.

“We image hundreds and hundreds of neurons simultaneously,” says Han, “and that actually creates a huge problem on the data analysis front.”

That problem led Han to link up with Nazer, an expert in data science and high-dimensional statistics.

“For me,” says Nazer, “dealing with information and data processing, what’s interesting is trying to ground a mathematical problem in some kind of useful engineering scenario.”

Along with Professor Venkatesh Saligrama (ECE, SE), the pair earned a Dean’s Catalyst Award and two National Science Foundation grants aimed at leveraging strides in biomedicine and machine learning to advance knowledge of how the brain works.

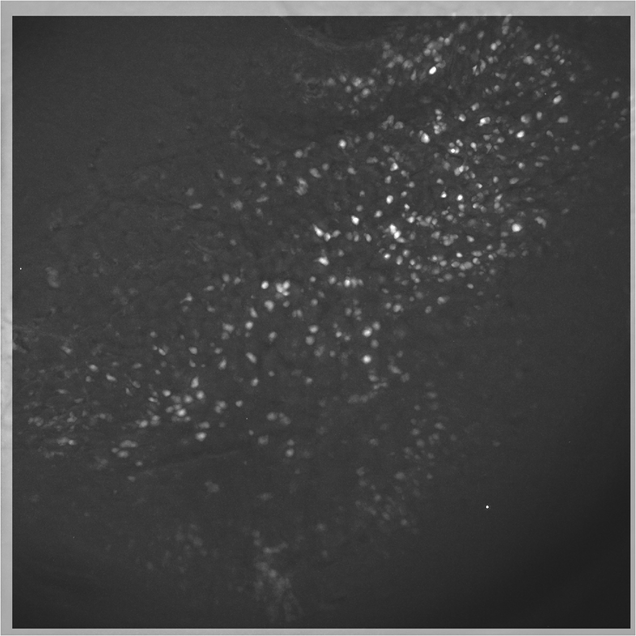

This year, Han and Nazer and ENG colleagues published a study that documents mice learning and unlearning a behavior. First, the mice heard a tone that was soon followed by an annoying puff of air in the eye. Using a technique called calcium imaging, the researchers identified the group of neurons in the hippocampus that was involved in learning to associate the tone with the puff.

After several days of this training, the mice spent one additional day listening to the tone followed by nothing—no more puff. At first, the mice continued to blink, bracing themselves for the air puff. But, fairly quickly, they got used to the tone having no particular meaning. “They learned to forget,” says Han. “And that involved a different population of cells.”

The study was the first to visualize a large-scale neural network and provide detailed, real-time evidence that two distinct populations of neurons are activated when the brain learns something and then learns to disregard it. A greater understanding of how that process works might eventually benefit patients with PTSD and anxiety disorders, as well as those with diseases related to memory loss and cognitive decline.

“I’ll never be able to say, ‘I personally helped this Alzheimer’s patient,’” says Nazer, “but I feel I’m helping Xue’s lab develop tools toward someday making that impact.”

“What I like the most about the neuroscience we do in our lab is that we’re using new techniques and new ways to collect data, and that means we need new ways to extract information from the data,” says BME PhD student Rebecca Mount, the lead author of the paper. “That lends itself really imperatively to collaboration across different types of science. We get to collaborate with data scientists and mathematicians. It was just really fun to all work together and absolutely necessary to have all of us on the team.”

Taking imaging outside the lab

Boas has been pioneering wearable brain imaging systems for more than 20 years. He is the director of the Neurophotonics Center, the first facility of its kind in the U.S. The center assembles researchers from across disciplines—from psychology and biology to health and rehabilitation sciences to electrical and computer engineering and mechanical engineering—to study the workings of the brain.

Working with a $5.9 million National Institutes of Health grant, Boas and his team are developing a portable, wearable brain imaging cap. Resembling a swim cap studded with light sources and detectors, the device uses functional near-infrared spectroscopy (fNIRS) to track blood flow in the brain, revealing which neurons are activated during different activities.

“When you shine a flashlight on your hand, you see red,” says Boas. “That’s because the light is being absorbed by hemoglobin. Light scatters through the scalp and skull in exactly the same way.”

Light scatters, and it can also be absorbed, Boas explains. The probability of absorption is much lower than for scattering, and if the light used is near infrared, the probability for absorption is lower still. “As such, light scatters hundreds of times before it is absorbed,” says Boas. “This means light can scatter all the way through the scalp and skull into the brain and back and thus report to us the amount of hemoglobin in that part of the brain.”
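Boas’s point that light scatters many times before it is absorbed can be illustrated with a toy calculation. The Python sketch below is a hedged illustration, not the Neurophotonics Center’s software: it assumes each photon interaction is either a scatter or an absorption, with absorption probability μa / (μa + μs). Under that assumption, the number of scatters before absorption follows a geometric distribution with mean μs / μa. The coefficients (μs = 10 per millimeter, μa = 0.02 per millimeter) are assumed round numbers of roughly the order of magnitude commonly cited for soft tissue in the near infrared, not measurements from this work, and the model ignores where the photon actually travels.

```python
import random

# Assumed, illustrative coefficients in units of 1/mm (not values from the article).
MU_S = 10.0   # scattering coefficient
MU_A = 0.02   # absorption coefficient, standing in for near-infrared light


def expected_scatters(mu_s: float, mu_a: float) -> float:
    """Mean number of scattering events before absorption in this toy model.

    Each interaction is an absorption with probability p = mu_a / (mu_a + mu_s),
    otherwise a scatter. The count of scatters before the first absorption is
    geometric with mean (1 - p) / p, which simplifies to mu_s / mu_a.
    """
    return mu_s / mu_a


def simulate_scatters(mu_s: float, mu_a: float, n_photons: int = 4000, seed: int = 0) -> float:
    """Monte Carlo estimate of the same mean, photon by photon.

    Only the sequence of scatter/absorb outcomes is modeled; photon positions,
    tissue layers, and escape from the head are ignored.
    """
    rng = random.Random(seed)
    p_absorb = mu_a / (mu_a + mu_s)
    total = 0
    for _ in range(n_photons):
        scatters = 0
        # Keep scattering until a uniform draw falls below the absorption probability.
        while rng.random() >= p_absorb:
            scatters += 1
        total += scatters
    return total / n_photons


if __name__ == "__main__":
    print(f"analytic mean:  {expected_scatters(MU_S, MU_A):.0f} scatters")
    print(f"simulated mean: {simulate_scatters(MU_S, MU_A):.0f} scatters")
    # A hypothetical, much higher absorption coefficient for comparison.
    print(f"higher absorption: {expected_scatters(MU_S, 0.5):.0f} scatters")
```

With these assumed values the analytic mean is 500 scatters, and the simulated mean lands near it; raising the hypothetical absorption coefficient to 0.5 per millimeter drops the mean to 20. That contrast mirrors the article’s point: the lower the absorption relative to scattering, the more times light can bounce around, which is what lets it travel into the brain and back.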

Boas combines fNIRS readings with EEG (electrical) signals to gain the richest possible data on the brain and how it changes moment to moment.

Boas points out that a variety of BU faculty will use the system for their own studies. For example, Swathi Kiran at Sargent College normally uses functional magnetic resonance imaging (fMRI) to gauge the effectiveness of treatment for stroke survivors learning to speak again. fMRI is the standard way to measure blood flow in the brain, but it must be performed while the subject lies very still in a big, noisy machine.

“She’s excited about using the wearable system instead, because she’ll actually be able to measure the brain activity during the treatment regimen and see the impact on the brain on a day-to-day basis, in real-world settings,” Boas says.

At the College of Arts & Sciences, David Somers will use the system in a study of how attention and perception work in the brains of people who are walking while looking at their phones.

Assistant Professor Laura Lewis (BME) will be using it in her sleep studies. “She’s been looking at sleep with EEG and fMRI, but one thing she hasn’t been able to do is get her subjects to go into REM sleep in the fMRI machine,” says Boas. “So we’re starting to work with her to modify the technology and engineer a different cap that will be more comfortable on subjects, so they can sleep through the night.”

“fMRI has taught us a lot about the functioning human brain,” says Boas, “but it hasn’t taught us about the socially interacting human brain, and what really makes us human is that social interaction. The devices we’re now developing will allow us to better understand what makes us human.”

Not just a pretty picture

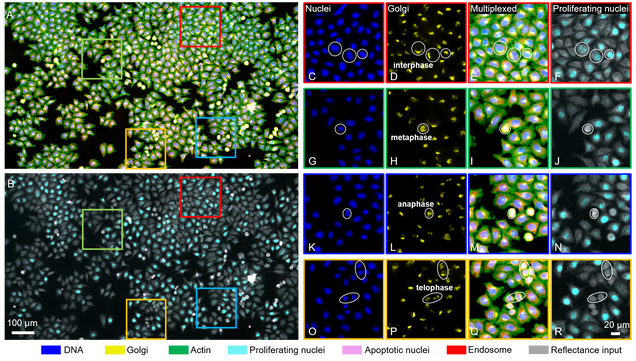

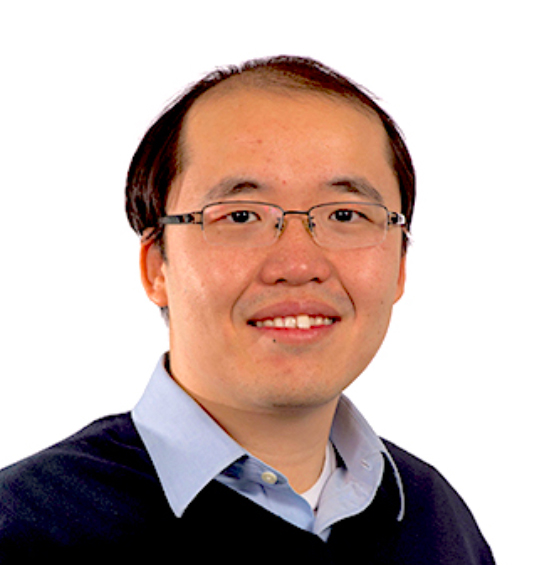

Not all ENG imaging pioneers study the brain, per se. Assistant Professor Lei Tian (ECE) has teamed up with several BU collaborators, bringing together expertise in machine learning, optics, and biomedicine to develop a novel microscope technology that produces a richer set of cell data with the help of deep learning.

“The ultimate goal is to understand cell functions and behaviors,” says Tian, who leads the Computational Imaging Systems Lab. “This is a building block of biology.”

To see what’s going on inside a cell, researchers commonly tag it with fluorescent stains or dyes to identify subcellular structures. But cell preparation is tedious, and most methods let researchers view at most three colors at a time, limiting their picture of the cell’s components.

Tian and his colleagues use digital labeling that can apply six virtual fluorescent labels, allowing them to view six aspects of a cell at the same time, such as its DNA, its components, and its stage in the life cycle.

The group is among the first in cytometry (cell analysis) to use reflectance microscopy: Their custom-built microscope captures back-scattering signals that provide “exquisite sensitivity in detecting nanoscale structural changes,” Tian and co-authors write.

And they are the first to apply deep learning to analyze the reflectance images. “Once you have these beautiful virtual images, to the untrained eye, it’s very difficult to assess whether they’re just beautiful or they’re actually biologically correct,” says Tian. “We showed that this new data-driven imaging cytometry technique is fairly accurate.”

Importantly, some of the parameters that the Tian lab’s method can pick up on with great accuracy include markers for cancer and other diseases that are difficult to detect with current methods.

The culture of collaboration at ENG has been critical to the project, says Tian, who earned ENG’s Early Career Excellence in Research Award in 2021.

“It’s multidisciplinary,” says Tian of his team’s work. His collaborators include researchers not only in ENG’s ECE and BME departments but also in the College of Arts & Sciences’ Biology, Philosophy, and Neuroscience departments, and the School of Medicine. “I consider myself an instrument builder slash data science developer in the field of biomedical science and bioengineering,” he adds. “Deep learning is a big field, but you need domain expertise—in this case, biomedical microscopy—in order to accurately interpret the data. That has been the major contribution from my lab.”

Seeing across boundaries

Like the Tian group’s work, the custom-built microscope of Sander, Sgro, and their colleagues seeks to avoid the drawbacks of labeling cells with dyes, which can affect the cell’s health and behavior.

As an alternative, Sander, Sgro, Shyamsunder Erramilli (CAS Physics, MSE, BME), and Australian biomedical engineer Sally McArthur have demonstrated the capabilities of a novel technology they call vibrational infrared photothermal amplitude and phase signal (VIPPS) imaging. Essentially, their method can detect the chemical composition as well as thermal barriers in and around cells—for example, the membranes of a cell and its nucleus—as a way to gain a clearer picture of the cells and their subcellular structures in tissue models mimicking a mouse or a human cell.

“We have shown that if the cells are grown in an environment that is designed to copy the structure of our tissue—in this case, skin—then we can see these cells within that environment,” says McArthur, who began collaborating with Sander through an Air Force Office of Scientific Research (AFOSR) Young Investigator Program award and the AFOSR Biophysics Program. “This is really important as it shows that we can use this technique to look at cells in their native environments and not just when they are isolated and attached to plastic in the lab.”

Panagis Samolis (’21), who worked on VIPPS as an ECE PhD student, offers a sentiment common to many such collaborative projects at ENG.

“I have personally benefited significantly from these interdisciplinary and international collaborations,” says Samolis. “It is a unique and exciting opportunity for a student to have multiple experts to consult, work closely with other students beyond one’s cohort and learn from them. In addition, it allows you to broaden your perspective by getting glimpses into other cultures and education systems.”

And, always, says Samolis, ENG researchers—whether or not they work in imaging—keep the bigger picture in mind.

“In my opinion, the value of a technology is measured mostly by the impact of its direct application for advancing society and human life,” says Samolis. “Thus having a better understanding of the societal needs can help guide new technologies in a targeted and beneficial way. Overall, the sharing of knowledge and resources is a vital aspect of scientific progress.”

This article originally appeared in the fall 2021 issue of ENGineer, the Boston University College of Engineering alumni magazine.