New Technology Could Predict When Someone’s Mobility Is Declining

CISE Faculty Affiliate Roberto Tron uses Visual-Inertial Filtering for Clinically Relevant Human Walking Quantification

As we age, the likelihood of falling and getting injured increases. But what if these accidents could be prevented? CISE faculty affiliate Roberto Tron is working to prevent such injuries by monitoring mobility with cameras, body-worn sensors, machine learning, and estimation algorithms borrowed from robotics. More broadly, his research focuses on automatic control and robotics, with a particular interest in geometric problems.

Working with Lou Awad from the School of Rehabilitation and David Levine from Brigham and Women’s Hospital, Tron aims to predict when someone’s mobility is declining so that the person can get help before a fall that leads to hospitalization.

Prof. Tron compares the technology to blood work that can reveal whether you are at higher risk of heart disease (e.g., from high cholesterol). Today, doctors have no equivalent test for predicting mobility-related falls and injuries; the goal of this technology is to give them one.

“Before the fall, studies have shown that signs of decline in how subjects move, like how fast they can walk, is predictive of whether they are going to fall in the next six months or the next year and whether they are going to be hospitalized,” Tron said.

He and his team, consisting of Ph.D. candidates Marc Mitjans and Xinhuan (Leo) Sang and former postdoc Michail Theofanidis, combined visual and inertial measurements, an approach known as visual-inertial odometry (VIO), to evaluate the mobility of people recovering from strokes. Using cameras and inertial measurement unit (IMU) sensors, they measured the movements of patients completing the 10-meter walk test, a standard clinical assessment that quantifies mobility by timing how fast a patient walks over a short, fixed distance.
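The basic quantity a walk test produces is gait speed: distance covered divided by the time taken. The sketch below is a minimal illustration assuming the timed distance and the start and end timestamps are already known; the function name `gait_speed` and the trial values are hypothetical, and some clinical protocols time only the middle portion of the walkway to exclude acceleration and deceleration.

```python
def gait_speed(distance_m: float, t_start_s: float, t_end_s: float) -> float:
    """Return average walking speed (m/s) over a timed walkway segment.

    Hypothetical helper for illustration; not part of the team's system.
    """
    elapsed = t_end_s - t_start_s
    if elapsed <= 0:
        raise ValueError("end time must be after start time")
    return distance_m / elapsed


# Hypothetical trial: 10 m covered in 12.5 s
print(round(gait_speed(10.0, 0.0, 12.5), 2))  # 0.8
```

A vision-and-IMU system aims to produce this number, and richer gait measures, automatically rather than relying on a clinician with a stopwatch.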

IMUs, also found in phones, contain an accelerometer that measures linear acceleration (including the pull of gravity) and a gyroscope that measures how fast the device is turning; from these, the device’s orientation and motion can be estimated. By strapping IMU sensors to the lower body (thighs, shanks, knees, ankles, hips), the team was able to infer when the patient was taking a step and the angular velocity of the leg.
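One simple heuristic for finding steps from a shank-mounted gyroscope is to look for peaks in angular velocity, which occur as the leg swings forward. The sketch below assumes a single-axis angular velocity signal in rad/s and uses a threshold plus a minimum spacing between peaks; the function name `detect_swing_peaks`, the threshold, and the spacing are hypothetical choices, not the team's published method, and the input is a synthetic sine wave rather than real patient data.

```python
import math


def detect_swing_peaks(omega, fs_hz, threshold=1.0, min_gap_s=0.4):
    """Return sample indices of local maxima in angular velocity (rad/s)
    that exceed `threshold` and are at least `min_gap_s` apart.

    Each peak is taken to mark one swing of the instrumented leg.
    Threshold and spacing are hypothetical placeholder values.
    """
    min_gap = int(min_gap_s * fs_hz)
    peaks = []
    for i in range(1, len(omega) - 1):
        is_local_max = omega[i] > omega[i - 1] and omega[i] >= omega[i + 1]
        if is_local_max and omega[i] > threshold:
            # Skip peaks too close to the previous one (noise or double peaks)
            if not peaks or i - peaks[-1] >= min_gap:
                peaks.append(i)
    return peaks


# Synthetic shank signal: one swing per second, sampled at 100 Hz for 5 s
fs = 100
signal = [3.0 * math.sin(2 * math.pi * 1.0 * n / fs) for n in range(5 * fs)]
print(len(detect_swing_peaks(signal, fs)))  # 5
```

Real gyroscope data is noisy, so a practical version would typically low-pass filter the signal before peak detection.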

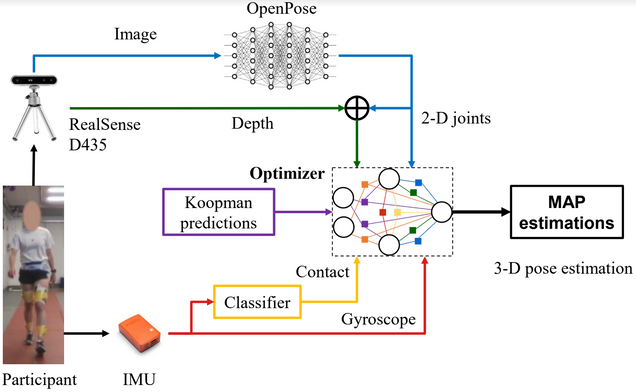

However, IMU sensors do not give the full picture of movement. For instance, they cannot accurately measure how far a person has walked: distance has to be obtained by integrating acceleration twice, so even small sensor errors accumulate into large drift. This is where the cameras come in. Through machine learning, including a pre-trained deep neural network (DNN) called OpenPose, the camera system extracts the joints of the human body, recognizes the activity, and detects which limb is moving even when the image is noisy. On a more technical level, OpenPose outputs the 2D pixel coordinates of each joint in the camera image, and these are then combined with depth measurements to locate the joints in 3D. Tron and his team are also working to predict the person’s next position from the current frame or position.
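Combining a 2D joint location with depth is commonly done with the pinhole camera model: a pixel (u, v) at depth z maps to x = (u − cx)·z / fx and y = (v − cy)·z / fy in the camera frame. The sketch below assumes the depth value is measured along the camera’s optical axis and is aligned with the color image; the `Intrinsics` values and the `backproject` helper are hypothetical, since the article does not specify the camera or its calibration.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera parameters, in pixels (hypothetical values below)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def backproject(u: float, v: float, depth_m: float, K: Intrinsics):
    """Map a joint's pixel coordinates (u, v) plus its depth (m) to a
    3D point (x, y, z) in the camera frame using the pinhole model."""
    x = (u - K.cx) * depth_m / K.fx
    y = (v - K.cy) * depth_m / K.fy
    return (x, y, depth_m)


# Hypothetical 640x480 camera and a knee keypoint detected at pixel (380, 300)
K = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
print(backproject(380.0, 300.0, 2.0, K))  # (0.2, 0.2, 2.0)
```

Applying this to every detected joint in every frame yields a 3D skeleton trajectory that can be fused with the IMU data.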

Information from the body-worn IMU sensors and the processed camera images is then fused using GTSAM, a factor-graph optimization library typically used for localization and mapping in robotics, to estimate the final pose. By reconstructing human movement this way, the final system can give a patient a frailty score equivalent to the one a trained clinician would have given.
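To illustrate why fusing the two sensors helps, the sketch below uses a plain one-dimensional Kalman filter rather than GTSAM’s factor graphs: the IMU-derived velocity predicts the next position (accumulating drift), and camera position measurements correct it whenever they are available. The function name `kalman_1d`, the noise variances, the velocity bias, and the simulated occlusions are all hypothetical; this is a simplified stand-in, not the model used in the paper.

```python
def kalman_1d(velocities, camera_positions, dt, q=0.01, r=0.05, x0=0.0, p0=1.0):
    """Fuse IMU velocity (prediction) with camera position (update)
    for a single coordinate.

    velocities[k]: velocity from the IMU during step k (m/s)
    camera_positions[k]: camera position at step k (m), or None if missing
    q: process noise variance added per step (hypothetical)
    r: camera measurement noise variance (hypothetical)
    """
    x, p = x0, p0
    estimates = []
    for v, z in zip(velocities, camera_positions):
        # Predict: integrate the IMU velocity; uncertainty grows with drift
        x = x + v * dt
        p = p + q
        # Update: pull the estimate toward the camera measurement if present
        if z is not None:
            gain = p / (p + r)
            x = x + gain * (z - x)
            p = (1 - gain) * p
        estimates.append(x)
    return estimates


dt, n = 0.1, 50
true_v, imu_v = 1.0, 1.1  # IMU velocity biased by +0.1 m/s (hypothetical)
truth = [true_v * dt * (k + 1) for k in range(n)]
# Camera misses every fifth frame, e.g. due to occlusion
camera = [None if (k + 1) % 5 == 0 else truth[k] for k in range(n)]
fused = kalman_1d([imu_v] * n, camera, dt)
dead_reckoning = imu_v * dt * n
print(f"IMU-only error: {abs(dead_reckoning - truth[-1]):.3f} m")
print(f"fused error:    {abs(fused[-1] - truth[-1]):.3f} m")
```

The IMU alone drifts steadily away from the true position, while the fused estimate stays close to it even across missing camera frames; a factor-graph approach such as GTSAM generalizes this idea to full 3D poses and many sensor constraints at once.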

The technology does not require the camera to be on all the time. As long as patients move around in front of it once a week or every few days, the system can process the data and evaluate whether their mobility is declining. If it falls below a certain threshold, the designated care provider will be alerted to check on the patient.
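The alerting step can be sketched as a comparison between each new session and the patient’s own baseline. The example below assumes each session produces a single gait-speed value; the function `should_alert`, the 15% drop, the optional absolute floor, and the weekly readings are hypothetical placeholders rather than clinical cut-offs or the team’s actual criterion.

```python
from statistics import mean


def should_alert(speeds_m_s, baseline_n=3, drop_fraction=0.15, floor_m_s=None):
    """Return True if the latest gait-speed reading is more than
    `drop_fraction` below the mean of the first `baseline_n` readings,
    or below `floor_m_s` if an absolute floor is given.

    All thresholds are hypothetical placeholders.
    """
    if len(speeds_m_s) <= baseline_n:
        return False  # not enough history to compare against yet
    baseline = mean(speeds_m_s[:baseline_n])
    latest = speeds_m_s[-1]
    if floor_m_s is not None and latest < floor_m_s:
        return True
    return latest < (1 - drop_fraction) * baseline


weekly = [1.10, 1.08, 1.12, 1.05, 0.98, 0.90]  # hypothetical weekly sessions (m/s)
print(should_alert(weekly))  # True
```

Comparing against the patient’s own baseline rather than a population average accounts for individual differences; averaging the last few sessions instead of using a single reading would make the check less sensitive to one bad day.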

In the future, these cameras will hopefully be inexpensive enough to install in the homes of patients whom health providers consider at risk. Through his collaboration with Prof. Awad, Prof. Tron hopes to integrate the system with electrostimulation, which sends electrical impulses to muscles that are not strong enough on their own to perform actions such as standing up quickly. The cameras would predict what the person is trying to do, and the electrostimulation would then assist the movement.

“The goal is to prevent people from going into hospitals,” Prof. Tron said. “A huge part of the population is getting older, and every time you hospitalize somebody, you have, in addition to the acute trauma, the risk of contracting other unrelated diseases. This isn’t even taking into account the time it takes to recover from a fracture or other trauma and subsequent rehabilitation.”

Tron started working on this project after meeting Awad in a break room during a Center for Teaching and Learning workshop. Their collaboration has since received funding from the American Heart Association and the National Institutes of Health.

M. Mitjans et al., “Visual-Inertial Filtering for Human Walking Quantification,” 2021 IEEE International Conference on Robotics and Automation (ICRA), 2021, pp. 13510-13516, doi: 10.1109/ICRA48506.2021.9561517.