Not Your Old McDonald’s Farm

Vertical farms could provide the food of the future.

According to a growing body of evidence, the human species may be in crisis. Earth is becoming overpopulated, polluted, and drained of resources at an alarming rate. The basic issue is simply about numbers: there are a whole lot of us, and not much land. The planet is currently populated by 7 billion human beings, and projected to rise to 9.5 billion people by 2050.1 In order to feed the growing population, we would need an area of additional farmland approximately the size of Brazil.2 Yet we cannot create much more arable land than we have. The productivity of plant life may already be at maximum capacity even as we try to increase crop yield year after year.3 We need to work with what we have already taken to meet our needs.

Unfortunately, we are not using our resources wisely. Commercial agricultural practices typically have high-energy costs due to irrigation methods, fertilizer, and fuel usage. As the cost of fuel increases, so does the market cost of food. While products like corn ethanol reduce fuel costs in the short term, this means more of our limited crop supply is not available for consumption and may further increase the cost of food. The current system also forces us all to depend on a select few countries to produce enough to feed the whole world, causing high shipping costs, and one poor growing season can place dire limitations on all of us.4

Furthermore, current irrigation methods waste more water than any other human activity. Between 70% and 90% of the world’s freshwater supply (which is a mere 3% of all water to begin with) is used for irrigation of farmland and then rendered unsanitary for human use due to pesticides.2 On top of these issues, modern agricultural practices cause high outputs of pollutants in our air and water and lead to food-borne illnesses due to unsanitary animal overcrowding. Our only hope is to change the way we feed ourselves, to reduce waste and to maximize efficiency without increasing our consumption of materials. What we need is another agricultural revolution.

Solving a Growing Problem

The vertical farm is a potential solution for these global issues. Ideally, a vertical farm would be a large, independently operating structure centrally located in a major city. It would feature two multistory, skyscraper-like buildings working together: one to manage food production with nutrient film techniques, and another to manage waste through living machines and generate energy with photovoltaic cells and carbon sequestering. Popularized in recent years by Dr. Dickson Despommier, a professor at Columbia University, the concept originated in the 1950s with a “Glass House” and has been further developed by several innovators over the years.5 Controlled Environment Agriculture (CEA), which allows for control of temperature, pH, and nutrients, has also been employed for many years in commercial greenhouses in order to produce crops unsuited for the local climate. Although these greenhouses are often high-yielding, they typically require fossil fuels that produce considerable emissions, and do not eliminate agricultural runoff. In contrast, the vision of the vertical farm is one of grand scale: sky-scraping, glass-paneled buildings placed in every major urban center to provide affordable, carbon neutral, pollutant-free food to the cities’ residents. A project of this scale involves a huge number of factors, all dependent on the ratio of cost to potential yield. Such farms could completely change the way we get food from the ground to the table. Instead of shipping produce from several states away, or from outside of the country, grocery stores could stock fruits and vegetables grown right in the heart of their city. This would reduce pollution, increase production, and be healthier for ourselves and the planet.

Designing the Future Farm

Constructing vertical farms within major cities may eliminate the problems of land shortage, pollution, deforestation, water shortage, and unsanitary practices commonly found in the agriculture industry around the world. Office spaces could be located nearby for the business and management end of the operation. This design would have high initial costs, but over time would recoup these losses and become highly profitable while benefiting urban centers and the environment. Savings from reduced energy and maintenance costs would over time compensate for initial losses, and sales profits are projected to be comparable to stock market averages.2 Despite high costs for technology and construction at the outset, vertical farms could have astounding effects on local and global populations. The cleanliness and convenience of an environmentally friendly food center would improve the property value of surrounding urban neighborhoods and improve quality of life. It would be an economic boon to cities and generate a wide range of new urban jobs, but would cause employment and sales losses for rural farmers. Global effects could be even more important. This design would be especially effective in tropical and subtropical locations, where incoming solar radiation is at a maximum and controlled climates are easiest to maintain. If implemented in less developed nations in these locations, vertical farms could transform those economies and be a catalyst to slow excessive population growth as urban agriculture is adopted as a strategy for sustainable food production. It might also reduce or eliminate the occurrence of armed conflict over natural resources, such as water and land, as both would be more available thanks to successful conservation.

Putting the Design to Work

Vertical farming proposes to be the ultimate design for sustainability and conservation of resources. A controlled climate allows for high yield, year-round crop production. Consider strawberries as an example: 1 acre of berries grown indoors produces as much fruit in one year as 30 outdoor acres.2 Generally, growing indoor crops is four to six times more productive than outdoor farming. This method also protects plants against inclement weather, parasites, and disease, so fewer crops are lost and toxic chemicals are not needed for pesticides. Using special dirt-free hydroponic systems and re-circulating ‘living machines’, we can even recycle city waste water and turn it into clean water for irrigation.6 This method of water use would drastically reduce consumption, eliminate most pollutants found in runoff, and lead to cleaner rivers, lakes and oceans. Additionally, a vertical farm could add energy “back to the grid”, rather than consuming nonrenewable fossil fuels. Tractors, plows and shipping trucks, all “gas guzzlers”, would be unneeded in a vertical farm. A combination of solar panels and sequestered methane generated from the composting of non-edible organic materials could generate the heat necessary for a controlled climate.7 Essentially, the building could run on sunshine and garbage. Waste from other parts of the city could even be reduced if we were able to incorporate it for methane generation in our farm. Vertical farming might be our answer for low waste, high yield farming of the future.
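The yield figures quoted above lend themselves to a quick back-of-the-envelope calculation. The short Python sketch below is only an illustration: the function name and constants are hypothetical, and the multipliers are simply the numbers stated in the text (30 for strawberries, four to six for indoor crops in general), not independent data.

```python
def outdoor_equivalent_acres(indoor_acres: float, yield_multiplier: float) -> float:
    """Return the outdoor acreage that would match the given indoor acreage.

    yield_multiplier is how many times more productive one indoor acre is
    than one outdoor acre (an assumption taken from the article's figures).
    """
    if indoor_acres < 0 or yield_multiplier <= 0:
        raise ValueError("acreage must be non-negative and multiplier positive")
    return indoor_acres * yield_multiplier


# Figures quoted in the article (hypothetical constant names).
STRAWBERRY_MULTIPLIER = 30      # 1 indoor acre ~ 30 outdoor acres of strawberries
GENERAL_MULTIPLIERS = (4, 6)    # indoor crops are "four to six times" more productive

if __name__ == "__main__":
    indoor = 1.0
    print("Strawberries:", outdoor_equivalent_acres(indoor, STRAWBERRY_MULTIPLIER), "outdoor acres")
    low, high = (outdoor_equivalent_acres(indoor, m) for m in GENERAL_MULTIPLIERS)
    print("General crops:", low, "to", high, "outdoor acres")
```

Under these assumptions, a single indoor acre stands in for somewhere between 4 and 30 outdoor acres, depending on the crop.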

However, we likely won’t see this solution implemented any time soon. While technologically possible today, urban vertical farms are unlikely to find a place in society in the near future because of the finances required to begin this endeavor. Hope lies with universities and private institutions to expand the idea with further research and with far-sighted investors to provide the funds to implement this incredible solution. Perhaps with more information, a corporation could be convinced to finance the first commercial vertical farm.

References

1 United Nations, Department of Economic and Social Affairs, Population Division (2011): World Population Prospects: The 2010 Revision. New York.

2 Despommier, Dickson D. The Vertical Farm: Feeding Ourselves and the World in the 21st Century. New York: Thomas Dunne, 2010. Print.

3 Li, Sophia. “Has Plant Life Reached Its Limits?” New York Times. New York Times, 20 Sept. 2012. Web. 15 Oct. 2012.

4 Lowrey, Annie. “Experts Issue a Warning as Food Prices Shoot Up.” The New York Times. The New York Times, 4 Sept. 2012. Web. 15 Oct. 2012.

5 Hix, John. 1974. The glass house. Cambridge, Mass: MIT Press.

6 Ives-Halperin, John and Kangas, Patrick C. 2000. 7th International Conference on Wetland Systems for Water Pollution Control. International Water Association, Orlando, FL. pp. 547-555. http://www.enst.umd.edu/files/secondnature.pdf

7 Concordia University (2011, October 4). From compost to sustainable fuels: Heat-loving fungi sequenced. ScienceDaily. Retrieved October 18, 2012, from http://www.sciencedaily.com/releases/2011/10/111003132441.htm.

Ear Buds, Not Ear Enemies

A closer look at which earphones are better for listeners.

How many times have you been in the library, on the T, or at the gym and received angry looks from the people closest to you because they can hear the music through your headphones? While listening to your favorite playlist may be the most enjoyable way to pass time, it is not fun for your ears or those around you.

Our ears and the auditory system are delicate structures. The ear is set up in three parts: the outer ear, the middle ear, and the inner ear. The outer ear is the visible lobe into which we place our ear buds. The middle ear is the ear canal, an air-filled pathway that transports sound to the inner ear. The inner ear has two components, one of which has an air medium, and the other, a fluid medium. The goal of the inner ear is to transduce the sound waves into physical fluid waves in order to generate the sensation of sound. Music travels through the ear canal as sound waves and is then transformed into physical waves once it enters the fluid-filled inner ear.1 The waves of fluid move the sensory receptors of the ears, which are known as hair cells. Hair cells are cylindrical cells that sway and bend in sync with the movement of the fluid in the inner ear; their position determines whether or not a signal is sent to the brain to alert it to sound.1 Like the hairs on your head, hair cells are easily broken. If the waves are too strong or intense (as with very loud music), the hair cells can be permanently damaged. Unlike the hairs on your head, they do not grow back.1 As the number of hair cells decreases, so does your ability to hear.

It is important to protect our auditory systems. A high functioning auditory system allows us to truly appreciate every note of a song, hear a friend calling our name down the street, or hear a car honk so we know not to cross the street.

There are many ways we can protect our ears, and one of the easiest and most practical is picking the right pair of headphones. There is much debate about which type is best: “in-ear” headphones, such as iPod ear buds, or “on-ear” headphones, such as Beats by Dr. Dre.2 Currently, many suggest that “on-ear” headphones are better for your hearing because they allow for the passage of more air, but a definitive winner has yet to be chosen. While some may be far beyond the price range of a college student, such as the Bose noise-cancelling headphones, there are many affordable options.3

- AKG K 311 Powerful Bass Performance, 19.95 – affordable, with a semi-open design that allows air flow, adding comfort and helping prevent damage by letting in more external environmental sound.3

- Logitech UE 350, 59.99 – automatically dampens sound without losing quality.4

- Beyer Dynamic DTX300p, 64.00 – an on-ear design that keeps the sound from building up too much in the ear canal.3

However, no matter the headphones you have, it is important to remember to keep the volume down. This is for both the sake of your ear and to make sure the lady sitting across from you does not start screaming, which would also be bad for your hearing.

References

1 2012. Auditory System. Science Daily, Retrieved from http://www.sciencedaily.com/articles/a/auditory_system.htm

2 Grobart, Sam (2011, 12 21). Better Ways to Wire Your Ears for Music. The New York Times, Retrieved from http://www.nytimes.com/2011/12/22/technology/personaltech/do-some-research-to-improve-the-music-to-your-ears.html?pagewanted=all&_r=0

3 2012, 01 20. Which Headphones Should I Use? Deafness Research UK, Retrieved from http://www.deafnessresearch.org.uk/content/your-hearing/looking-after-your-hearing/which-headphones-should-i-use/

4 Shortsleeve, Cassie (2012, 12 10). The Coolest New Headphones. Men’s Health, Retrieved from http://news.menshealth.com/best-headphones/2012/09/10/

Time to Activate

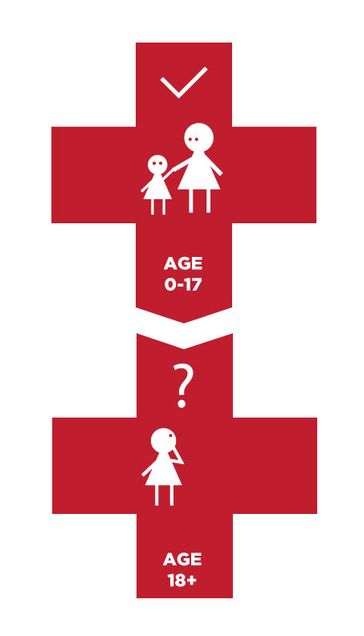

Sick children are cared for by parents, but what happens when they grow up?

Many children in the United States live with chronic disease; Type 1 Diabetes, sickle cell, arthritis, asthma, and cystic fibrosis are common diagnoses. Yet once children grow into adulthood and age out of the pediatric healthcare system, they often find themselves unprepared to advocate for their own medical needs. In the pediatric healthcare system, the child’s perspective is always important, although ultimately the child’s guardian is legally responsible (e.g. signing informed consent for a procedure). Once the child reaches the age of 18 (the age of majority in most states), the child assumes full legal responsibility for choosing and consenting to treatments. With the sudden diminishment of the caregiver’s legal authority, the responsibility becomes the child’s to make the best decisions for his or her own body and lifestyle.

Without a gradual introduction to the realm of consent, a child cannot possibly be expected to understand the complexities of being a medical advocate. Effective transition is different from efficient transfer, where transition is the long-term accumulation of a child’s medical responsibility and transfer is simply the physical move to an adult care facility.1 Merely sending a child and his medical record to a new adult provider is not synonymous with actually preparing the child to care for his chronic illness into adulthood. Ultimately, the latter will be most advantageous to children, families, physicians, and the medical system at large.

Getting Started

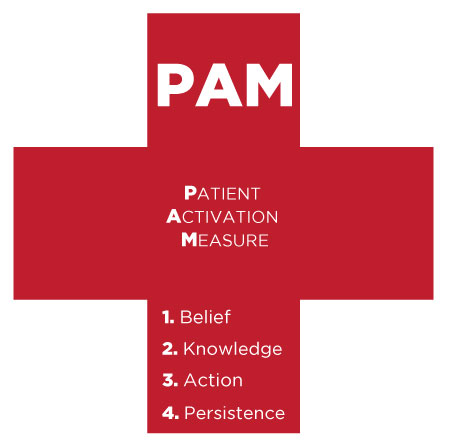

“Patient activation” as the mechanism for achieving meaningful and long-lasting involvement in pediatric chronic illness is a much-debated topic. Patient activation is defined by the patients’ completion of four stages: 1) understanding that their role is paramount, 2) having the appropriate understanding and certainty to make a decision, 3) having taken actual action towards health goals, and 4) persisting in the event of adverse situations.2 It has long been known that actively engaged patients report improved health outcomes and that successful chronic illness management skills can increase function, as well as minimize pain and healthcare costs.3,4 The benefit to pediatric patients would be tremendous – particularly to their long-term physical, social, and emotional functioning. The problem remains: even with patient activation conceptualized, how can pediatric patients be ‘activated’?

Will Patient Activation be able to give sick children a more active say in their health once they are older? Photo Credit | Robert Lawton via Wikimedia Commons

The solution is a multifaceted approach, targeting the child, family, medical team, and medical culture at large. If medicine can be increasingly viewed as “consumer driven,” individuals may be less likely to accept doctors’ opinions without ensuring their own voice has been heard.2 For example, few people would tolerate going to a restaurant and being told what to order. Yet medical decisions are often made without the patient’s complete understanding.1

Medical education must be progressive and developmentally appropriate for patients and their families; too much too fast would only serve to be overwhelming. Nevertheless, patients and families must be empowered. How this is done inevitably depends on the disease, age of the child, cultural considerations, and more. For example, a child living with arthritis needs an emphasis on physical activity as he matures. On the other hand, a child living with HIV should be educated about safe sex practices only when it is developmentally and culturally appropriate, involving the family along the way. Additional concerns, such as procuring health insurance with a pre-existing condition and medication prescription, are important to discuss with the child and family as transition begins to further success.

Achieving Independence

How can this success be measured? The Patient Activation Measure (PAM) was developed by Dr. Judith Hibbard, a professor of Health Policy at the University of Oregon, to assess patients’ degrees of involvement in their care. There are four subscales, which include ‘Believes Active Role is Important’ (e.g. “When all is said and done, I am responsible for managing my health condition”), ‘Confidence and Knowledge to Take Action’ (e.g. “I understand the nature and causes of my health condition(s)”), ‘Taking Action’ (e.g. “I am able to handle symptoms of my health condition on my own at home”), and ‘Stay the Course under Stress’ (e.g. “I am confident I can keep my health problems from interfering with the things I want to do”). 2 It is evident that these four core values of patient activation underscore the intersection of belief that the patient is an important actor in their medical care, thorough education of disease and lifestyle, and an active role. In a study with an adult population, the PAM was significantly related to health-related outcomes. Patients who score as being highly activated also experienced improved health-related outcomes when compared with their counterparts.5

The PAM is a useful tool for measuring patient activation, but the problem persists – how do we meaningfully activate pediatric patients? The answer in part lies in transition clinics, an innovation that patients have been benefiting from for several years. Transition clinics occur within a given specialty (e.g. Rheumatology) with the goal of providing additional resources to prepare a child for adult care: primarily educational programs and skills training.6 The age at which a child becomes engaged in the transition clinic varies in light of the age at diagnosis, social support, disease severity, and developmental stage. For instance, a child diagnosed with an illness at age fifteen should not begin the transition clinic right away, while another child diagnosed with the same illness at age eight may find the transition clinic appropriate at age fifteen.

Ultimately, the issue in part returns to the present medical culture: how doctors are trained to communicate with patients and the historically paternalistic medical model. It is plausible that patients who have a strong and respect-filled relationship with their doctors will have more positive medical experiences than those with poor patient/physician relationships. Thus, strengthening this relationship should go in tandem with encouraging and initiating a transition process for pediatric patients.

References

1 Peter et al., 2009. Transition From Pediatric to Adult Care: Internists' Perspectives. Pediatrics. 123(2):417-423.

2 Hibbard et al., 2004. Development of the Patient Activation Measure (PAM): Conceptualizing and Measuring Activation in Patients and Consumers. Health Serv Res. 39(4 Pt 1): 1005–1026.

3 Von Korff et al., 1997. Collaborative Management of Chronic Illness. Annals of Internal Medicine. 127(12):1097–102.

4 Glasgow et al., 2002. Self-management Aspects of the Improving Chronic Illness Care Breakthrough Series: Implementation with Diabetes and Heart Failure Teams. Annals of Behavioral Medicine. 24(2):80–7.

5 J. Greene and J. H. Hibbard. 2011. Why Does Patient Activation Matter? An Examination of the Relationships Between Patient Activation and Health-Related Outcomes. Journal of General Internal Medicine.

6 Crowley, et al. 2011. Improving the transition between paediatric and adult healthcare: a systematic review. ADC. 1:1-6.

Jennie David (CAS 2013) is a psychology major from Nova Scotia. She hopes to use her personal experience with Crohn's Disease to support and inspire chronically ill children as a future pediatric psychologist. Jennie can be reached at jendavid@bu.edu.

Tagged as: medicine, patient care, psychology, pediatrics

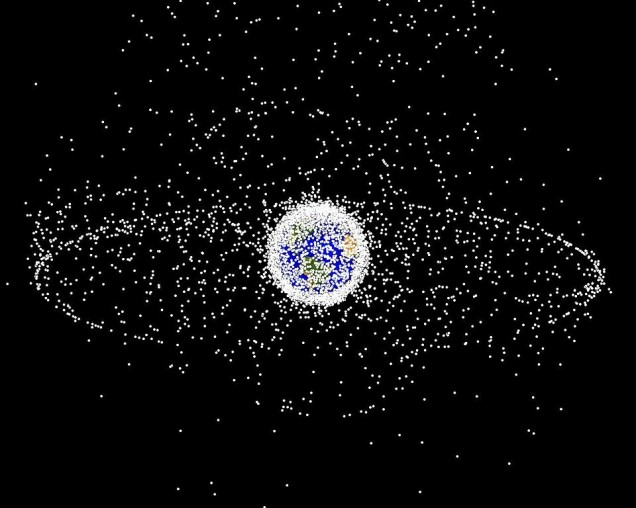

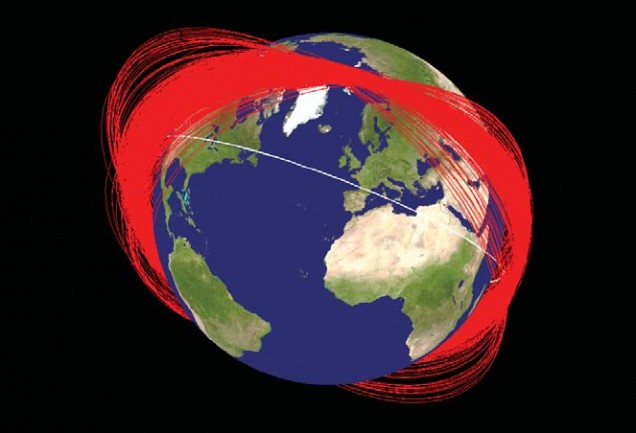

Space Debris: A Universal Problem

Many of the objects surrounding Earth are just man-made waste.

At this very moment, several million useless pieces of “space junk” orbit our planet, presenting an alarming concern for not only the United States, but nearly every country on Earth. Technology is innately tied to space; with the growth of cell phones, GPS systems, and satellite television and radio, consumers understand their modern life requires functioning satellites. However, nonfunctioning satellites and their fragments, which have been left orbiting our planet for decades, pose a grave risk to the space technology that supports our current way of life. Primarily, we face three challenges that must be dealt with. First, we must take measures to reduce debris left in space from now on. Second, we should ensure we do not compromise our national security interests in space. Finally, debris left by past space missions must be removed in order to prevent collisions with current and future space endeavors.

A Call to Action

Each of these challenges requires a combination of technical ingenuity, international cooperation, and financial commitment from multiple nations. The alternative is failure, which is grave for everyone. Every new piece of debris in space increases the potential of "Kessler Syndrome," which arises when collisions between fragments of space debris and larger satellites create exponentially higher amounts of space debris. Kessler Syndrome could leave our satellite networks and space exploration programs useless for decades. For example, China’s destruction of their own nonfunctioning satellite in 2007 yielded more than 3,000 pieces of shrapnel, which have since endangered both the International Space Station and the space shuttle Atlantis. 1,2 This single action effectively undid nearly all the progress made to reduce space debris for the last few decades.
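The runaway character of Kessler Syndrome described above can be illustrated with a deliberately simplified toy model. The Python sketch below is not a physical simulation: the function name and every parameter value are hypothetical, chosen only to show how debris that breeds more debris grows geometrically when the yearly number of collisions is proportional to the amount of debris already in orbit.

```python
def simulate_cascade(initial_fragments: float,
                     collision_rate: float,
                     fragments_per_collision: float,
                     years: int) -> list[float]:
    """Toy model of a debris cascade.

    Each year, the expected number of collisions is assumed to be
    collision_rate * (current fragment count), and every collision is
    assumed to add fragments_per_collision new fragments. All values
    are illustrative assumptions, not measured data.
    """
    counts = [float(initial_fragments)]
    for _ in range(years):
        current = counts[-1]
        collisions = collision_rate * current
        counts.append(current + collisions * fragments_per_collision)
    return counts


if __name__ == "__main__":
    # Hypothetical inputs: 1,000 fragments, each with a 0.1% yearly chance
    # of a collision that produces 100 new fragments.
    for year, count in enumerate(simulate_cascade(1000, 0.001, 100, 10)):
        print(f"year {year:2d}: {count:,.0f} fragments")
```

With these made-up inputs the fragment count grows by ten percent a year, so it compounds rather than rising by a fixed amount. That compounding is the feature that makes each new piece of debris more dangerous than the last.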

Following China’s actions in 2007, the international community has made progress in defining some standards on space debris, although there is still substantial room for improvement. The Orbital Debris Program Office within NASA has worked on guidelines to reduce space debris. NASA and the Department of Defense have also been working together to track some of the 500 million pieces of space debris orbiting Earth.3 These efforts will be expanded as part of NASA's 2013 budget proposal to shift funding toward "orbital debris and counterfeit parts tracking and reporting programs;" the Department of Defense is beginning contract bids with aerospace and software companies (like Lockheed Martin and Raytheon) over new "Space Fence" software to track orbiting particles.4,5

Satellites also must be able to switch from the popular Low Earth Orbit (LEO) zone to a "graveyard" orbit when they are no longer operational. This requirement helps prevent older satellites from becoming inadvertent hazards, which is precisely what happened in 2009 when a defunct Russian satellite collided with a US communications satellite over Siberia.6 But while US measures to prevent debris have helped stop US satellites from becoming destructive, about two-thirds of the debris in space comes from non-US launches. Therefore, a domestic approach to this issue is not enough; we must work on international agreements to reduce space debris.

Going Global

Some efforts have already been made in this field. The Inter-Agency Space Debris Coordination Committee (IADC) and the United Nations Committee on the Peaceful Uses of Outer Space (COPUOS) have provided a forum for many of the great space-faring nations (including the US, Russia, and China) to discuss the issues surrounding space debris. Now, the European Union has published an International Code of Conduct for Outer Space Activities. This code serves as a framework from which multilateral negotiations can begin. However, the United States has some concerns with potential security risks.

Many of the efforts within the code relate to preventing incidents like the 2007 Chinese satellite destruction. In doing so, the code also prevents states from pursuing militarization of space, which has led to domestic concerns in the US. The intentional destruction of enemy satellites could jumpstart Kessler Syndrome and create a cloud of debris around the Earth. Yet concerns have been repeatedly raised against the international code. Many fear the code is "disarmament of space" in disguise.

Concerns for the Future

The United States Secretary of State has responded to these claims by emphasizing that the proposed agreement would not be legally binding. Since the agreement would not have the force of law, it would technically not be a treaty. Therefore, Senate approval is not necessary. Regardless, it is clear any international agreement would need to tread lightly on the topic of national security. After all, if the United States is having security concerns with the wording of the agreement, we can surely expect other military powers, like China, to raise objections. However, the code's provisions are consistent with all existing practices of the National Aeronautics and Space Administration, Pentagon, and State Department.6

The Vanguard 1 satellite, defunct since 1964, will remain in orbit for 240 years. Credit | NASA via Wikimedia Commons.

However, such international agreements, aimed at reducing space debris and allowing each nation the right of protection in space, are still controversial and will take time to negotiate. While removing debris is an undeniably positive ambition, the primary obstacle is cost. None of the existing ideas for removing space junk is cost effective, but new ideas lend hope for a more affordable solution. Ideas like electro-dynamic tethers, which pull satellites into their inevitable destruction in Earth's atmosphere, and nanosatellites, which throw nets over debris and pull it into the atmosphere, have the potential to reduce the amount of debris in orbit while still being cost effective, but they are far from deployment.7

Allowing NASA to have the resources required to battle this epidemic is a key way to advance these ideas. The Obama administration has highlighted the importance of these measures in their national space policy.8 NASA has specifically created a budget for researching and tracking space debris, despite having their overall budget reduced from 4.4% of the total federal budget (in 1966) to less than 0.5%.9 By allocating funding to this specific field, we can begin taking steps to prevent space debris from destroying our modern way of life.

An Optimistic Outlook

Space debris is a problem that has only grown worse over time, with every collision in space creating hundreds of thousands of new potential disasters. Through international cooperation, domestic understanding, and technological investment we can solve this problem. We can begin by highlighting the importance of this issue and not allowing it to escape the public eye. Our dependence on space is something we often take for granted, but it hangs precariously on the edge of disaster if we do not answer this challenge. However, we will choose to answer the challenge, not because it is easy, but because it is hard.

References

1 Malik, T. (2012, 01 29). Iss dodges space debris from Chinese satellite. Huffington Post. Retrieved from http://www.huffingtonpost.com/2012/01/30/iss-dodges-debris-from-de_n_1241167.html

2 Schwartz, E. I. (2010, 05 24). The looming space junk crisis: It’s time to take out the trash. WIRED Magazine, Retrieved from http://www.wired.com/magazine/2010/05/ff_space_junk/all/1

3 NASA Orbital Debris Program Office. (2012, 03). orbital debris frequently asked questions. Retrieved from http://orbitaldebris.jsc.nasa.gov/faqs.html

4 National Aeronautics and Space Administration. (2012). Fy2013 president's budget request summary. Retrieved from website: http://www.nasa.gov/pdf/659660main_NASA_FY13_Budget_Estimates-508-rev.pdf

5 Staff. (2012, 03 13). New debris-tracking 'space fence' passes key test. CBS News. Retrieved from http://www.cbsnews.com/8301-205_162-57396451/new-debris-tracking-space-fence-passes-key-test/

6 Broad, W. J. (2009, 02 11). Debris spews into space after satellites collide. The New York Times. Retrieved from http://www.nytimes.com/2009/02/12/science/space/12satellite.html

7 Zenko, M. (2011). A code of conduct for outer space - policy innovation memorandum no. 10. Council on Foreign Relations, Retrieved from http://www.cfr.org/space/code-conduct-outer-space/p26556

8 Office of the President of the United States. (2010). National space policy of the united states of America. Retrieved from website: http://www.whitehouse.gov/sites/default/files/national_space_policy_6-28-10.pdf

9 Rogers, S. (2010, 02 01). NASA budgets: Us spending on space travel since 1958 updated. The Guardian. Retrieved from http://www.guardian.co.uk/news/datablog/2010/feb/01/nasa-budgets-us-spending-space-travel

For the Love of Language

An exploration of human language and how it develops.

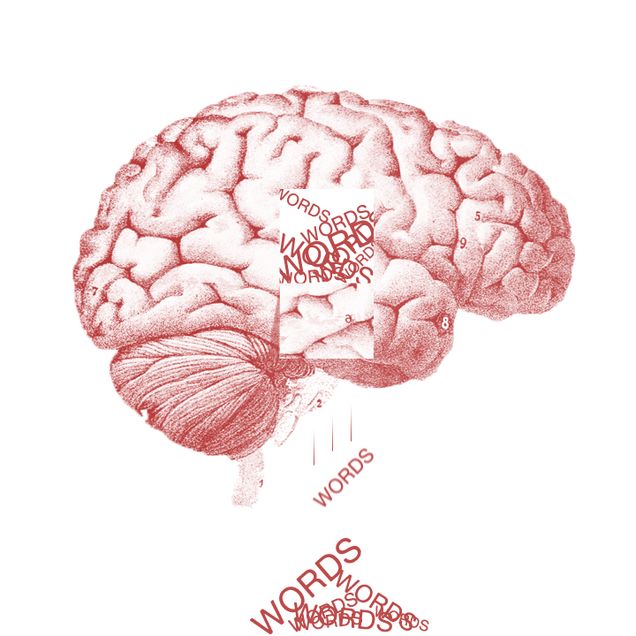

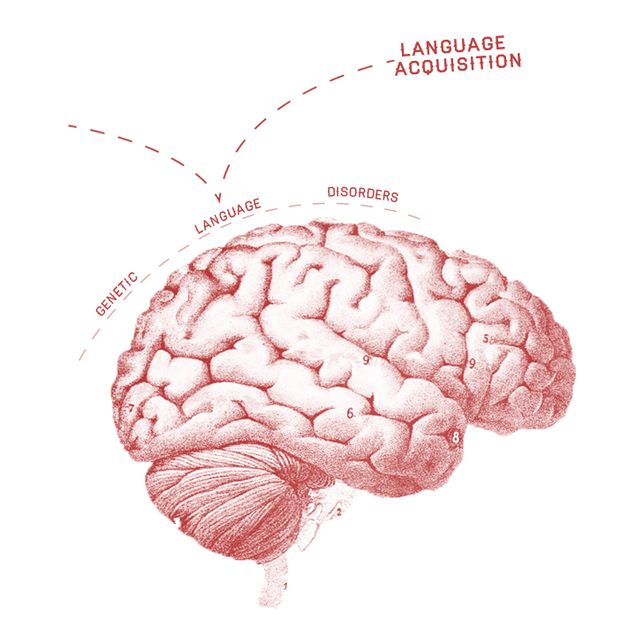

Imagine that you are in a foreign country. You get into a taxi and begin to explain where you want to go. But, with frustration, you realize that the driver does not understand you. Speaking slowly and repetitively, you struggle, finally communicating your intended destination. Such a language barrier can frequently be encountered abroad, but can you imagine a lifetime without language? You are neither mute nor deaf. Rather, you have lost the ability to record your experiences with descriptive words, to convey your ideas to others in speech or writing, and even to speak to yourself as you go about your day. Surprisingly, an ability as fundamental to humans as language must still be acquired over time. Hereditary language disorders and abnormal upbringings reveal the role that genetics and environmental input play in developing language.

Talking the Talk

Language acquisition is one of the few mental challenges that children master effortlessly and without instruction.1 Genetics provide all healthy children with language-specific circuitry so that mere exposure to environmental input leads to rapid acquisition. With adequate environmental input, children can cultivate an extensive vocabulary and acquire a mental grammar of the language.2 Vocabulary aside, a child’s language comprehension (but not speech) approximates adult competence by age five.3 However, with language, timing is essential. Complete growth of the brain's language areas occurs only during the “critical period”, a developmental time between ages two and seven. Language is most easily acquired throughout the duration of this period, during which the brain’s neural circuitry is easily modified in response to environmental input.4 Outside of the critical period, the ability to acquire language decreases rapidly.2 Children who are exposed to their first language as teenagers (e.g., feral children) develop a poor vocabulary and are unable to master complex grammar rules including sentence structure, plurals, and past tense usage. Feral children produce sentences like “I hit ball” or “cupboard put food”. Though these individuals are genetically normal, no amount of experience, instruction, or encouragement can allow for fluency to develop.2 Thus, both proper genetics and early environmental input are necessary to acquire language.

Language and Genetics

Genetic language disorders demonstrate that language needs certain neural foundations. Specific Language Impairment (SLI) is a genetic disorder that delays and impairs language use and comprehension. It is inherited through a dominant gene and is passed on to fifty percent of children with an affected parent. Individuals with SLI perform normally on nonverbal tests of intelligence, but they display slow and ungrammatical speech throughout their lives. They may say sentences like, “the boy eat three cookie.” Their speech lacks language basics that any child with the necessary genes can easily master. Sentences like these occur because individuals with SLI are unaware of, or unable to identify, the rules governing word formation. These deficits are not due to the linguistic environment created by an affected parent, as some of these individuals have siblings with normal language. Even with intensive language therapy and correction, these individuals do not demonstrate improvement in their language abilities. For example, these children could not pluralize a made-up word such as “wug”, whereas most children over the age of seven reliably produce the correct plural, “wugs”.1 This evidence suggests that a genetic flaw in SLI individuals limits neurological development in areas of the brain associated with language. Yet, this genetic abnormality spares the development of other cognitive areas. It can therefore be concluded that, without proper genetics, general intelligence and adequate environmental input are not enough to master language.

Williams syndrome is another genetic disorder, one in which language develops in spite of low intelligence, indicating that language acquisition is the result of language-specific genes. Williams syndrome is caused by a deletion of genetic material on chromosome 7, which results in significant mental retardation. Although their linguistic knowledge develops more slowly and to a lesser extent than that of their peers, individuals with Williams syndrome still develop vocabulary and language that are impressive considering their cognitive deficits. Affected individuals can speak fluently and utilize concepts such as plurality and past tense usage.5 Because language acquisition can proceed relatively well even with significant mental retardation, language must be genetically coded separately from general intelligence.

Nature and Nurture

The development of early language in deaf children demonstrates to what point acquisition can progress with proper genetics but inadequate environmental input. Deaf children born to non-deaf parents may not be exposed to sign language during the critical years of language development. Even without linguistic input in their environments, they still invent a homemade system of signs so that they can communicate with their parents. “Home sign” systems found among a group of deaf children shared common characteristics with signed and spoken language. Children made eye contact and used a system of abstract gestures rather than simply pointing to the objects they were talking about. The gestures were signed in series separated by pauses just like sentences of any spoken or signed language. Finally, the gestures conveyed a consistent meaning whenever they were used. However, because of a deficit in environmental input, these children never developed the ability to construct complex sentences.1 Later exposure is ineffective because these children are often near the end of the critical period. Yet, it seems extraordinary that communication, similar among these children, developed without linguistic input. This phenomenon suggests that genetics provides a strong foundation for language acquisition, but environmental input is essential to reach adult competence with language.

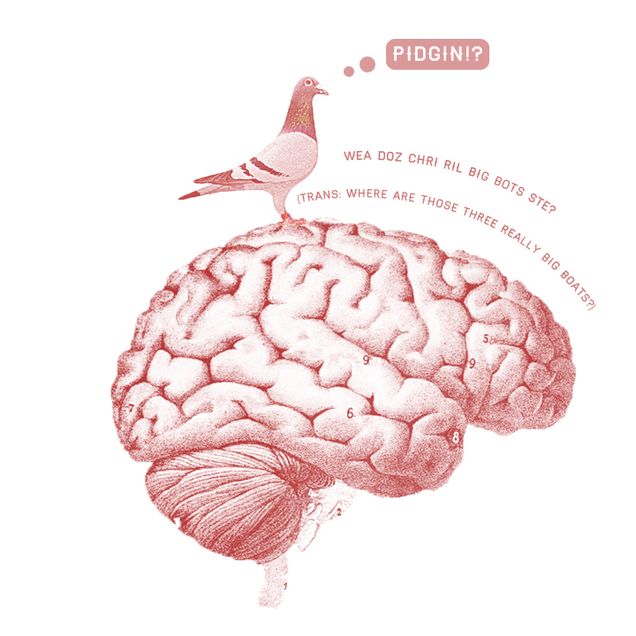

The development of Hawaiian Creole constitutes an example of how the genetic basis of language allows children to cope with imperfect (but adequate) input. In the late 1800s, a need for immigrant laborers on Hawaiian sugar plantations created an environment for the development of a new language. Workers from many different countries needed a language to communicate among themselves and with the English-speaking plantation owners. The solution was a makeshift communication system called a pidgin. Pidgin speakers use words from the socially or economically dominant language to form sentences. Yet, these sentences lack consistent word order, use of function words (articles, prepositions, etc.), and complex sentence structure. When exposed to the pidgin, the children of these immigrants did something extraordinary: they developed a new, complex, and consistent language now called Hawaiian Creole. Even though no extended period of time allowed adult speakers to make these changes, their children easily constructed a consistent and complex language just by hearing the pidgin during their development.6 There are several hypotheses for why such a rapid and complex transformation occurred. First, the children may have drawn on the sentence structure of English as a basis for Hawaiian Creole. This, however, seems unlikely because pidgin speakers had little contact with English speakers. Moreover, its sentence structure is unlike English. Alternatively, children may have drawn on structures from the native language of their parents. However, children rarely learned the languages of their parents due to intermixed marriages and other social settings in which pidgin was the only common language. A rapid, consistent and complex transformation of the pidgin grammar among all children suggests that specific default properties of language exist in genetics. 
During the critical period, children have access to innate knowledge of language and impose it on the input they receive, even when that input is imperfect. In fact, further study of pidgins from around the world has shown that Hawaiian Creole shares striking similarities in grammar with other creoles.6 Pidgins may be the clearest evidence for an innate and highly specific knowledge about language, which allows children to create rule-based, complex structure when little or none exists.

Concluding Words

Even though language is fundamental to humans, complete language development is not certain. Fortunately, the study of underdeveloped language provides a clear basis for understanding the requirements of acquisition. Hereditary language disorders demonstrate that the necessary foundations for language are built into our DNA. Other linguistic studies of language development in deaf children and the formation of pidgins highlight the role of environmental input. However, even though acquiring complex language is uniquely human, it is unlike all other human abilities and inventions. Language is neither the result of culture nor of high general intelligence or conscious, systematic study. In fact, it is the result of a complex interaction between genetics and environmental input. Though complete acquisition is not certain, to some extent, all humans can communicate. Language is so fundamental and innate that we can never truly know what it is like to exist without language, no matter where in the world we are.

References

1Jackendoff, R. (1994). Patterns in the Mind: Language and Human Nature. New York, NY: Basic.

2Alrenga, P. Critical Period Effects in Language Development. Class Lecture. Language and Mind. Boston University, Boston, MA.

3Yang, C.D. (2006). The Infinite Gift: How Children Learn and Unlearn the Languages of the World. New York: Scribner.

4Squire, L. R. (2008). Fundamental Neuroscience. Amsterdam: Elsevier / Academic.

5Pinker, Steven. (2007). The Language Instinct: How the Mind Creates Language. New York: HarperPerennial Modern Classics.

6Bickerton, Derek. (1983). Creole languages. Scientific American: 116-22.

Lions, Tigons, and Pizzly Bears, Oh My!

Are human-mediated changes to the environment destroying species?

His father was an African prince, his mother, a fiercely independent Indian huntress. By all laws governing society, geography, and biology, Hercules should have never been born. Yet in 2002, the newborn cub rose to fame as the largest hybrid feline at the Jungle Island Zoo in Miami, Florida. The lion-tiger mix inherited the best of both worlds, growing into a 12-foot-long, 900-pound carnivore with the capability of running 50 miles per hour and consuming 100 pounds of meat in a single sitting.1 His caretakers insisted that the birth was a mere “accident”, but can such hybridization ever be truly natural?

What is Hybridization?

Hybridization, the interbreeding of two related yet genetically distinct species, is estimated to occur in at least 25% of plants and 10% of animals.2 A hybrid displays traits intermediate between those of its father and those of its mother, indicating either the creation of new characteristics or the introgression of genes from one taxon directly into another.3 Likewise, the species is identified by a portmanteau system of naming in which the first half consists of the father’s species, and the second of the mother’s species.4 The hybrid lineage may end with this newborn, the first member of the F1 (first hybrid) generation. For successful generations of hybrids to occur, the two parent species must share at least some breeding traits, be geographically and reproductively available to each other, and produce viable and fertile offspring. Often, members of the F1 generation will mate with each other as opposed to either of the parent species, thereby laying the foundation for the establishment of a brand new species with selective traits.3

Natural Versus Anthropogenic

Hybridization in the wild, especially among plant species, is essentially an indication of disturbance.5 Anthropogenic, or human-caused, disturbances have facilitated an abnormal rise in hybrid mating.6 Such alterations to the environment could result in decreased genetic diversity among species as unique alleles are lost to a homogenized gene pool. Given enough time, the fit hybrids have the potential to replace their weakly adapted ancestors.2

It’s Getting Hot in Here…

One of the major anthropogenic factors affecting not only species hybridization, but the ecosystem as a whole, is an increase in global temperatures due to carbon emissions. The influence of the greenhouse effect, coupled with levels of carbon approaching 400 ppm, has initiated the migration of species from warmer to cooler climates (and vice versa) as organisms attempt to maintain their ideal temperature ranges.7 As organisms encounter similar species in their new habitats, they may reproduce with them. A substantial number of natural hybridizations occurred during the post-glacial Pleistocene era when carbon levels exceeded those of today.8

The pizzly bear is a polar bear-grizzly bear hybrid. Photo Credit | Messybeast via Wikimedia Commons

The most recent example of temperature-mediated hybridization is between polar bears (Ursus maritimus) and grizzlies (Ursus arctos), producing a hybrid tentatively referred to as a “pizzly” or “grolar” bear. In 2006, an Inuvialuit hunter from Victoria Island shot what appeared to be a polar bear. However, the animal possessed characteristics of a grizzly, with brown patches of fur, long claws, a concave facial profile, and a humped back. DNA testing confirmed the individual to be the first documented case of a polar-grizzly hybrid in the wild, a genetically possible but improbable phenomenon given the differing habitat ranges and breeding rituals of the two related species.7 Human-induced climate change, which has caused some animals to shift their range northward, may have driven grizzly bears into polar bear territory. Grizzly bears have been recorded in Canada’s western Arctic periodically throughout the last 50 years. An alternate theory proposes that polar bears have been driven southward by the melting of the ice caps, bringing them into closer contact with grizzly bears. Three more hybrid individuals were reported after 2006, and scientists expect more encounters between grizzlies and polar bears in the future.

Habitat Destruction and Fragmentation

Deforestation and urbanization result in decreased habitat area, leading to smaller populations and increased competition between individuals. Habitat destruction may either isolate a single region from a larger range or combine the ranges of several species by removing a natural barrier such as a mountain range or river.3 Facing the deterioration of their territory, organisms extend their ranges to overlap with those of other individuals, leading to a higher density of individuals per unit space. Along the borders where one territory overlaps another, individuals are forced to share resources including food, shelter, and potential mates. Natural selection predicts that the most successful reproducers will be those most fit for the new environment, whether or not the parents are of the same species.

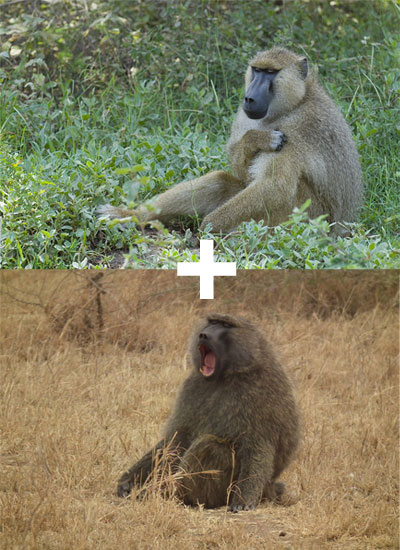

Yellow baboons (top) and Anubis baboons (bottom) only started interbreeding recently. Credit | Wikimedia Commons

For example, yellow baboons (Papio cynocephalus) and Anubis baboons (Papio anubis) in Kenya did not interbreed 30 years ago; however, the proportion of hybrids has increased over time with the construction of tourist lodges.2 Historically, the yellow baboons occupied the south and east territories and the neighboring Anubis lived in the north and west. Typically, baboons stay close to their natal locations, separated from other populations by physical obstacles like long stretches of waterless land. However, researchers observed an alternate immigration pattern among Anubis baboons that were foraging from tourist lodges. The immigration of Anubis baboons, coupled with their successful reproduction, led to hybridization zones between the two baboon species. The hybrids are not selected against, as they would be if their genetic fitness were lower than that of their predecessors. Male hybrids undergo puberty earlier in life than the purebred males and, as a result, have higher reproductive and competitive success. Researchers noted an increase in overall Anubis alleles in yellow baboon populations as well as in the number of offspring resulting from mating between hybrids. By 2000, almost one third of the previously homogeneous population had a mixed genome.2 Clearly, hybridization poses a great threat to the genetic integrity of indigenous populations, especially if interbreeding occurs with a non-native species.

Aliens among Us

Non-native, or invasive, species are particularly threatening to genetic diversity because they carry foreign alleles and often lack predators and diseases that would naturally eliminate them from the population. An invasive species may be more aggressive than its indigenous cousins, and thus more successful in finding mates. Genetic pollution from the invasive species may lead to homogenization or even extinction of the local population as its natural alleles are replaced.3 An invasive species, which tends to increase exponentially when colonizing a new territory, also has the potential to outnumber the area’s native individuals.

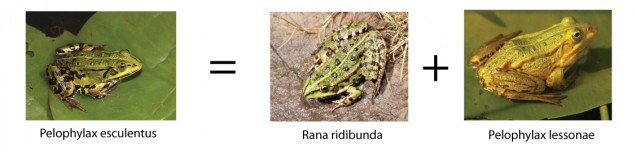

Introduction of an alien species may be accidental, as was the case with the water frogs of the Pelophylax esculentus complex, which is derived from a cross between P. lessonae and P. ridibundus.9 Translocations of water frogs into new habitats have been associated with stocking of fish populations and garden ponds. The genetic integrity of native frog populations has been compromised by the recurrent introductions of alien species, and hybridization persists at a steady rate. As the number of hybridogenetic individuals increases, scientists have been able to identify three subsets and potential new species of water frog, each adapted to a different geographic location. The hybrids have been shown to succeed in different environments given their heterozygotic nature, suggesting that the hybrid may eventually overtake one or both of its parent species.9 What does this mean for the future of this species, and so many others that have been genetically altered as a result of anthropogenic hybridization?

Positives and Negatives

Not all effects of hybridization are negative; in fact, many individuals can benefit from reproducing with other species. An intermediate amount of genetic drift in a population corresponds to increased genetic diversity, allowing for new fitness characteristics including disease resistance and higher habitat tolerance.9 Hybridization can help to prevent extinction of rare and endangered species by preserving their unique homozygous alleles within the heterogeneous genome of the F1 generation. Yet the strong adaptive capability of hybrids can also work against the parent species if environmental conditions favor hybrids and thus change the relative fitness of the entire population. In this case, the presence of a very fit F1 generation may drive one or both parents to extinction.6

Still, there is no guarantee that a newborn hybrid will survive past birth, much less live to reproduce and replace its parent species. Many individuals suffer from embryonic fatality or premature death due to fatal recessive or deleterious mutations.9 Those that survive are often sterile, either genetically or physically, and therefore unable to reproduce and continue their lineage.3 In addition to mutations, hybrids may also inherit less-than-desirable traits from the weaker of the parent species, resulting in a reduced capability to handle physical stress. A poorly adapted hybrid may also display lower disease resistance, ineffective foraging ability, or reduced predation survival compared to its parents.10 Even though some hybrids inherit the “good genes,” there is an equal potential for a mixed genome to lead to the individual’s demise.11 Interfering with the process of natural selection certainly will not help anyone: humans, natural species, or anthropogenically-created hybrids.

A Mixed Future

What constitutes an organism as a hybrid? Biologists still lack an explicit operational rule to define species boundaries based on genetic tree information.8 While some hybrids have been classified as new species, such as the red wolf, others have been denied the rights to conservation based on lack of genetic integrity.9 The decision whether to preserve a species or not is largely influenced by the method of hybridization: while natural hybrids are eligible for protection, anthropogenic hybrids are not.9 Yet in the case of endangered species, perhaps conserving hybrid offspring will be crucial in preserving their distinct alleles for the future. In either case, management of population biodiversity must be sustainable.10 Going forward we must ask ourselves what type of selection, natural or anthropogenic, will ensure the continuation of a species.

References

1Mott, M. (2005, August 5). Ligers make a "dynamite" leap into the limelight. National Geographic. Retrieved March 10, 2012, from http://news.nationalgeographic.com/news.

2Tung, J., Charpentier, M., Garfield, D., Altmann, J., & Albert, C. (2008). Genetic evidence reveals temporal change in hybridization patterns in a wild baboon population. Molecular Ecology, 17, 1998-2011.

3Largiader, C. (2007). Hybridization and introgression between native and alien species. Ecological Studies, 193, 275-288.

4Paugy, D & Leveque, C. (1999). Taxonomy and systematics. ASFA 1: Biological Sciences and Living Resources. pp. 97-119.

5Lamont, B., He, T., Enright, J., Krauss, S., & Miller, B. (2003). Anthropogenic disturbance promotes hybridization between Banksia species by altering their biology. J. Evol. Biol., 16, 551-557.

6Keller, B., Wolinska, J., Manca, M., & Spaak, P. (2008). Spatial, environmental, and anthropogenic effects on the taxon composition of hybridizing Daphnia. Philosophical Transactions of the Royal Society, 363, 2943-2952.

7Roach, J. (2006, May 16). Grizzly-Polar Bear hybrid found -- but what does it mean? National Geographic, 2006. Retrieved October 28, 2011, from http://news.nationalgeographic.com/news/2006/05/polar-bears_1.html.

8Randi, E. (2010). Wolves in the Great Lakes region: a phylogeographic puzzle. Molecular Ecology, 19, 4386-4388.

9Holsbeek, G., & Jooris, R. (2010). Potential impact of genome exclusion by alien species in the hybridogenetic water frogs (Pelophylax esculentus complex). Biol Invasions, 12, 1-13.

10Aguiar, J. B., Jara, P. G., Ferrero, M., Sánchez-Barbudo, I., Virgos, E., Villafuerte, R., et al. (2008). Assessment of game restocking contributions to anthropogenic hybridization: the case of the Iberian red-legged partridge. Animal Conservation, 11, 535-545.

11Lingle, S. (1993). The hybrid's dilemma. Discover, 14, 14. Retrieved November 1 2011, from General Science Full Text database.


Psyching You Out

Interesting psychology experiments that have helped us understand ourselves.

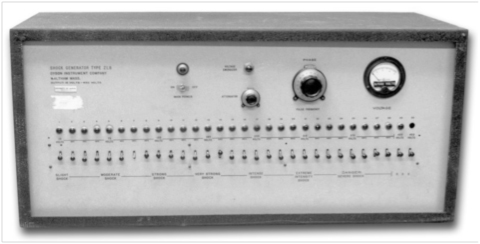

The shock box used for the infamous Milgram experiment, which revealed much about the human mind. Photo Credit | psichi.org via Wikimedia Commons

Psychology has forced humans to question ourselves: our personality, our thoughts, and our experiences. What do psychology experiments reveal about how we behave and interact with others? Years of research have yielded some of the best and the brightest classic experiments and helped us to understand who we are and who we might become.

Fake It Til You Make It

Elizabeth Loftus is a researcher who has dedicated her career to studying “false memories” and the misinformation effect. To study false memories, she conducted the ‘Lost in the Mall’ experiment, in which she asked participants to imagine and describe an experience of getting lost in a mall.1 To strengthen their recollections, Loftus gathered fake testimonies from participants’ families or friends about the imagined event. Loftus discovered that, after this prompting, those interviewed came to believe that they had actually once been lost in the mall. In a similar study, Loftus’s participants watched a car crash scene and were asked afterwards to describe what they had witnessed.2 Interviewers tested the effect of bias upon the participants by leading them with phrases such as, “When the blue car smashed into the red car…” Sure enough, depending on the leading phrase, the crash would be remembered differently by each witness.

Loftus’s discoveries prompt us to wonder the obvious – how do we know what we really remember and what we’ve colored in between the lines? Watch out for similarities between your ‘recovered’ memory and a favorite book or movie – you just might have borrowed the storyline.

Follow the Leader

After the world suffered the atrocities of WWII, people struggled to understand the lack of ethics behind the Nazis’ apathetic terror tactics. Researcher Stanley Milgram pondered this question and was determined to answer it. He designed an experiment to evaluate the importance of ethics and authority by using a ‘learner’ and a ‘teacher’ simulation.3 The subjects (teachers) were falsely led to believe that another person (a learner) was in a separate room, and that it would be their responsibility to ask the learner a predetermined set of questions. If the learner answered correctly, the teacher would move on to the next question. If, however, the answer was wrong, the teacher was instructed to give the learner an electric shock, which increased in intensity with each wrong answer. Milgram was interested to see how readily the teachers would follow directions to shock the learner even when they knew the detrimental effects.4

So what happened? Ordinary people knowingly and willingly gave their imaginary partners enormous shocks and in turn the research community was appalled by the apparent ‘following orders’ outcome. Yet just because someone has authority does not mean you must obey them at all costs! At the end of the day, follow your own moral compass and retain your right to say no. Be your own leader.

To eat, or not to eat

A big, puffy pack of marshmallows sure looks tempting. But by and large we’re able to resist, at least until we’re left alone with them. How does this ability to resist temptation develop? Were we always able to resist? In a classic developmental research study at Stanford by Walter Mischel, children were brought into a room with a marshmallow on the table and told that the researcher had to leave for a moment; if the child waited, the researcher would bring back a second one.5 If the child ate the first marshmallow, there would be no seconds. The researcher leaves, and the child’s inner debate over the treat begins. To eat or not to eat? Of the 600 children who were part of the study, only one third were able to resist temptation and obtain a second marshmallow. Many of the children tried to cover their eyes or distract themselves, but the temptation proved too great for their young age.

Can you resist temptation? You are not a little kid anymore and can eat all the cookies you want. But the experiment is a reminder that you do have those delay-of-gratification skills and can wait until you’re a little less full to chow down on some more sweets.

Psychology experiments help inform us about how our brains function and how we are wired to react and behave in various settings. We can be smart consumers of research by reading articles with a critical mind and a social awareness to understand how it relates to our immediate lives. This way, we will better comprehend our world, our friends, and our own lives.

References

1False memories - Lost in a shopping mall - Elizabeth Loftus.flv. YouTube. (n.d.) YouTube - Broadcast Yourself. Retrieved March 20, 2012, from http://www.youtube.com/watch?v=Q8xPfJ8cPhs&feature=related.

2Creating False Memories. (n.d.) UW Faculty Web Server. Retrieved March 20, 2012, from http://faculty.washington.edu/eloftus/Articles/sciam.htm.

3Stanley Milgram Experiment (1961). (n.d.) Experiment-Resources.com. A website about the Scientific Method, Research and Experiments. Retrieved March 19, 2012, from http://www.experiment-resources.com/stanley-milgram-experiment.html.

4Milgram Obedience Study. YouTube. (n.d.) YouTube - Broadcast Yourself. Retrieved March 20, 2012, from http://www.youtube.com/watch?v=W147ybOdgpE&feature=related.

5Kids Marshmallow Experiment. YouTube. (n.d.) YouTube - Broadcast Yourself. Retrieved March 19, 2012, from http://www.youtube.com/watch?v=6EjJsPylEOY.

The Coral Calamity

Environmental changes to coral reefs threaten aquatic biomes.

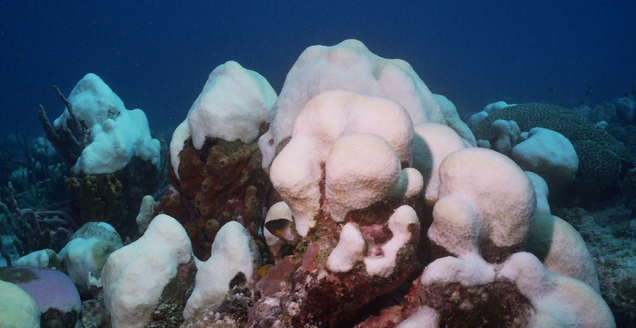

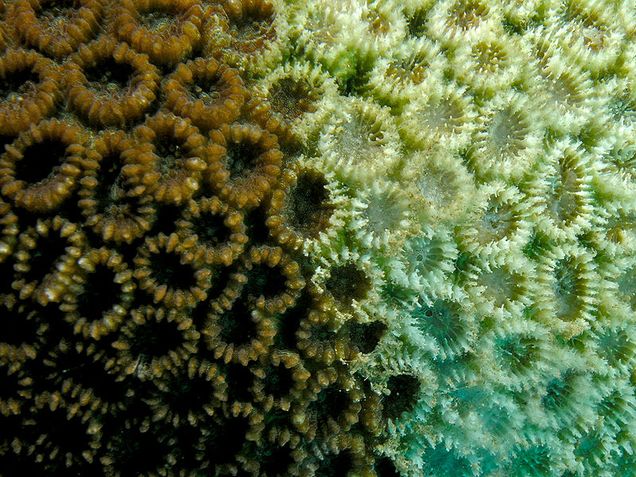

Slight increases in water temperature cause coral bleaching. Credit | Nhobgood via Wikimedia Commons

What if everything you relied upon for survival - your food, your home, your protection - was suddenly stripped away? This tragedy is currently happening to aquatic organisms like plants, fish, and other animals because of the alarming death rates of coral reefs. Corals, invertebrate animals with skeletons composed of crystalline calcium carbonate, are at the very bottom of the ocean food chain, feeding on phytoplankton and zooplankton.1 Coral formations like reefs cover an estimated 0.1-0.5% of the ocean floor in warm and tropical climates surrounding islands.2 Corals can only survive in a narrow optimal temperature range of approximately 73 °F-79 °F.3 These thermal limits mark the temperature boundaries within which corals can live optimally; even slight changes due to recent global warming trends, increasing CO2 levels, and over-nutrification are becoming disastrous. Because of their importance as a habitat for other organisms, the 80% decline in coral populations in various tropical and subtropical regions of the World Ocean is a growing threat to global aquatic biodiversity.4

Importance of Corals

Coral reefs are some of the most productive and diverse communities on the planet; they have many uses and provide many ecological services. Coral reefs function as physical barriers for currents and waves in the ocean, and over time, provide appropriate environments for sea grass beds and mangroves (as can be seen in Figure 1).2 Coral reefs’ high species assortment makes this aquatic biome one of the most biodiverse, providing homes and resources for countless marine animals.5 Corals provide a great deal of other ecological services. They act as a physical barrier that slows erosion and lets land build up by blocking or slowing waves before they reach the rocks of cliffs. Coral reefs also provide biotic services, offering suitable environments for nursing, spawning, feeding and breeding for a number of organisms, including algae, fish, sharks, eels, and sea urchins. Furthermore, corals serve biogeochemical functions as nitrogen fixers in nutrient-poor environments.2

Corals also provide benefits to humans. They generate seafood products consumed and enjoyed around the world, and account for 9-12% of the world’s fisheries.2 Coral reefs contribute to the tourism industry by providing swimming and diving areas, as well as producing aesthetic goods like jewelry and souvenirs.5 Coral has also proven to be useful in the medical world, with successful use of coral skeletons in bone graft operations, and with the increased inclusion of coral reef substances in pharmaceutical products. Additionally, household and public aquariums, perhaps the most visible use of corals, contribute to the economy; the aquarium trade accounted for $24-40 million in 1985 and has been growing rapidly since.2 Knowing the importance of corals gives us motivation for assessing the causes and effects of coral population decline, and gives us insight as to why we should pay attention to this topic.

In total, corals contribute an estimated $30 billion to the global economy.6 However, a combination of disturbances, both natural and anthropogenic, or human-induced, has resulted in global declines of up to 80% in coral reefs over the past three decades.7 The decline is worldwide, with parts of many reefs being lost rapidly.8

Causes and Effects of Coral Population Decline

One of the main causes of the approximately 80% coral population decline is an increase in global temperature, as a warmer climate leads to other underlying causes such as increased ocean acidity.1,4,9 An approximate 2 °F-4 °F (1 °C-2 °C) annual increase in water temperature in the tropical zones of the world’s oceans is likely caused by global warming.4 Prolonged exposure to increased temperature results in the partial or complete loss of corals. Since corals already live dangerously close to the upper limit of their 73 °F-79 °F optimal range, they are vulnerable to the thermal stress that causes mortality.3,7,10 Increasing anthropogenic carbon dioxide (CO2) leads to the warming of the atmosphere and the ocean; these rising upper ocean temperatures cause widespread thermal stress, resulting in a phenomenon known as coral bleaching.6 Coral bleaching is so called because corals lose their color and turn white when affected, since the symbiotic zooxanthellae are released from the host coral.2

Calcification, the process that builds calcium up into a solid mass, is depressed with increased temperature and CO2 levels; this depression inhibits coral skeletal growth and damages coral health.7 Though corals can recover from bleaching events, these events can still leave lasting damage on certain corals. Examples of coral decline after a large bleaching event can be seen in Figure 2. Coral bleaching can also be caused by a decrease in salinity, due to run-off from clear-cutting and urbanization, as well as by the release of poisonous substances like heavy metals.2

Atmospheric CO2 levels are currently 30% higher than their natural levels throughout the last 650,000 years, and in the last 200 years approximately one third of all CO2 emissions have been absorbed by the ocean, affecting marine organisms at all depths.12 As carbon dioxide dissolves in and reacts with seawater, the surface water becomes more acidic. This can negatively affect corals as well as countless biological and physiological processes, such as coral and sea cucumber growth, sea urchin fertilization, and larval development, especially in organisms that cannot survive outside a certain pH range.12 Ocean acidification caused by increasing CO2 levels decreases calcification rates in corals; this decrease results in a decline of coral populations.7

Another large anthropogenic contributor to coral decline is water pollution from waterside construction waste and run-off from deforestation sites. Anthropogenic nutrification, the continuous excess nutrient enrichment of coastal waters, is a large contributor to coral population decline; populated sites have significantly higher nutrient levels than undeveloped sites, and the undeveloped sites have greater coral cover.11 Corals must also endure mechanical damage from ships, anchors, and tourists colliding with them, which becomes harder over time since corals are not strong enough to withstand constant scraping and spillages.4

Future of Coral Populations

An essential part of saving coral populations from further decline is proper assessment of the situation. Researchers should evaluate biotic pigments like chlorophyll in the water around reefs, since they play key roles in the uptake and retention of nutrients; doing so would help us better understand and address nutrification problems.11 Aerial photographs, airborne sensors, and satellite images are promising tools for quality observation and assessment of coral reef structures and communities.9 Remote sensing technology is also a plausible way to monitor coral reef growth in the future, and to find linkages between growth and different land processes so that we can better understand coral growth.13 Satellite imaging, which supplies data across both space and time, appears to be a good way to improve our understanding of coral reef structure and growth, as well as of potential threats to this diverse aquatic system. Remote-sensing data is already used to map and monitor coral reefs, and given these advantages its use is likely to increase.6

The prediction of large-scale coral extinction is very real, with an estimated 80% of reef-building coral populations having been lost in shallow areas of tropical and subtropical zones.4 It is thought that coral bleaching will only become more frequent and intense over the next decades because of anthropogenic climate change, that warming and subsequent water acidification will destroy coral reef-building capacities in the decades to come, and that oceanic pH levels will decrease by 0.3-0.5 units by the end of the century due to continued absorption of anthropogenic CO2.9 Each of these factors will harm marine organisms.12 The future of coral populations is still very much undecided, but the balance can be tipped in favor of saving corals if people are proactive about it. The effects of coral population decline have only recently come into sight, but it is now clear what needs to be done to minimize the causes so that coral populations can start steadily increasing. Major physical damage to coral reefs, usually mechanical, can require between 100 and 150 years for recovery, which puts into perspective how important it is for the planet’s inhabitants to make positive changes.14
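A pH decrease of 0.3-0.5 units can sound small, but pH is a base-10 logarithmic scale, so each unit corresponds to a tenfold change in hydrogen ion concentration. The sketch below is illustrative arithmetic only, with a hypothetical function name; it converts the projected drop into the implied increase in acidity.

```python
def hydrogen_ion_fold_change(ph_drop: float) -> float:
    """Return the factor by which hydrogen ion concentration rises
    when pH falls by `ph_drop` units (pH = -log10 of concentration)."""
    return 10.0 ** ph_drop


# Projected end-of-century range quoted in the text.
for drop in (0.3, 0.5):
    factor = hydrogen_ion_fold_change(drop)
    print(f"pH drop of {drop}: hydrogen ion concentration rises about {factor:.1f}x")
```

In other words, the projected change would roughly double or triple the hydrogen ion concentration of seawater.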

References

1Keller, N.B. and Oskina, N.S. (2009). About the correlation between the distribution of the Azooxantellate Scleractinian corals and the abundance of the zooplankton in the surface waters. Pp. 811-822. Oceanology, 49; 6.

Miller, Richard L. and Cruise, James F. (1995). Effects of suspended sediments on coral growth. Pp. 177-180. Remote Sens. Environ., 53.

2Moberg, Fredrik and Folke, Carl (1999). Ecological goods and services of coral reef ecosystems. Pp. 215-228. Ecological Economics, 29.

3Al-Horani, F. (2005). Effects of changing seawater temperature on photosynthesis and calcification in the scleractinian coral Galaxea fascicularis, measured with O-2, Ca2+ and pH microsensors. Pp. 347-354. Scientia Marina, 69: 3.

4Titlyanov, E.A. and Titlyanova, T.V. (2008). Coral-algal competition on damaged reefs. Pp. 199-210. Russian Journal of Marine Biology, 34; 4.

5Bakus, Gerald J. (1983). The selection and management of coral reef preserves. Pp. 305-312. Ocean Management, 8.

6Eakin, C.M., Nim, C.J., Brainard, R.E., Aubrecht, C., Elvidge, C., Gledhill, D.K., Muller-Karger, F., Mumby, P.J., Skirving, W.J., Strong, A.E., Wang, M., Weeks, S., Wentz, F. and Ziskin, D. (2010). Monitoring coral reefs from space. Pp. 119-130. Oceanography, 23; 4.

7Glynn, Peter W. (2011). In tandem reef coral and cryptic metazoan declines and extinctions. Pp. 767-771. Bulletin of Marine Science, 87;4.

8Coté, I., Gill, J., Gardner, T., Watkinson, A. 2005. Measuring coral reef decline through meta-analyses. Biological Sciences 360; 1454.

9Scopélitis, J., Andréfouët, S., Phinn, S., Done, T. and Chabanet, P. (2011). Coral colonisation of a shallow reef flat in response to rising sea level: quantification from 35 years of remote sensing data at Heron Island, Australia. Pp. 951-956. Coral Reefs, 30.

10Obura, D and Mangubhai, S. (2011). Coral mortality associated with thermal fluctuations in the Phoenix islands. Pp. 607-608. Coral Reefs, 30.

11Costa Jr. O., Leão, Z., Nimmo, M. and Attrill, M. (2000). Nutrification impacts on coral reefs from northern Bahia, Brazil. Pp. 307-314. Hydrobiologia, 40.

12Albright, Rebecca and Langdon, Chris. (2011). Ocean acidification impacts multiple early life history processes of the Caribbean coral Porites astreoides. Pp. 2478-2479. Global Change Biology, 17.

13Stella, J. S., Munday, P. L. and Jones, G. P. (2011). Effects of coral bleaching on the obligate coral-dwelling crab Trapezia cymodoce. Pp. 719-723. Coral Reefs, 30.

14Precht, W. (1998). The art and science of reef restoration. Pp. 16-20. Geotimes, 43.

Seeing Stars

The Galactic Ring Survey aims to put new stars on the map.

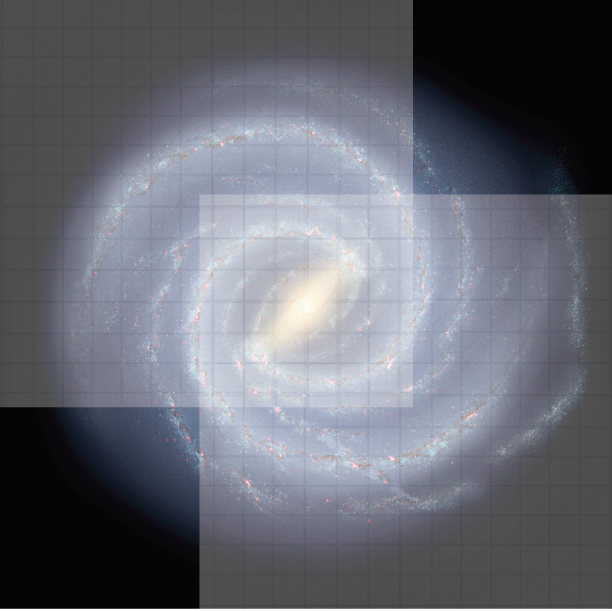

Scientists at Boston University are mapping carbon monoxide in the Milky Way. Illustration by Evan Caughey

A star is born! No, it is not the next American Idol, but one of the celestial bodies illuminating the night sky. Like you, researchers at Boston University have asked themselves how these twinkling objects come to be. To find out, the Astronomy Department initiated the Galactic Ring Survey (GRS) in 1998 in New Salem, Massachusetts.1 Along with the Five College Radio Astronomy Observatory (consisting of the University of Massachusetts, Amherst College, Hampshire College, Mount Holyoke College and Smith College),2 BU began an eight-year-long project intended to scan and map out concentrations of carbon monoxide (13CO) gas in the Milky Way.1 The main purpose of this research was to determine where future stars may form by tracing 13CO gas, which is less common than 12CO or hydrogen in the universe and thus allows for a narrower scope or error field.3

The Galactic Ring

New stars are most likely to form in the galactic ring, a large area of the Milky Way found inside the solar circle.3 The ring contains 70 percent of the molecular gases crucial to star formation, including 13CO, 12CO, oxygen and hydrogen.4 This region is important to the evolution and structure of our Galaxy. However, because the region spans a large expanse of the Milky Way, it is hard to collect a complete image of the area, making it challenging to estimate distances to, from, and between star-forming regions.3 Most of the ring remains to be researched; the GRS has explored only the area between Galactic longitudes 18° and 55.7°, only about 10 percent of the entire galaxy.1
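One way to sanity-check the "about 10 percent" figure is to treat it as the share of the full 360° circle of Galactic longitude covered by the survey. The sketch below does that arithmetic; the function name is hypothetical, and reading the figure as a fraction of longitude (rather than of the galaxy’s area or volume) is an assumption made here, not something the survey documentation is quoted as saying.

```python
def longitude_fraction(start_deg: float, end_deg: float) -> float:
    """Fraction of the full 360-degree Galactic longitude circle
    spanned by the interval from start_deg to end_deg."""
    return (end_deg - start_deg) / 360.0


# Longitude limits of the GRS quoted in the text.
fraction = longitude_fraction(18.0, 55.7)
print(f"Longitude coverage: {fraction:.1%}")
```

The survey spans 37.7° of longitude, which is consistent with the roughly 10 percent quoted above under this reading.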

New Stars and the Milky Way

Stars are the basic building blocks of any galaxy.5 The Milky Way alone has more than a hundred billion stars, and the capability of producing yet another billion.6 Stars contain and distribute many of the essential elements found in the universe, such as carbon, nitrogen, and oxygen.5 In addition, stars act as fossil records for astronomers; their age, distribution, and composition reveal the history, dynamics, and evolution of a galaxy.5

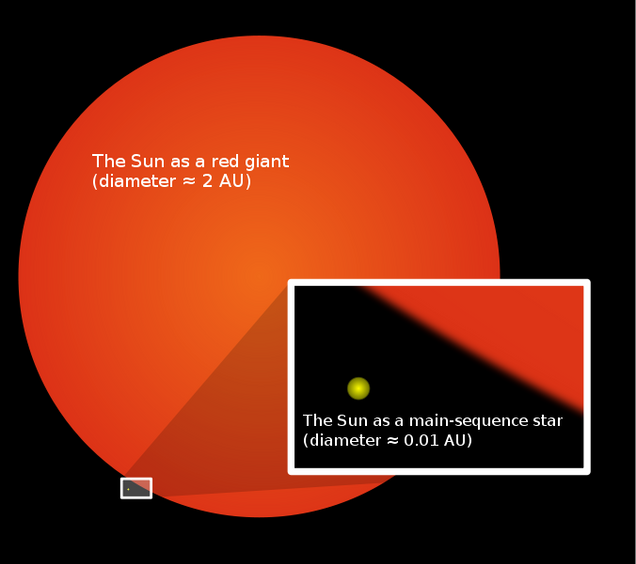

Stars form within dust clouds at different rates and at different times over billions of years. The first form of a star, a mere infant blob of heated gases, is known as a protostar. A new star is formed when a protostar sustains nuclear fusion of hydrogen into helium in its core; the energy released provides the pressure that keeps the star from collapsing under its own weight.5 The star then enters its mature state, a period that can last from just millions of years to more than tens of billions of years. The more massive the star, the shorter its life span. For example, the Sun is an average-sized star and is expected to spend about ten billion years in its mature state.5 Small stars, which can be up to ten times smaller than the Sun, are known as red dwarfs and are the most abundant form of star in the universe.5 On the other hand, the most massive stars can be up to 100 times the size of the Sun and are known as hypergiants.5 However, these stars are extremely rare in our current universe because their life spans are so short and their deaths are so violent.5

Near the end of its life, a star will often grow into a massive red giant. Credit | Mysid via Wikimedia Commons

As a star reaches the end of its life span, hydrogen fusion within its core stops and temperatures inside the core begin to rise as the core collapses.5 At this stage the star becomes a red giant. The dying star still produces heat and light as hydrogen continues to fuse in the layers outside the core.5 Depending on the star’s size, explosive and extravagant chemical and nuclear reactions may occur within it.5 These reactions ultimately determine what form the dead star will take. Most stars will become white dwarfs; others, usually the larger ones, will become novae or supernovae. A nova forms when the outer layers of the dead star explode, while a supernova forms when the core of the dead star explodes.5 The remains of a supernova form either a neutron star or a black hole. Neutron stars are created when electrons and protons in the dead star combine into neutrons as it collapses. Black holes are formed when the mass of the collapsing star is more than about three times the mass of the Sun, and its gravitational pull is so strong that it can draw in anything within gravitational range. At other times the remains of a star mix with dust already present in the area and may contribute to the formation of planets, comets, asteroids or new stars.5

Mapping the Stars

Using a 14-meter telescope with a beam-width of 117 cm, Boston University’s team of astronomers collected a vast number of images that allowed a wide range of research to be conducted.7 The primary advances that emerged from this project were the ability to detect significant samples of star-forming clouds, to determine the distances to those clouds, and, from the distances, to establish the size, mass, luminosity and spatial distribution of the clouds.1

“The images we collected are beautiful, and show that the gas clouds in the Milky Way are not the round blobs we had imagined, but rather more like cobwebs or filaments,” commented Professor Jackson, one of the project’s designers. According to Professor Jackson, the survey discovered over 800 molecular clouds, which lie between stars and have the potential to produce new stars.7 The temperature of these clouds was measured, and they were found to be very turbulent structures. Turbulence within a cloud creates structures of gas and dust that grow in mass and then collapse under their own gravitational pull.5 As a gas and dust structure collapses, material at its core heats up, becoming the basis of a protostar.5 Each of the observed clouds seemed to have the same amount of turbulence and thus the potential to form new stars.

Further Investigations

Although the actual data collection of the project ended in 2005, the GRS continues to impact new research.7 One of the largest areas still being researched is the structure of infrared clouds, which were first discovered in the solar system by the GRS. They are dense, cold clouds that will form large gas clumps.7 These clouds are cold because they are in the early stages of star-formation.7 Eventually these clouds evolve into massive stars.7

According to Professor Jackson, over one hundred research papers have used the data collected by the GRS, during and after its conclusion.7

“I think the GRS data will be used for years to come,” stated Professor Jackson.

This publication makes use of molecular line data from the Boston University-FCRAO Galactic Ring Survey (GRS). The GRS is a joint project of Boston University and Five College Radio Astronomy Observatory, funded by the National Science Foundation under grants AST-9800334, AST-0098562, & AST-0100793.

Works Cited

1Jackson, J. M. (2006). The Boston University–five college radio astronomy observatory. Manuscript submitted for publication, Astronomy Department, Boston University, Boston, MA.

2FCRAO general information. (n.d.) U Mass Astronomy: Five College Radio Astronomy Observatory. Retrieved March 4 2012, from http://www.astro.umass.edu/~fcrao/telescope/.

3Lavoie, R., Stojimirovic, I. and Johnson, A. (2007). Galactic Ring Survey. The GRS. Retrieved March 4 2012, from http://www.bu.edu/galacticring/new_index.htm.

4Where is M13? (2006). The Galactic Coordinate System. Retrieved March 4 2012, from http://www.thinkastronomy.com/M13/Manual/common/galactic_coords.html.