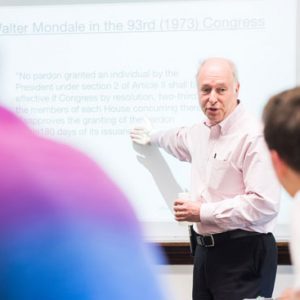

Some time ago, Roscoe Giles gave a talk to BU computer scientists where he used his iPad 3—a handheld, aging technology, he noted, that nevertheless “had the same arithmetic power” as BU’s first $2 million supercomputer from the 1990s.

“What was this giant, aspirational thing in 1990 is like nothing now,” says Giles, a College of Engineering professor of electrical and computer engineering. “A Sony PlayStation 3 had the same power as the Cray supercomputers of 1985.”

The next step in this exponential expansion of computing is the exascale computer, for now just a dream of computer scientists, that would run one billion billion (yes, two “billions”) calculations per second, far more than the current fastest supercomputer, developed by China. It would enhance everything from analyzing climate change to developing better plane and car engines to strengthening national security, says Giles, a 30-year veteran of the BU faculty.

He stepped down last October as a member and chairman of the US Department of Energy’s Advanced Scientific Computing Advisory Committee (ASCAC), which is helping advance President Obama’s executive order last year green-lighting development of exascale capability. ASCAC predicts that the United States will develop such a computer by 2023. When operational, the computer “is not going to be made up of a billion processors,” Giles says, “it’s going to be made up of fewer that are each more powerful and run at lower energy than most we have now.

“You probably won’t own an exascale computer—maybe in 40 years—but you’ll have devices that have exascale computing technology in them. Your smartphone will someday be a hundred times smarter than it is now, for not much more money.”

Giles discussed the brave new world of exascale with Bostonia.

Bostonia: What’s the technological challenge to developing exascale computing?

Giles: Up until about 2004, exponential growth in computing power meant that everything went faster. That stopped; computing didn’t get faster. Instead, you are getting computer chips with multiple processors on the chip. You can put more stuff on a chip; what hasn’t continued is the part that says you can make the chip go faster, which means the energy you need goes up faster.

Is it correct then that the main impediment is that exascale requires such immense energy?

That’s sort of true. It takes much too much power. But if you were willing to expend that power, it’s not clear that it makes sense to do it. What problems will I solve? If you took a ship filled with cell phones and said how much computing power is in that ship, it might be an exascale, but that doesn’t mean that they can work together to solve any particular problem.

So why are we pursuing exascale computing?

You may be able to solve new problems or old problems in new ways. For example, simulating how matter will behave inside a car engine to help engineer a better engine. You need to simulate millions or billions of molecules. Historically, people could never get close to the number of molecules in a real engine in a computer model. So what we do know tends to be based on artfully chosen, very tiny computational samples. Exascale computing enables larger simulations that can have greater fidelity to real systems.

On a larger scale, think about the simulation of climate and climate science. You have to represent individual patches of the Earth inside the computer. In the early days, you’d be dividing the world up into a large number of 100-kilometer squares. What is the temperature in that square? How much ice is there, and what is the reflectivity in that square? As computers got more powerful, they made the square smaller and smaller. The current number is, like, 20 kilometers. You get more understanding in the narrower regions, particularly things like the ocean flows, where at a greater level you can’t see what’s going on. That’ll give me a greater picture of the little eddies and flows. Exascale computing hopes to enable simulations at a one-kilometer scale.
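To give a rough sense of why shrinking the squares is so demanding, the arithmetic can be sketched as follows. This is only an illustration under stated assumptions: the Earth's surface is taken as a rounded 510 million square kilometers, the patches are treated as uniform squares, and the function name `grid_cells` is made up for this example. Real climate models also track vertical layers and time steps, which this sketch ignores.

```python
# Rounded, commonly cited figure for the Earth's total surface area (assumption).
EARTH_SURFACE_KM2 = 510_000_000


def grid_cells(side_km: float) -> int:
    """Rough number of square patches of the given side length needed to cover the Earth."""
    return round(EARTH_SURFACE_KM2 / side_km**2)


# The three scales mentioned in the interview: early models, current models, exascale goal.
for side in (100, 20, 1):
    print(f"{side:>4} km squares: about {grid_cells(side):,} patches")
```

Under these assumptions, going from 100-kilometer to one-kilometer squares multiplies the number of surface patches by 10,000, before accounting for anything else the simulation has to track.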

Exascale also helps engineering companies doing production. The classic example is numerical wind tunnels. In design of airplanes, the test was that you build some mock-up of a plane or section, you put it in a wind tunnel, you measure its properties, you may tweak the engineering. The capability that computing already has offered is to replace some of those simulations by computer simulation. Your ideal would be sitting at a drawing board and saying, “I want to make a wing with this shape out of this material,” and then you press a button and the computer tells you how well that would perform.

It’s the same thing with looking at the airflow around trucks, based on how you design the body of the truck, to save fuel. They design trucks with 20 to 30 percent better gas efficiency through computer modeling with the highest computers we have now. What we’re looking for in exascale is to include more science and understanding of materials in design and production.

What about search engines like Google?

One of the hottest things going is data science. There are problems where exascale computing, married to the right level of data science support, will lead to breakthroughs to do lots of cross-correlation of data.

A Google data center uses a unit of computing that’s basically a tractor-trailer truckful of computers. The data center is a roomful of these containers. Each of those processors is doing a share of the searching, but it’s a challenge to get 10,000 of those things to be communicating rapidly, back and forth, to solve my problem. That’s the kind of problem where exascale computing directly affects the data science, to bring lots of computing power to bear on a single problem.

Are governments and business the only entities likely to own an exascale computer?

I think that’s probably true. But you’d get a machine comparable to some of today’s midsize supercomputers that could be on your desk once exascale technology is around.

The other use is national security. The National Security Agency is really interested in this.

Because you could read more data from terrorists’ cell phones or computers?

Right. They want to be number one in the world at that. That actually parallels Google, in terms of it being a data-centric application, the ability to handle large amounts of data and make correlations.

Why does government need to be involved—why can’t Google or industry develop exascale on their own?

The economic incentives are not aligned to make it possible to develop the exascale machine. There’s not a big enough market. Companies would like better roads and faster railroads, but that doesn’t mean they’re going to create the railroad. They’ll contribute to help building the road, but by themselves, they won’t be able to do it.

What are other governments doing to develop exascale?

They intervene to make it happen, like China. There’s a European consortium that’s investing in exascale. That’s actually a software initiative.

I used to joke that we say, “You want to start a program that’s $200 million a year? Oh my God, where are we going to get the money?” My impression is in China, they say, “Oh, it only costs money? We’ll buy slightly less real estate in New York to pay for this.”

Is it conceivable that China or some other country might get exascale before us?

Oh, sure. It’s conceivable. I don’t know if it’s likely. We have the best ecosystem for scientific computing, meaning that not only do we have powerful computers, but we have people who develop the algorithms. We have the laboratories where the computers live.

Is there a debate in academia about the role of government in developing exascale?

There’s always debate. There’s a debate first about the role of universities versus the role of government labs. And there’s the overall question of how you spend government money.

Are there dangers from exascale computing?

A danger comes when one part of society has access to a technology that no one else has. We will have that technology distributed to more than just the government. We could not get industry to participate if the only end point was to make 10 machines for the government. Our committee had people from industry and from academia.

This goes with having stuff in data centers and cloud services. One vision is you deploy exascale technology and the market for it is going to be data centers. The way people benefit is from their access through the data centers. The iPad 200 will have stuff in it that is exascale.

Human society is incredibly adaptive. The internet itself—I was around before it existed at all. We adapted to that technology. That doesn’t mean the adaptation is not painful. The issues of privacy we’re addressing only arose after the technology came in. We’re going to figure it out; our institutions will adapt. Those of us who are older are horrified by what people put out on Facebook and that they take their cell phone to the bathroom with them and live-stream it. But it’s clear other people are not going to feel that way: “Everybody has naked pictures of themselves in mud on the internet from when they were in college. So what?”
